AI-Generated Phishing vs Human Attacks: 2025 Risk Analysis

Written by

Brightside Team

Published on

Oct 24, 2025

In February 2024, a finance worker at Arup, the multinational engineering firm behind the Sydney Opera House and Beijing's Olympic Stadium, transferred $25 million to fraudsters after attending what appeared to be a legitimate video conference call with the company's CFO and senior leadership team. Every face on the screen looked real. Every voice matched perfectly. The problem? All of them were AI-generated deepfakes created by attackers who had cloned executive voices and faces using publicly available footage.

By October 2025, AI-generated phishing has become the top enterprise email threat according to cybersecurity researchers, surpassing ransomware, insider risk, and traditional social engineering combined. Security teams report a staggering 1,265% surge in phishing attacks linked to generative AI since 2023, while the FBI has issued formal warnings that criminals now leverage artificial intelligence to orchestrate highly targeted campaigns with perfect grammar and contextual awareness. As attackers weaponize the same AI tools your organization uses for productivity, understanding the fundamental differences between AI-generated and human-created phishing attacks determines whether you detect threats or become the next headline.

What is AI-Generated Phishing?

AI-generated phishing refers to social engineering attacks created, personalized, or enhanced using artificial intelligence technologies, particularly large language models and generative AI tools. Unlike traditional phishing crafted by humans, these attacks leverage machine learning algorithms to analyze vast amounts of data including social media profiles, corporate websites, and leaked databases to generate hyper-personalized, grammatically flawless messages at unprecedented scale.

These attacks include:

Text-based emails with perfect grammar and contextual awareness

Voice cloning (vishing) that replicates executive speech patterns

Deepfake videos for video conference fraud

Polymorphic campaigns where each message varies to evade detection

Understanding AI-generated phishing allows security teams to recalibrate their detection systems, training programs, and incident response protocols. Traditional red flags like spelling errors and awkward phrasing have been eliminated by AI, forcing organizations to adopt behavioral analysis, OSINT vulnerability assessment, and adaptive simulation training rather than relying on static email filters. According to 2025 threat intelligence analysis, 82.6% of phishing emails now use some form of AI-generated content, with over 90% of polymorphic attacks leveraging large language models.

Common Misconceptions About AI-Generated Phishing

"AI phishing is just faster human phishing. Detection methods remain the same."

This assumption misses how fundamentally AI transforms attack methodology. While a human attacker might spend 30 minutes crafting a single spear-phishing email, AI tools like WormGPT and FraudGPT generate hundreds of contextually unique variations in the same timeframe.

More critically, these tools produce polymorphic campaigns where each email differs in subject line, sender name, and content structure, rendering signature-based detection systems obsolete. IBM researchers demonstrated in 2024 that AI constructed a sophisticated phishing campaign in 5 minutes using 5 prompts, a task that took human security experts 16 hours.

"Employees can spot AI phishing by looking for grammar mistakes and generic greetings."

This belief is dangerously outdated. Large language models eliminate the linguistic tells that previously exposed phishing attempts, producing native-level grammar, natural tone, and culturally appropriate context.

AI systems scrape publicly available information from LinkedIn, corporate websites, and social media to inject authentic details into messages:

Job titles and reporting structures

Recent company events and acquisitions

Colleague names and relationships

Project-specific terminology

Academic research from 2024 comparing AI-generated phishing with human-crafted versions found that AI-generated emails achieved a 54% click-through rate, compared to just 12% for control emails. When a manufacturing company's procurement team received emails referencing their actual vendor relationships and recent purchase orders, they couldn't distinguish the AI-generated attacks from legitimate correspondence.

"Our annual security training covers phishing basics, so we're protected against AI attacks."

Static, compliance-focused training programs designed for traditional phishing threats provide minimal defense against AI-generated attacks. A 2025 study tracking 12,511 employees at a U.S. financial technology firm found that generic training interventions had no significant effect on click rates (p=0.450) or reporting rates (p=0.417).

Effective protection requires continuous, adaptive training that exposes employees to AI-generated simulations matching real-world sophistication levels. Organizations conducting sustained, behavior-based phishing programs achieve failure rates around 1.5%, while those relying on annual training see negligible improvement over untrained populations according to Verizon's 2025 Data Breach Investigations Report.

How AI Transforms the Phishing Attack Lifecycle

AI doesn't simply automate existing phishing tactics. It revolutionizes every stage of the attack chain from initial target reconnaissance through message crafting, delivery optimization, and response handling. Artificial intelligence provides attackers with capabilities that mirror advanced persistent threat groups, but at a fraction of the cost and time investment.

Attackers now save 95% on campaign costs using LLMs, dramatically lowering barriers to entry and enabling massive scaling.

1. Reconnaissance and OSINT Exploitation

Traditional phishing relied on purchased email lists and generic templates. AI-powered reconnaissance tools systematically harvest open-source intelligence (OSINT) from multiple sources to build detailed target profiles:

Data Sources Exploited:

LinkedIn profiles for organizational hierarchies

Corporate websites for writing styles and terminology

GitHub repositories for technical staff identification

Conference speaker lists for executive schedules

Social media for personal interests and relationships

Advanced attackers feed this OSINT data into LLMs to generate messages that reference real projects, mimic executive communication styles, and exploit trust relationships. An AI system might identify that a finance employee recently connected with a new vendor on LinkedIn, then craft a fake invoice email referencing that exact relationship with perfect timing and context.

Real-World Case: A 2024 attack against a mid-sized healthcare organization used AI to scrape employee LinkedIn profiles, identifying 47 staff members who had recently completed cybersecurity certifications. The attackers then sent personalized "certificate verification" phishing emails that achieved a 38% click rate by exploiting the recency of the legitimate activity.

Platforms like Brightside's OSINT scanning engine identify these exact vulnerabilities before attackers do, mapping employee digital footprints across six categories: personal information, data leaks, online services, interests, social connections, and locations. Organizations can prioritize training based on actual exposure rather than generic risk assumptions.

2. Message Generation: Perfect Grammar and Polymorphic Variations

Large language models eliminate the linguistic indicators that traditionally exposed phishing attempts. AI-generated emails exhibit flawless grammar, appropriate tone, and cultural context whether mimicking urgent CEO directives or casual colleague requests.

Polymorphic generation represents the most significant evolution. Rather than sending identical emails to 1,000 targets, AI systems create 1,000 unique variations:

Different subject lines for each recipient

Varied body content and phrasing

Unique sender information and formatting

Personalized context based on target research

This variability defeats both signature-based email filters and user pattern recognition, as employees can't rely on warning messages from colleagues who received "the same suspicious email."

Real-World Case: A European logistics company's IT team received reports of suspicious payment requests and initially dismissed them as false positives because each message varied substantially in wording and formatting. Only after the third fraudulent transfer did forensic analysis reveal an AI-generated campaign with over 200 unique variants targeting different departments.

According to 2024 threat intelligence data, 73.8% of phishing emails used some form of AI, rising to over 90% for polymorphic attacks. The shift from static to variable attacks fundamentally breaks traditional defense models built on pattern recognition.
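
To make the pattern-recognition problem concrete, here is a minimal sketch of why an exact-match signature catches a resend but misses a reworded variant. The sample messages, the `signature` and `similarity` helpers, and the blocklist are all illustrative assumptions, not how any particular email filter works.

```python
import hashlib
from difflib import SequenceMatcher


def signature(text: str) -> str:
    """Exact-match signature: SHA-256 of the case- and whitespace-normalized body."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def similarity(a: str, b: str) -> float:
    """Rough word-level similarity between two messages, from 0.0 to 1.0."""
    return SequenceMatcher(None, a.lower().split(), b.lower().split()).ratio()


# Hypothetical messages: one already flagged, one reworded for the same lure.
known_bad = "Urgent: please process the attached invoice today to avoid a late fee."
variant = "Hi Dana, could you settle the enclosed invoice this afternoon so we skip the penalty?"

blocklist = {signature(known_bad)}

# A resend that only changes letter case still matches the stored signature.
exact_resend_blocked = signature(known_bad.upper()) in blocklist

# A reworded variant produces a different hash, so the blocklist misses it.
variant_blocked = signature(variant) in blocklist

# Word-level similarity is also low, so a fuzzy text match would struggle too.
variant_similarity = similarity(known_bad, variant)
```

The reworded message carries the same intent but shares almost no surface text with the original, which is why the article's argument points toward behavioral signals rather than content matching.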

3. Multi-Channel Attacks Beyond Email

AI-powered phishing now extends across multiple attack vectors, creating coordinated campaigns that defeat single-layer verification protocols.

| Attack Vector | Technology | Impact Statistics |
|---|---|---|
| Vishing | AI voice cloning from 3 seconds of audio | 442% surge in H2 2024 |
| Deepfake Video | Multi-participant fake video calls | 19% increase Q1 2025 |
| Smishing | SMS attacks bypassing email filters | Steady growth 2020-2025 |
| Quishing | QR codes in emails | 8,878 detected emails in 3 months |

Voice cloning technology can replicate executive voices using as little as 3 seconds of audio obtained from earnings calls, podcasts, or conference presentations. The case of a UK energy firm that lost $243,000 demonstrated that AI-generated voices can capture subtle accents, speech patterns, and emotional inflections that make verification nearly impossible.

Real-World Case: Finance teams now face coordinated attacks where an initial phishing email establishes context, followed by a deepfake voice call from the "CFO" referencing that email to authorize wire transfers. When a pharmaceutical company's accounts payable team received both an email and a follow-up call using their CEO's cloned voice, the combination of channels created urgency and authenticity that bypassed their usual approval workflows.

4. Adaptive Evasion in Real-Time

Advanced AI phishing systems incorporate feedback loops that analyze which messages successfully bypass filters and which get flagged. When a particular email variant triggers spam filters, the AI automatically adjusts:

Language patterns and word choice

Formatting and layout structure

Attachment types and file names

Sender addresses and domain variations

Where human attackers might take days to pivot after detection, AI systems adjust in hours or minutes. A financial services company blocked an initial AI phishing wave targeting their customer service representatives in March 2024, only to face a modified version six hours later using completely different linguistic patterns and sender addresses.

5. Scale and Economics Revolution

Traditional spear-phishing campaigns required significant human labor for researching targets, crafting personalized messages, and managing responses. AI collapses these economics, enabling spear-phishing at mass scale without the labor constraints.

Cost Comparison:

| Campaign Type | Labor Cost | Targets Reached | Speed |
|---|---|---|---|
| Human Spear Phishing | $50-200/hour | 10-50 targets | 1-2 emails/hour |
| AI Mass Personalization | Near-zero marginal | 10,000+ targets | 100+ emails/hour |

Attackers can now generate 10,000 unique, highly personalized emails targeting mid-level employees across hundreds of organizations for roughly the cost of a single traditional spear-phishing campaign. Research on 2024 attack patterns found that spear phishing methods in bulk campaigns cost 20 times more per victim but generate 40 times greater returns for attackers.

Real-World Case: A 2024 campaign targeting 800 small accounting firms used AI to generate customized tax deadline reminder emails referencing each firm's specific state registration details and recent public filings. The attacks achieved a 27% click rate by providing perfect local context that appeared impossible for mass campaigns.

6. Human vs. AI Phishing Comparison

| Factor | Human Attackers | AI-Powered Attacks |
|---|---|---|
| Speed | 1-2 emails per hour | 100+ emails per hour |
| Scale | 10-50 targeted individuals | 10,000+ with equal personalization |
| Language Quality | Regional limitations | Native-level fluency in 50+ languages |
| Adaptation | Days to weeks after detection | Hours after detection |
| Cost | $50-200/hour labor | Near-zero marginal cost |
| Effectiveness Trend | Stable | 55% improvement 2023-2025 |

The critical insight isn't that AI phishing is marginally more effective. AI democratizes advanced spear-phishing capabilities, making APT-level personalization accessible to low-skill criminals with limited resources.

Top 5 AI-Powered Phishing Simulation Platforms for 2025

As AI transforms the threat landscape, simulation platforms must evolve beyond static template libraries to include AI-generated attacks, deepfake training, and OSINT-based personalization. Organizations implementing behavior-based phishing training see a 50% reduction in actual phishing-related incidents over 12 months, according to 2025 security awareness studies.

ROI calculations based on IBM's Cost of a Data Breach Report show that with average breach costs of $4.88 million and improvement rates of 30-60%, even modest training investments generate 5-10× returns.

Brightside AI: OSINT-Powered, Hyper-Personalized Security Training

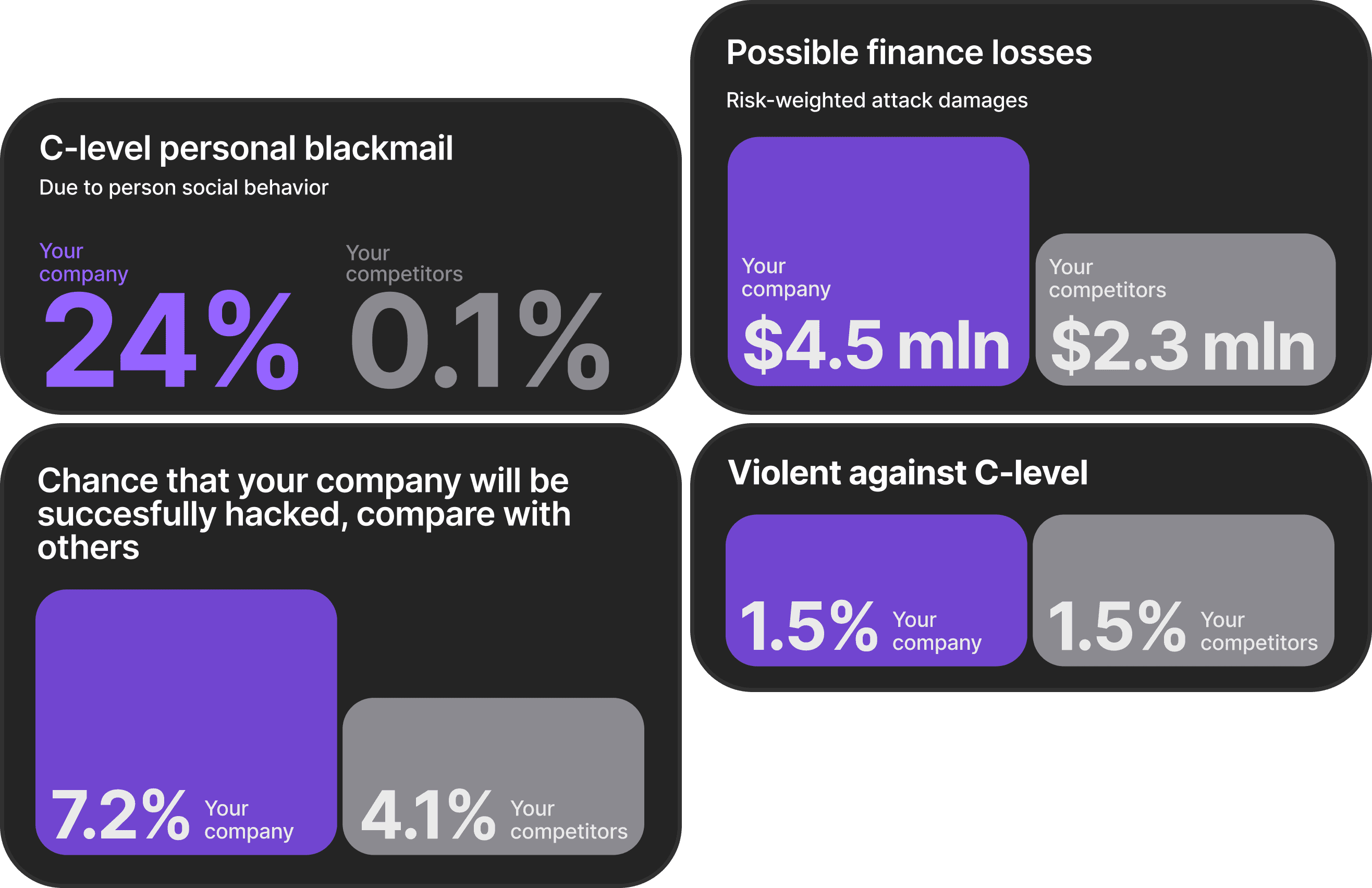

Brightside takes a fundamentally different approach by identifying your actual vulnerabilities before simulating attacks. Unlike competitors that simulate generic threats, Brightside conducts comprehensive digital footprint scans across six categories to calculate individualized vulnerability scores for each employee.

Key Differentiators:

| Feature | Capability | Benefit |
|---|---|---|
| OSINT Vulnerability Scoring | Scans 6 data categories including personal info, data leaks, online services | Risk-based training prioritization |
| AI-Generated Simulations | Uses actual employee OSINT data to create realistic attacks | Training mirrors real threats you face |
| Multi-Channel Coverage | Email, vishing, deepfake | Comprehensive attack vector preparation |
| Privacy-First Architecture | Aggregate metrics without individual exposure | GDPR compliance with employee trust |
| Brighty Privacy Companion | Helps employees manage their digital footprint and explains the basics of personal privacy and security | Sustained engagement and retention |
| Template Simulations | Large library of simulation templates in addition to AI-generated simulations | Templates aligned to NIST Phish Scale for comprehensive training |

The AI engine generates phishing simulations using the same OSINT data that real attackers would exploit. It references employees' actual LinkedIn connections, recent company events, and exposed credentials found in dark web scans. This creates the most realistic training scenarios available because simulations mirror the exact threats your organization faces.

Ideal for: Organizations prioritizing proactive risk reduction through real vulnerability assessment; enterprises in highly regulated industries requiring privacy-by-design approaches; companies facing sophisticated spear-phishing threats targeting specific employee roles.

KnowBe4: Extensive Template Library

KnowBe4 offers the largest content library with 37,000+ phishing templates and frequent updates tied to current events. The Phish Alert Button provides simple user reporting with automated response workflows.

Strengths:

Largest template variety for diverse testing scenarios

Strong compliance coverage (HIPAA, PCI DSS)

Mature integration with Microsoft 365, Active Directory, SIEM

Limitations:

Training content criticized as "cartoonish" by enterprise users

Content fatigue from recognizable vendor template markers

Admin console complexity with steep learning curve

No OSINT vulnerability assessment or personalization

Best for: Organizations prioritizing compliance documentation and broad template variety over measurable behavioral adaptation.

Proofpoint Security Awareness Training: Integrated Email Suite

Proofpoint's strength lies in seamless integration with their email security gateway for unified threat correlation. Strong threat intelligence connects real-world attacks detected by their email filters to training content.

Strengths:

Seamless email security gateway integration

Enterprise-scale deployment for 10,000+ employees

Real-time threat intelligence correlation

Limitations:

Interface sluggishness and unintuitive navigation

Training content described as "stale and not engaging"

Reporting requires manual export to Excel/Power BI

60% false positive rate on TAP service detections

Best for: Organizations already using Proofpoint email security seeking vendor consolidation despite training platform limitations.

Hoxhunt: Behavior-Based Gamification

Hoxhunt employs adaptive difficulty algorithms that automatically adjust simulation complexity based on individual performance. Strong gamification with points, leaderboards, and recognition programs drives engagement.

Strengths:

Adaptive difficulty matching employee skill levels

60% reporting rates after one year vs. 7% for quarterly training

Superior engagement through gamification

Limitations:

Higher price point than compliance-focused alternatives

Smaller template library than KnowBe4

Limited OSINT integration for vulnerability assessment

Best for: Organizations focusing on measurable behavior change; enterprises willing to invest premium pricing for superior engagement.

Adaptive Security: AI-Driven Spear Phishing Focus

Adaptive Security specializes in AI-generated phishing detection training with real-time threat intelligence. Detailed analytics track improvement across simulation difficulty levels using validated frameworks.

Strengths:

Specialized AI-generated phishing focus

NIST Phish Scale integration for validated measurement

Real-time threat intelligence updates

Limitations:

Narrower scope than comprehensive awareness suites

Smaller market presence than established vendors

Limited multi-channel simulation beyond email

Best for: Organizations specifically concerned about AI-generated threats seeking specialized training.

From Detection to Vulnerability-Driven Resilience

Traditional phishing defense relies on detection by building better email filters, analyzing message headers, and training users to spot suspicious indicators. This reactive model fails against AI-generated threats that exhibit no linguistic tells and adapt faster than signature databases update.

The paradigm shift requires moving from "detect and block" to "assess and reduce vulnerability."

Understanding Actual Exposure

Organizations must first answer critical questions:

Which employees have credentials in public breaches?

Whose LinkedIn profiles expose organizational hierarchies?

What personal information enables convincing social engineering?

Which departments face the highest targeted attack risk?

OSINT-powered vulnerability assessment answers these questions, enabling risk-based training prioritization rather than generic awareness campaigns. A manufacturing company reduced phishing susceptibility by 67% after implementing vulnerability-driven training based on employee digital footprint analysis, focusing intensive simulations on the 23% of staff with the highest exposure scores.
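
As a rough illustration of risk-based prioritization, the sketch below scores each employee across the six footprint categories named earlier and picks the most exposed fraction for intensive simulations. The weights, the 0-to-1 exposure values, and the function names are hypothetical assumptions for this example; they do not describe Brightside's actual scoring model.

```python
import math

# Hypothetical weights for the six footprint categories; they sum to 1.0.
WEIGHTS = {
    "personal_information": 0.20,
    "data_leaks": 0.30,
    "online_services": 0.15,
    "interests": 0.10,
    "social_connections": 0.15,
    "locations": 0.10,
}


def exposure_score(exposure: dict) -> float:
    """Weighted sum of per-category exposure values, each clamped to [0, 1]."""
    return sum(
        weight * min(max(exposure.get(category, 0.0), 0.0), 1.0)
        for category, weight in WEIGHTS.items()
    )


def highest_exposure(staff: dict, fraction: float) -> list:
    """Return the top `fraction` of employees by score (always at least one)."""
    ranked = sorted(staff, key=lambda name: exposure_score(staff[name]), reverse=True)
    count = max(1, math.ceil(len(ranked) * fraction))
    return ranked[:count]


# Made-up exposure data, keyed by role rather than by real names.
staff = {
    "analyst": {"data_leaks": 1.0, "social_connections": 0.5},
    "engineer": {"online_services": 0.4},
    "manager": {"personal_information": 0.5, "locations": 0.5},
    "intern": {},
}

# Focus on the most exposed 23% of staff, mirroring the example above.
priority = highest_exposure(staff, fraction=0.23)
```

In practice the weights would need calibrating against real incident data; the point is only that ranking by measured exposure gives a defensible order for who gets intensive training first.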

Measurable Outcomes

Organizations adopting this approach achieve quantifiable improvements:

| Metric | Industry Baseline | Vulnerability-Driven Programs |
|---|---|---|
| Actual Incidents | Baseline | 50% reduction over 12 months |
| Reporting Rate | 9-20% | 60% in sustained programs |
| Failure Rate | 15-30% | 1.5% with continuous training |
| Training Recency Impact | N/A | 4× higher reporting when trained within 30 days |

Employees trained within the last 30 days are 4× more likely to report phishing emails than those trained earlier, demonstrating that recency, frequency, and relevance matter more than content volume.
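
For teams that want to track these numbers themselves, here is a small sketch of how failure rate and reporting rate could be computed from simulation results. The `SimulationResult` record and its field names are assumptions made for illustration, not an export format from any specific platform.

```python
from dataclasses import dataclass


@dataclass
class SimulationResult:
    employee: str
    clicked: bool   # employee clicked the simulated phishing link
    reported: bool  # employee reported the message to the security team


def rate(results: list, outcome: str) -> float:
    """Share of results where `outcome` ("clicked" or "reported") is True."""
    if not results:
        return 0.0
    return sum(getattr(r, outcome) for r in results) / len(results)


# Made-up results from a single campaign.
results = [
    SimulationResult("a", clicked=True, reported=False),
    SimulationResult("b", clicked=False, reported=True),
    SimulationResult("c", clicked=False, reported=True),
    SimulationResult("d", clicked=False, reported=False),
]

failure_rate = rate(results, "clicked")     # 1 of 4 employees clicked
reporting_rate = rate(results, "reported")  # 2 of 4 employees reported
```

Tracking both rates matters because an employee can avoid clicking without ever reporting, and only a report gives the security team a chance to respond.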

How Brightside Enables Transformation

Brightside enables this transformation through a four-pillar approach:

OSINT Vulnerability Scanning: Identifies actual employee exposure across six categories before training begins

AI-Generated Personalized Simulations: Creates attacks using the same data real attackers would exploit

Continuous Micro-Learning: Brighty shrinks the company's attack surface by helping employees reduce vulnerabilities in their digital footprint, and explains the basics of personal privacy and security

Gamified Courses: Brightside AI provides courses covering a wide variety of topics from recognizing basic phishing to vishing and deepfakes, engaging employees with gamification

This continuous learning model addresses research showing training effects fade after 4 months without reinforcement, ensuring sustained behavioral improvement rather than temporary compliance.

Ready to shift from reactive detection to proactive vulnerability reduction? Discover how Brightside's OSINT-powered approach identifies your organization's actual exposure and delivers training that changes behavior, not just checks compliance boxes.

Start your free risk assessment

Our OSINT engine will reveal what adversaries can discover and leverage for phishing attacks.

FAQs About AI-Generated Phishing

What's the goal of AI-powered phishing simulations compared to traditional templates?

Traditional phishing simulations use static templates that may or may not reflect threats relevant to your organization. AI-powered simulations generate attacks based on your employees' actual digital footprints and vulnerability patterns, using the same data real attackers would exploit through OSINT reconnaissance.

Key Differences:

| Traditional Templates | AI-Powered Simulations |
|---|---|
| Generic scenarios | Personalized to actual vulnerabilities |
| Static content | Dynamic, polymorphic variations |
| Random targeting | Risk-based prioritization |
| Limited relevance | Mirrors real threats you face |

If OSINT scans reveal that your finance team has credentials exposed in public breaches, AI simulations can generate credential-harvesting attacks specifically targeting those employees with references to their actual compromised accounts. Research on personalized, adaptive simulations shows a 40% reduction in susceptibility compared to non-personalized training.

How often should organizations run phishing simulations in the AI threat era?

Research consistently shows that training effects fade after 4 months without reinforcement and disappear entirely after 6 months. For AI-generated threats that evolve weekly, annual or quarterly training cycles provide inadequate protection.

Evidence-Based Best Practice:

Micro-simulations every 10-14 days

Brief, relevant scenarios delivered continuously

Regular exposure creates habit formation

Maintains vigilance through retrieval practice

Organizations running weekly or bi-weekly simulations achieve a 50-60% improvement in reporting rates and incident reduction, while those conducting quarterly training show negligible improvement over untrained populations, according to 2025 behavioral security research.
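
One simple way to plan a 10-14 day cadence is to space simulations at a random interval within that window, so employees cannot predict the next one. The sketch below assumes a hypothetical `simulation_dates` helper and an arbitrary start date; it is an illustration of the cadence, not a feature of any platform.

```python
import random
from datetime import date, timedelta
from typing import List, Optional


def simulation_dates(start: date, count: int, seed: Optional[int] = None) -> List[date]:
    """Return `count` dates, each 10 to 14 days after the previous one."""
    rng = random.Random(seed)  # seeded so a plan can be reproduced if needed
    dates = []
    current = start
    for _ in range(count):
        current += timedelta(days=rng.randint(10, 14))
        dates.append(current)
    return dates


# Plan six micro-simulations starting from an arbitrary example date.
plan = simulation_dates(date(2025, 11, 3), count=6, seed=7)
gaps = [(later - earlier).days for earlier, later in zip(plan, plan[1:])]
```

Varying the interval keeps the schedule inside the recommended window while avoiding a fixed weekday pattern that employees could learn to anticipate.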

What happens if employees fail phishing simulations repeatedly?

Employee failure patterns require diagnostic analysis rather than punitive responses. Research on phishing vulnerability reveals that personality traits, cognitive load, time pressure, and contextual factors significantly influence susceptibility.

Effective Response Framework:

Identify Serial Clickers: Track employees who click in three or more consecutive campaigns

Diagnostic Assessment: Analyze failure patterns and contexts

Targeted Intervention: Provide role-based, personalized coaching

Vulnerability Investigation: Check if digital footprint creates genuine high-risk exposure

Supportive Environment: Frame as learning opportunity, not discipline

Punitive approaches like public shaming or performance consequences create fear-based cultures that reduce reporting and drive resistance. One healthcare organization switched from punitive to supportive approaches and increased reporting rates by 340% within six months by celebrating reporters rather than punishing clickers.
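
The first step of the framework, identifying serial clickers, can be sketched as a streak check over each employee's campaign history. This example assumes histories are ordered oldest to newest and counts only the current streak of consecutive clicks; both the data layout and the function names are hypothetical.

```python
def current_click_streak(history: list) -> int:
    """Count consecutive clicks at the end of a campaign history (oldest first)."""
    streak = 0
    for clicked in reversed(history):
        if not clicked:
            break
        streak += 1
    return streak


def serial_clickers(histories: dict, threshold: int = 3) -> list:
    """Names of employees whose current click streak meets the threshold."""
    return sorted(
        name
        for name, history in histories.items()
        if current_click_streak(history) >= threshold
    )


# Made-up histories: True means the employee clicked in that campaign.
histories = {
    "a": [False, True, True, True],  # clicked in the last three campaigns
    "b": [True, True, True, False],  # past streak, broken by the latest campaign
    "c": [True, False, True],        # clicks, but never three in a row
}

needs_coaching = serial_clickers(histories)
```

Using the current streak rather than any historical streak keeps the list focused on employees who need support now, which fits the supportive, non-punitive framing above.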

How does AI-generated phishing training improve defense against real attacks?

AI-generated phishing training works through exposure therapy and behavioral conditioning. By regularly confronting employees with realistic, personalized simulations, training retrains reflexive responses rather than just adding knowledge.

Why Realism Matters:

Median time to click: 21 seconds (faster than conscious analysis)

Generic templates train detection of generic threats only

AI-generated simulations using actual OSINT data create "near-miss" experiences

Practice conditions must match performance conditions for transfer

Organizations using AI-generated simulations matching real threat sophistication demonstrate a 50% reduction in actual phishing incidents compared to template-based approaches.

Can AI-generated deepfake voice and video attacks be detected through training?

Technical detection of high-quality deepfakes remains imperfect, making behavioral verification protocols essential. Training must shift from "spot the fake" to "verify the request" regardless of how convincing the communication appears.

Effective Deepfake Defense Training:

Callback Procedures: Verify urgent requests by calling known numbers, not provided numbers

Pre-Established Safe Words: Create verbal authentication codes for high-stakes requests

Multi-Person Authorization: Require dual approval for large transfers or sensitive actions

Question Authority: Give employees explicit permission to verify unusual requests, even when they come from senior executives

Organizations implementing vishing simulation programs report a 65% improvement in verification behavior during voice-based attack scenarios, according to 2024 training effectiveness studies. The goal isn't perfect detection but creating friction that breaks the social engineering flow.
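
The verification steps above can also be written down as an explicit policy check, so a transfer is released only when every control has been satisfied. The sketch below is a simplified assumption of how such a rule might look; the `TransferRequest` fields and the dual-approval threshold are hypothetical and would need to match your own finance workflow.

```python
from dataclasses import dataclass, field

# Hypothetical amount above which a second approver is required.
DUAL_APPROVAL_THRESHOLD = 10_000


@dataclass
class TransferRequest:
    amount: float
    callback_verified: bool = False    # confirmed by calling a number already on file
    safe_word_confirmed: bool = False  # pre-established verbal code was given
    approvers: set = field(default_factory=set)


def missing_checks(request: TransferRequest) -> list:
    """List the verification steps that still block this request."""
    missing = []
    if not request.callback_verified:
        missing.append("callback to a known number")
    if not request.safe_word_confirmed:
        missing.append("safe word")
    if request.amount >= DUAL_APPROVAL_THRESHOLD and len(request.approvers) < 2:
        missing.append("second approver")
    return missing


# A convincing voice or video call changes none of these flags on its own.
urgent = TransferRequest(amount=250_000, approvers={"ap_clerk"})
blocked_by = missing_checks(urgent)

verified = TransferRequest(
    amount=250_000,
    callback_verified=True,
    safe_word_confirmed=True,
    approvers={"ap_clerk", "controller"},
)
```

The design choice here is that nothing about how convincing the caller sounded enters the decision: the request passes or fails on process alone.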

How do we measure ROI on AI-powered phishing simulation platforms?

Calculate ROI using this validated formula:

ROI = (Expected Loss × Improvement Rate - Platform Cost) / Platform Cost

Components:

Expected Loss: Industry average breach cost ($1.6M-$3.7M) × (your employees / benchmark employees)

Improvement Rate: Conservative 30%, Moderate 50%, Optimistic 60%

Platform Cost: Annual licensing and implementation

Example for 300-Employee Organization:

| Component | Calculation | Result |
|---|---|---|
| Expected Loss | $1.6M × (300/1,000) | $480,000/year |
| Improvement | 50% of expected loss | $240,000 protection value |
| Platform Cost | Annual licensing | $40,000/year |
| ROI | ($240K - $40K) / $40K | 500% |
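
The same calculation can be expressed as a short script for trying different assumptions. The function and parameter names below are illustrative; the figures are the ones from the 300-employee example above.

```python
def expected_loss(benchmark_breach_cost: float, employees: int, benchmark_employees: int) -> float:
    """Scale a benchmark breach cost by organization size."""
    return benchmark_breach_cost * employees / benchmark_employees


def roi(loss: float, improvement_rate: float, platform_cost: float) -> float:
    """ROI = (Expected Loss x Improvement Rate - Platform Cost) / Platform Cost."""
    return (loss * improvement_rate - platform_cost) / platform_cost


# Figures from the 300-employee example.
loss = expected_loss(1_600_000, employees=300, benchmark_employees=1_000)
moderate = roi(loss, improvement_rate=0.50, platform_cost=40_000)

print(f"Expected loss: ${loss:,.0f}/year")
print(f"ROI at 50% improvement: {moderate:.0%}")
```

With the same inputs, the conservative (30%) and optimistic (60%) improvement rates work out to roughly 260% and 620%, which shows how sensitive the result is to the improvement assumption.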

Beyond breach prevention, measure operational gains including incident response costs, help desk ticket reduction from fewer successful attacks, and security team productivity improvements from automated workflows.

What's the first step in implementing vulnerability-driven phishing training?

Begin with comprehensive OSINT assessment to establish your baseline risk profile rather than making assumptions about generic risk.

Implementation Roadmap:

1. Conduct OSINT Vulnerability Scan

   - Map employee digital footprints across 6 categories
   - Identify exposed credentials in public breaches
   - Calculate individualized vulnerability scores

2. Establish Baseline Metrics

   - Current click rates by department
   - Reporting rates and time-to-report
   - High-risk employee concentration

3. Deploy Risk-Based Training

   - Prioritize high-risk individuals for intensive simulations
   - Use AI-generated attacks targeting actual vulnerabilities
   - Establish 10-14 day recurring schedules

4. Measure and Adjust

   - Track behavioral improvement over time
   - Refine simulations based on performance data
   - Maintain continuous learning cadence

The transformation from compliance-based awareness to vulnerability-driven resilience begins with understanding your actual exposure. Book a call to see how Brightside's OSINT engine maps your organization's digital footprint and identifies hidden risk before attackers do.

The Path Forward

The choice between AI-generated and human-created phishing attacks isn't binary. Both exist simultaneously, but AI fundamentally changes the scale, sophistication, and economics of attacks in ways that render traditional defenses insufficient.

Organizations that continue relying on annual training, static email filters, and generic awareness campaigns face adversaries operating with APT-level capabilities at commodity prices.

The Modern Defense Strategy:

Assess actual vulnerabilities through OSINT scanning

Deploy AI-generated simulations mirroring real threats

Train continuously rather than annually

Measure behavioral change, not content completion

The organizations that adapt to this reality will build genuine resilience. Those that don't will become cautionary tales in future case studies.