
Deepfake CEO Fraud: The $50M Voice Cloning Threat to CFOs

Written by Brightside Team

Published on Oct 19, 2025

In March 2025, a finance director at a multinational firm in Singapore joined what seemed like a routine Zoom call with senior leadership. The CFO was there. Other executives appeared on screen. Everyone looked right. Everyone sounded right. The finance director listened to the urgent request for a $499,000 fund transfer and authorized it.

There was just one problem. None of those executives were real.

Every face on that video call was a deepfake. Every voice was artificially generated. The entire meeting was fabricated using AI technology and publicly available media of the actual executives. By the time the company discovered the fraud, the money had vanished into criminal accounts.

This isn't science fiction. This is the new reality of corporate fraud in 2025. Deepfake attacks against businesses surged 3,000% in 2023. Voice cloning fraud specifically rose 680% in the past year. The average loss per deepfake fraud incident now exceeds $500,000. Large enterprises lose an average of $680,000 per attack.

Traditional security controls were built for a world where seeing and hearing meant believing. That world no longer exists. AI technology can now clone voices using just three seconds of audio. Video deepfakes convincingly replicate facial movements, body language, and speaking patterns. The technology improves daily while becoming easier to access and cheaper to deploy.

Deepfakes are AI-generated synthetic media that mimic real people by replicating their appearance, voice, and mannerisms. Voice cloning analyzes voice samples to recreate someone's unique vocal characteristics, allowing attackers to generate fake speech that sounds identical to the target. CEO fraud, a form of business email compromise (BEC), refers to attacks where criminals impersonate executives to manipulate employees into authorizing fraudulent transactions.

Finance teams face the greatest risk. Unlike other departments, they can move money directly. They have authority to approve wire transfers and payment requests. They handle urgent transactions regularly. Attackers know this, which is why CFOs and finance directors have become primary targets for deepfake fraud.

What Are Deepfakes and How Do They Target Finance Teams?

How Does Deepfake Technology Actually Work?

The technology behind deepfakes sounds complex but the concept is straightforward. AI models analyze samples of someone's voice or appearance and learn the mathematical patterns that make them unique. Once trained, these models can generate new content that looks and sounds like the target person saying or doing things they never actually did.

For voice cloning, the process requires surprisingly little source material. Modern AI tools can clone a voice using just three seconds of clear audio. Higher quality clones that capture subtle vocal characteristics might need 10 to 30 seconds of recording. That's it. Three to thirty seconds is all an attacker needs.

Where do they get these voice samples? From the same sources anyone can access. Quarterly earnings calls that companies publish on investor relations pages. Conference presentations uploaded to YouTube. Media interviews and podcast appearances. LinkedIn video posts. Company promotional videos. Every time a CFO participates in these normal business activities, they provide free training data for potential attackers.

The attacker downloads these recordings, isolates the voice, removes background noise, and feeds the clean audio into AI voice synthesis software. The model analyzes pitch, tone, speech patterns, accent, and unique vocal characteristics. Within minutes, it can generate synthetic speech in that person's voice saying anything the attacker types.

Video deepfakes follow a similar process but require slightly more source material. The AI needs to see facial movements from different angles and in different lighting conditions. Conference videos, media appearances, and social media content provide plenty of reference material. The technology can then create synthetic video showing the person's face with synchronized lip movements, natural expressions, and realistic body language.

Why Are Finance Teams the Primary Target?

Finance operations present attackers with the shortest path to money. A successful attack against the IT department might compromise systems or steal data. A successful attack against finance immediately transfers cash to criminal accounts.

Think about what finance teams can do. They initiate wire transfers worth millions. They approve supplier payments. They process payroll. They handle currency exchanges. They authorize transactions without needing approval from multiple departments or going through complex technical processes. Just authorization from the right person, and the money moves.

Attackers understand this direct access to funds. They also understand the urgency culture in finance operations. Payroll must process on time. Supplier payments have deadlines. Deal closings require immediate wire transfers. Currency hedging needs split-second decisions. This constant time pressure creates an environment where "the CEO needs this transfer done now for a confidential acquisition" doesn't sound unusual.

The public profile requirements for finance leadership create additional vulnerability. CFOs participate in earnings calls every quarter. They speak at investor conferences. They give media interviews about financial results. They maintain active LinkedIn profiles for professional networking. This visibility is necessary for the role, but it also provides attackers with extensive audio and video samples for deepfake creation.

Research shows the financial stakes are enormous. Average losses from deepfake fraud now exceed $500,000 per incident. Large enterprises face average losses of $680,000. Individual cases have resulted in losses ranging from $243,000 to $50 million. These aren't small-scale fraud attempts. These are precision attacks designed to maximize payouts.

What Are the Real Cases of Deepfake CEO Fraud?

The Hong Kong Deepfake Video Conference: Up to $39 Million Lost

The case that shocked the business world happened in Hong Kong in early 2024. A finance worker at a multinational company received a message from someone claiming to be the company's UK-based CFO. The message described an urgent and confidential transaction that required immediate attention.

The finance worker, trained to verify unusual requests, suggested a video call to confirm the request. This is exactly what security experts recommend. If something seems suspicious, see and hear the person making the request. The video call was arranged.

Multiple people joined the Zoom call. The CFO was there. Several other senior executives from the company appeared on screen. Everyone looked authentic. Facial movements matched their speech patterns. Voices sounded exactly right. Body language appeared natural. The finance worker saw familiar faces and heard familiar voices discussing a legitimate-sounding transaction.

Following what seemed like proper verification, the finance worker authorized the transfer. The amount: HK$200 million, approximately $25.6 million, with some reports citing total losses reaching $39 million.

The shocking truth emerged later. Every single person on that video call was a deepfake. The entire multi-person meeting was fabricated. Attackers had used publicly available footage of the real executives to train AI models that could generate synthetic video and audio. The technology was convincing enough to fool an employee who was actively trying to verify the request's legitimacy.

This case shattered assumptions about deepfake capabilities. Before Hong Kong, most people thought deepfakes were limited to one-on-one interactions or static images. This attack demonstrated that criminals could orchestrate complex multi-person video conferences with multiple AI-generated participants speaking and interacting naturally.

The Singapore $499,000 Deepfake Zoom Scam

Just a year later, in March 2025, a multinational firm in Singapore fell victim to a similar attack. A finance director received contact from someone posing as the company CFO. The imposter requested an urgent wire transfer for a confidential acquisition.

The attackers had learned from previous cases. They knew finance professionals had heard about deepfake threats and would verify unusual requests. So they proactively suggested a video call to discuss the transaction. This apparent willingness to verify through video created false confidence.

The Zoom call included multiple senior executives. These weren't simple photo cutouts or static images. The deepfakes featured synchronized facial movements, realistic voices that matched each executive's known speech patterns, and natural body language. The technology had advanced to the point where real-time interaction appeared genuine.

The finance director believed the request was legitimate and authorized the $499,000 transfer. The company discovered the fraud only after the real executives learned about a transaction they never requested. By then, the money had already moved through a series of accounts designed to hide the trail.

The $243,000 UK Energy Company Voice Deepfake

One of the earliest major deepfake fraud cases happened in 2019 at a UK energy company. This attack was simpler than the video conference frauds but equally effective.

The CEO of the company's UK subsidiary received a phone call. The caller sounded exactly like the CEO of their German parent company. The voice had the correct accent. The speech patterns matched. Even the conversational mannerisms were right. The caller requested an urgent wire transfer of $243,000 to a Hungarian supplier.

The UK CEO trusted what he heard. Why wouldn't he? The voice was perfect. The request aligned with the urgency culture in business operations. There seemed to be legitimate time pressure. He authorized the transfer.

Investigators later discovered that attackers had used AI voice synthesis to clone the German CEO's voice. They had likely obtained training audio from publicly available sources such as conference presentations or media interviews. The technology could generate synthetic speech in real-time, allowing natural conversation flow during the phone call.

This case was particularly significant because it happened in 2019. The technology then was less sophisticated than what's available now. If attackers could successfully execute this fraud six years ago, imagine what they can do with today's advanced AI tools.

Common Patterns Across All Cases

Every successful deepfake fraud shares certain characteristics. Attackers create urgency and confidentiality to pressure targets into bypassing normal verification protocols. They use publicly available media as source material, requiring no hacking or special access. They target finance staff who have authorization authority for payments.

Most importantly, traditional verification methods completely failed. Video calls didn't work. Voice recognition didn't work. The technology was convincing enough to fool experienced professionals who were actively trying to verify requests. This is the core problem: our old security assumptions don't apply anymore.

How Do Attackers Create Deepfake Voice Clones?

What Do Attackers Need to Clone a Voice?

The requirements for voice cloning are shockingly minimal. Commercial AI tools advertise the ability to clone voices using just three seconds of clear audio. Think about that. Three seconds. Most people speak about three words per second, meaning attackers need to hear you say fewer than ten words to replicate your voice.

For higher quality clones that capture subtle vocal characteristics like emotional inflection, breathing patterns, and laugh characteristics, attackers might use 10 to 30 seconds of audio. Even at the high end, that's barely longer than a single paragraph of speech.

CFOs and finance executives provide abundant voice samples without realizing it. Quarterly earnings calls typically last 30 to 60 minutes and are publicly archived on investor relations websites. Conference speaking engagements get recorded and posted on event websites or YouTube. Media interviews about financial results appear on news sites and podcasts. LinkedIn now supports video posts that many executives use for professional content.

Anyone can access these recordings. No hacking required. No special access needed. Just a web browser and a download button. The executives voluntarily provided this training data by participating in normal business activities that their roles require.

What Is the Technical Process?

The voice cloning process follows several steps, though the entire workflow can be completed in under an hour with the right tools.

First, attackers collect audio recordings of the target executive. They download earnings calls, conference videos, media interviews, or any other publicly available content. They use audio editing software to isolate the voice and remove background noise. The AI model performs better with clean voice samples, so this preprocessing step improves results.

Next comes the actual AI training. Voice cloning tools use neural network models that analyze vocal characteristics. They identify patterns in pitch variation, speech rhythm, accent features, and unique vocal signatures. Modern tools can complete this training in minutes using cloud computing resources.

Once trained, the model can generate synthetic speech. Attackers type whatever text they want the clone to say, and the AI produces audio that sounds like the target executive speaking those words. Advanced systems support real-time generation with minimal latency, meaning attackers can have live phone conversations using the cloned voice.

Some attackers test their clones before attempting fraud. They might call peripheral employees with innocuous questions, gauging whether people recognize the voice as their executive. This testing phase allows refinement and builds confidence in the clone's quality before risking a high-value fraud attempt.

How Realistic Are These Deepfakes?

The realism is frighteningly good. Research shows humans correctly identify high-quality deepfake videos only 24.5% of the time. That's worse than random guessing. For audio-only deepfakes, detection rates are better but still inadequate, averaging around 60-70% accuracy.

The technology has improved dramatically. First-generation voice clones from 2018-2019 had noticeable robotic qualities. They struggled with emotional inflection and sounded slightly mechanical. Anyone paying close attention could detect artificial characteristics.

Current technology is far more sophisticated. Modern voice clones capture subtle details including natural breathing patterns, emotional variation, laugh characteristics, and spontaneous speech fillers like "um" and "ah." The synthesis quality often surpasses human detection capabilities.

Real-time generation adds another layer of danger. Attackers can now have natural conversations using cloned voices. If the target asks unexpected questions, the attacker can respond immediately with synthetic speech that sounds completely natural. There's no telltale delay or mechanical quality that might trigger suspicion.

What Makes Finance Operations Vulnerable to Deepfake Attacks?

How Do Current Verification Protocols Fail?

Most organizations still rely on verification methods that deepfakes have rendered obsolete.

Voice recognition fails completely against high-quality voice clones. Finance staff who regularly talk with executives learn to recognize their voices. This recognition provides a sense of security. The problem is that modern voice clones sound identical to the real person. There's nothing to recognize as fake.

Video call verification, once considered reliable, no longer works either. The Hong Kong case proved that multi-person video conferences can be entirely fabricated with convincing deepfake technology. Telling employees "if something seems suspicious, ask for a video call" now provides false security rather than actual protection.

Callback verification sounds good in theory but fails in practice without proper protocols. If employees call back using a number provided in the suspicious request, attackers simply answer with the deepfake voice. Unless the callback uses a verified, pre-stored contact number from the company directory, it proves nothing.

Email confirmation also falls short. Sophisticated attackers combine deepfake calls with compromised email accounts or spoofed email addresses. After the voice call, they send an email that appears to come from the executive's legitimate address. Finance staff see what looks like multi-channel verification and believe the request is genuine.

What Organizational Factors Increase Risk?

Several aspects of business culture and operations make organizations more vulnerable to deepfake attacks.

Authority gradients create pressure to comply with executive requests without extensive questioning. Finance staff understand organizational hierarchies. They are reluctant to delay or challenge requests from the CFO, CEO, or board members. Attackers exploit this power dynamic by impersonating the highest-authority figures in the organization.

Urgency culture in finance operations normalizes time pressure. Deal closings require immediate wire transfers. Currency hedging decisions need split-second execution. Supplier payment deadlines create stress. When an executive calls requesting an urgent transfer, it fits established patterns. The request doesn't seem unusual because urgency is normal.

Remote work has eliminated natural verification touchpoints. Before distributed workforces became standard, an unusual payment request might trigger questions like "why aren't you in the office?" or "let's discuss this in person when you're back." Remote work removed these informal verification mechanisms. Digital-only communication is now completely normal.

Process documentation gaps leave employees without clear guidance. Many organizations lack documented protocols for handling unusual executive requests. Without specific steps to follow, individual employees make judgment calls under pressure. These ad-hoc decisions often favor speed over verification.

Training deficiencies compound the problem. Most cybersecurity awareness programs focus on email phishing and password security. Few programs specifically address deepfake threats or train finance staff to recognize voice and video manipulation tactics.

How Can Finance Teams Detect Deepfake Attacks?

What Are the Warning Signs?

Certain patterns should immediately trigger additional verification, regardless of how authentic the communication seems.

Request patterns that deviate from normal business processes deserve scrutiny. Unexpected urgency for large transfers without prior discussion raises red flags. Requests for confidentiality that exclude normal approval processes should trigger questions. Unusual payment destinations or recipient accounts need verification. Communication outside normal business hours should be treated as suspicious. Any pressure to bypass standard verification procedures indicates potential fraud.
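The request-pattern signals above can be captured as an explicit checklist so that the decision to escalate does not depend on how convincing the caller sounds. The sketch below is illustrative only: the `RequestSignals` class and its field names are hypothetical, and the rule that any single flag triggers extra verification is an assumption, not a prescribed policy.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class RequestSignals:
    """Hypothetical checklist a finance employee fills in for a payment request."""
    urgent_without_prior_discussion: bool = False
    asks_to_skip_normal_approvals: bool = False
    unusual_destination_account: bool = False
    outside_business_hours: bool = False
    pressure_to_bypass_verification: bool = False


def raised_flags(signals: RequestSignals) -> list[str]:
    """Return the names of all warning signs that were observed."""
    return [name for name, raised in asdict(signals).items() if raised]


def needs_extra_verification(signals: RequestSignals) -> bool:
    """Assumed policy: a single raised flag is enough to escalate."""
    return bool(raised_flags(signals))
```

For example, `needs_extra_verification(RequestSignals(outside_business_hours=True))` escalates the request even if every other signal looks normal.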

Technical indicators can sometimes reveal deepfakes, though human detection remains unreliable. Audio might have subtle unnatural qualities or robotic cadence. Video backgrounds could seem artificial or inconsistent. Facial movements might not perfectly match speech patterns. Lighting in video calls might look wrong. Audio that sounds "too perfect" without any background noise can indicate synthetic generation.

Behavioral inconsistencies provide valuable clues. The caller might not reference recent shared experiences or conversations. Responses to personal questions could seem generic or evasive. The speaking style might lack characteristic mannerisms or phrases the real executive uses regularly. Inability to recall specific details about ongoing projects should raise suspicion. Avoidance of topics the real executive would naturally discuss indicates something is wrong.

What Questions Should Finance Staff Ask?

Training finance teams to ask verification questions can disrupt attacks before money moves.

Personal verification questions target specific recent knowledge that only the real executive would have. "What did we discuss in yesterday's finance meeting?" requires detailed recall of recent events. "Where are you calling from?" followed by specific location questions tests whether the caller actually knows where they claim to be. "Can you tell me the status of [specific current project]?" needs real-time knowledge.

The questions should demand specific, detailed answers rather than simple yes/no responses. Deepfake operators might have access to general information about the company but struggle with recent specific details or nuanced project status.

Process verification challenges signal that you will follow protocol and test whether the caller accepts it. "Let me send you a verification code through our standard communication channel before processing this" tests whether the caller will accept normal security procedures. "This requires dual authorization, so I'm bringing [colleague name] into this conversation" adds verification layers. "I need to follow our standard verification protocol before I can proceed" establishes that security steps aren't optional.

"Let me call you back at your office number to confirm" is particularly effective, provided the finance staff actually calls the verified number from the company directory rather than a number provided by the caller.
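The callback rule can be made mechanical: the number to dial is looked up from the directory and the number offered by the caller is never consulted. This is a minimal sketch under stated assumptions. `VERIFIED_DIRECTORY`, the employee ID, and the phone numbers are placeholders invented for illustration, not a real system.

```python
from dataclasses import dataclass

# Hypothetical directory: employee ID -> number recorded through a verified process.
VERIFIED_DIRECTORY = {
    "cfo-001": "+44 20 7946 0001",
}


@dataclass(frozen=True)
class PaymentRequest:
    requester_id: str           # who the caller claims to be
    callback_number_given: str  # number the caller asked to be reached on
    amount_usd: float


def callback_number(request: PaymentRequest, directory: dict[str, str]) -> str:
    """Return the number to dial, taken only from the verified directory.

    The number supplied in the request is deliberately ignored.
    """
    try:
        return directory[request.requester_id]
    except KeyError:
        raise LookupError(
            f"No verified number for {request.requester_id!r}; escalate instead of calling"
        ) from None
```

If the claimed requester has no directory entry, the function raises rather than falling back to the caller's number, so the safe path is the default.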

What Prevention Strategies Should CFOs Implement?

How Do You Build Robust Verification Protocols?

Effective prevention requires systematic protocols that don't rely on human judgment to detect deepfakes.

Multi-channel verification should be mandatory for high-value or unusual transactions. Voice call requests must be verified through callback to a known phone number plus additional confirmation via email to a known address or in-person verification if possible. Video call requests need confirmation through separate channels like text messages to verified mobile numbers. Email requests require phone verification using known contact numbers.

The key is independence. Each verification channel must use contact information already stored in the organization's directory, never details provided in the suspicious communication. The channels must be genuinely separate, not just different methods that route through potentially compromised accounts.
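One way to express the independence requirement is to count only confirmations whose contact details came from the directory, and to require at least one of them on a different channel from the original request. The sketch below assumes hypothetical channel labels (`"phone"`, `"email"`, `"sms"`, `"video"`) and a hypothetical `Confirmation` record. Real policies may demand more than one independent channel.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confirmation:
    channel: str         # e.g. "phone", "email", "sms", "in_person"
    contact_source: str  # "directory" or "request"


def independently_verified(request_channel: str, confirmations: list[Confirmation]) -> bool:
    """True if at least one directory-sourced confirmation used a different channel."""
    trusted = [c for c in confirmations if c.contact_source == "directory"]
    return any(c.channel != request_channel for c in trusted)
```

A phone callback to a directory number alone does not satisfy this check for a phone request. It needs the additional email or in-person confirmation described above.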

Dual authorization requirements add critical protection. Two separate individuals must independently verify and authorize transactions exceeding defined thresholds. The second authorizer cannot rely on the first person's verification. They must follow the complete protocol independently. This prevents a single successful manipulation from compromising the transaction.
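A dual-authorization gate might look like the following sketch. The threshold value, the `Approval` record, and the rule that the requester cannot approve their own request are assumptions made for illustration. Each organization defines its own thresholds.

```python
from dataclasses import dataclass

DUAL_AUTH_THRESHOLD_USD = 50_000  # hypothetical threshold


@dataclass(frozen=True)
class Approval:
    approver_id: str
    verified_independently: bool  # approver ran the full protocol themselves


def may_execute(amount_usd: float, requester_id: str, approvals: list[Approval]) -> bool:
    """Require two distinct, independently verifying approvers above the threshold."""
    valid_approvers = {
        a.approver_id
        for a in approvals
        if a.verified_independently and a.approver_id != requester_id
    }
    needed = 2 if amount_usd > DUAL_AUTH_THRESHOLD_USD else 1
    return len(valid_approvers) >= needed
```

Using a set of approver IDs means the same person approving twice still counts once, and an approver who relied on a colleague's verification does not count at all.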

Challenge questions and shared secrets create verification methods that deepfakes cannot bypass. Establish rotating questions between executives and finance staff that reference recent specific events, ongoing projects, or personal details only genuine participants would know. Change these questions periodically to prevent compromise if an attacker observes one use.

Mandatory waiting periods remove the urgency that attackers rely on. Even with successful verification, require a minimum delay (30 minutes to 2 hours) before executing unusual high-value transfers. This cooling-off period allows time for additional verification and eliminates the effectiveness of pressure tactics.
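The waiting period is simple to enforce in code. The sketch below assumes a 30-minute default, the lower bound mentioned above, and uses hypothetical function names. The delay is measured from the moment verification completed, not from when the request arrived.

```python
from datetime import datetime, timedelta, timezone

COOLING_OFF = timedelta(minutes=30)  # assumed default; the text suggests 30 minutes to 2 hours


def earliest_execution(verified_at: datetime, delay: timedelta = COOLING_OFF) -> datetime:
    """Earliest time an unusual high-value transfer may be released."""
    return verified_at + delay


def can_execute(verified_at: datetime, now: datetime, delay: timedelta = COOLING_OFF) -> bool:
    """True once the cooling-off period has fully elapsed."""
    return now >= earliest_execution(verified_at, delay)


# Example: verification finished at 09:00 UTC, so release is blocked until 09:30 UTC.
verified = datetime(2025, 3, 1, 9, 0, tzinfo=timezone.utc)
release_time = earliest_execution(verified)
```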

What Technical Controls Provide Protection?

Technology should support, not replace, robust verification protocols.

Voice biometric systems analyze multiple vocal characteristics beyond simple voice recognition. These systems measure over 100 unique voice features including vocal tract shape, speaking speed, and micro-variations that current deepfakes struggle to replicate perfectly. While not foolproof, they add a technical verification layer.

Liveness detection technology requires real-time, unpredictable actions during video calls. The system might ask the person to hold up a specific number of fingers, read a randomly generated code, or make specific facial expressions. Pre-recorded content cannot respond to these spontaneous requests, and real-time deepfakes are more likely to show visible artifacts when forced to handle them.

Digital watermarking adds tamper-resistant metadata to legitimate communications. This allows authentication of genuinely authorized requests while making unauthorized content identifiable. The watermarks must be established in advance through verified channels.
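As a rough illustration of tamper-evident metadata, the sketch below attaches a keyed message authentication code (HMAC) to a payment request. This is a swapped-in technique chosen for simplicity, not a media watermarking scheme and not necessarily what any given product uses. The key, the field names, and the request contents are hypothetical, and the key is assumed to have been exchanged in advance through a verified channel.

```python
import hashlib
import hmac
import json


def sign_request(key: bytes, request: dict) -> str:
    """Compute an HMAC-SHA256 tag over a canonical JSON form of the request."""
    payload = json.dumps(request, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def is_authentic(key: bytes, request: dict, tag: str) -> bool:
    """Check the tag in constant time; any change to the request invalidates it."""
    return hmac.compare_digest(sign_request(key, request), tag)
```

A request whose amount or beneficiary is altered after signing fails `is_authentic`, as does any request created by someone who does not hold the key.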

Encrypted communication channels create secure paths for financial authorizations. End-to-end encryption prevents interception and tampering. Establish these channels when everyone's identity is verified, then require their use for all financial communications. Messages received through other channels automatically trigger additional verification.

Transaction monitoring systems use AI to analyze patterns and identify unusual requests. These systems flag activities that deviate from established norms, providing an additional security layer that doesn't depend on human detection of deepfakes.
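Production monitoring systems use far richer models, but the underlying idea of flagging deviation from an established norm can be shown with a simple z-score check. This is a statistical stand-in, not an AI model. The threshold of 3 standard deviations and the choice to flag requests with too little history are assumptions.

```python
from statistics import mean, stdev


def is_unusual(amount: float, history: list[float], z_threshold: float = 3.0) -> bool:
    """Flag an amount that deviates strongly from past transfers to the same payee."""
    if len(history) < 2:
        return True  # too little history to judge; send for manual review
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return amount != mu  # all past transfers identical; any change is unusual
    return abs(amount - mu) / sigma > z_threshold
```

With past transfers of roughly $9,000 to $13,000, a $499,000 request is flagged while a $12,500 request is not. A first-time payee is always flagged.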

How Should Organizations Prepare Through Training?

Technology and protocols only work if people understand and follow them. This is where training becomes critical.

Organizations need to prepare teams for deepfake threats specifically. Generic cybersecurity awareness training doesn't adequately address voice cloning attacks, video deepfakes, and sophisticated impersonation tactics. Finance staff need to understand how convincing these attacks can be and what specific steps to take when verification fails.

Scenario-based training provides the most effective preparation. Employees need to experience realistic simulations of deepfake attacks in controlled environments before facing actual fraud attempts. This hands-on training helps them recognize manipulation tactics and builds confidence in following verification protocols under pressure.

Brightside AI's platform addresses this training gap with realistic attack simulations. The deepfake simulations prepare teams for sophisticated video and audio manipulation tactics by creating controlled experiences that feel authentic. Organizations can deploy these simulations and measure how employees respond, identifying who follows protocols and who needs additional training.

The training academy includes modules on deepfake identification, voice phishing recognition, and CEO fraud tactics. The chat-based format makes complex topics accessible, while achievement badges and gamification elements keep employees engaged. Most importantly, the training is designed specifically for AI-powered threats, using the same technologies attackers employ.

Regular testing maintains readiness. Conduct quarterly simulations using updated tactics as deepfake technology evolves. Review and adjust protocols based on emerging attack methods. Share information about attempted attacks with all finance staff to maintain awareness and reinforce the reality of the threat.

Create an organizational culture where questioning authority for verification is expected and valued. Employees need explicit permission to follow verification protocols even when executives express impatience or pressure. Make it clear that security procedures are non-negotiable, and that employees who delay suspicious requests will be supported, not criticized.

How Brightside AI Protects Organizations from Deepfake Threats

The statistics are clear. Deepfake fraud attempts surged 3,000% in 2023. Voice deepfakes rose 680% in the past year. Average losses exceed $500,000 per incident. Organizations face millions in potential losses because security training and verification protocols weren't designed for threats where seeing and hearing are no longer reliable.

Traditional cybersecurity training teaches employees to spot phishing emails and create strong passwords. But how do you prepare a finance team for a phone call that sounds exactly like your CFO requesting an urgent wire transfer? How do you train them to question a video conference call where everyone looks and sounds authentic?

Brightside AI addresses this critical gap with comprehensive protection designed specifically for AI-powered threats.

The platform's deepfake simulations replicate actual attack scenarios that finance teams might face. These aren't generic exercises. They're realistic simulations that demonstrate exactly how convincing voice cloning and video deepfakes can be. Employees experience these attacks in controlled environments where mistakes become learning opportunities instead of million-dollar losses.

Organizations can deploy voice phishing simulations that use realistic AI-powered phone calls to test whether finance staff follow verification protocols when they receive suspicious authorization requests. The deepfake simulations prepare teams for sophisticated video and audio manipulation by showing them what these attacks actually look and sound like.

Most importantly, the platform measures responses. Organizations gain clear metrics showing which employees followed protocols, who authorized suspicious requests, and where additional training is needed. This data-driven approach identifies vulnerabilities before real attackers exploit them.

The interactive cybersecurity courses provide accessible education on critical topics including deepfake identification, voice phishing recognition, and CEO fraud tactics. The chat-based format with Brighty, the AI assistant, makes complex concepts understandable. Gamification elements including mini-games and achievement badges keep employees engaged with material that might otherwise seem dry.

The training continuously updates with emerging threats. As deepfake technology evolves and attackers develop new tactics, Brightside AI incorporates these into simulations and educational content. Organizations maintain current protection rather than relying on outdated training that doesn't reflect the real threat landscape.

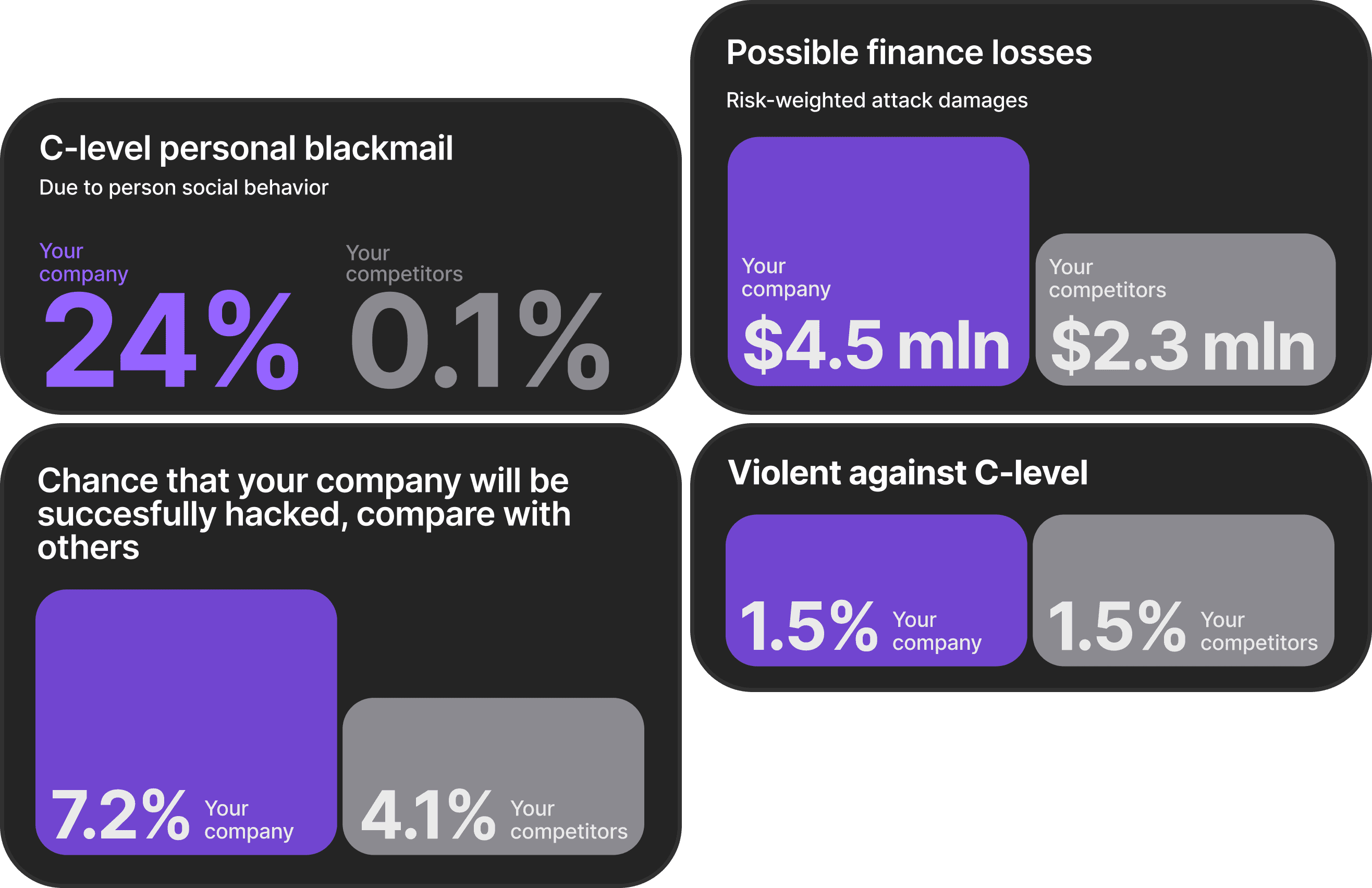

Beyond training, Brightside AI's OSINT scanning identifies which executives have extensive public media exposure that could be used for deepfake creation. CFOs who frequently appear in earnings calls, conference presentations, and media interviews have larger attack surfaces. Understanding this exposure allows organizations to implement stronger verification protocols for these high-risk individuals.

The B2B company portal provides enterprise-wide visibility into organizational security posture. Security teams can assign training, deploy simulations, track completion rates, and measure team readiness through centralized management. This comprehensive approach ensures consistent protection across the entire organization rather than relying on individual employee awareness.


The Time to Act Is Now

Deepfake fraud represents one of the fastest-growing cybersecurity threats facing finance operations. Average losses exceed $500,000 per incident. Large enterprises lose an average of $680,000. Individual cases have resulted in losses ranging from $243,000 to $50 million.

The financial cost is only part of the damage. Organizations that fall victim to deepfake fraud face reputational harm, regulatory scrutiny, audit complications, insurance issues, and loss of stakeholder trust. The finance director who authorized that $499,000 transfer in Singapore followed what they believed were proper verification procedures. Yet the money still vanished.

The technology continues improving while becoming more accessible. What required sophisticated technical expertise and expensive equipment in 2019 can now be accomplished with free online tools and three seconds of audio. The barrier to entry for attackers keeps dropping while the quality of deepfakes keeps rising.

CFOs cannot afford to wait. Every quarterly earnings call, every conference presentation, and every media interview creates more training data for potential attackers. The public presence that finance leadership roles require simultaneously creates the vulnerability that deepfake fraud exploits.

What Should CFOs Do Immediately?

Start this week by reviewing current payment authorization protocols. Document every step in the workflow. Identify where voice or video verification serves as the primary security control. These are your greatest vulnerabilities. Implement mandatory callback requirements using only verified contact numbers stored in company directories. Never use contact information provided in the suspicious request itself.
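The callback rule can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `VERIFIED_DIRECTORY`, the example addresses, and the phone numbers are hypothetical stand-ins for whatever company directory your organization maintains. The point it shows is that the number supplied with the request is never used.

```python
# Hypothetical directory of verified callback numbers, maintained
# independently of any incoming payment request.
VERIFIED_DIRECTORY = {
    "cfo@example.com": "+1-555-0100",
    "ceo@example.com": "+1-555-0101",
}


def callback_number(requester_id, number_in_request=None):
    """Return the number to call back, taken only from the verified directory.

    number_in_request is accepted but deliberately ignored: contact details
    supplied with the request itself are never trusted.
    """
    verified = VERIFIED_DIRECTORY.get(requester_id)
    if verified is None:
        # No verified entry on file: stop and escalate rather than
        # falling back to the number the requester supplied.
        raise LookupError(f"No verified number on file for {requester_id!r}")
    return verified
```

If the requester has no verified entry, the sketch raises an error instead of falling back to the supplied number, so the only options left are a directory callback or an escalation.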

Establish dual authorization requirements for transactions exceeding defined thresholds. Two separate individuals must independently verify and authorize using the complete protocol. This prevents a single successful manipulation from compromising the transaction.
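As a rough sketch of how a payment workflow might enforce this rule, the Python below releases a payment above the threshold only after two distinct people have approved it. The `PaymentRequest` class and the `DUAL_AUTH_THRESHOLD` value are hypothetical; each organization would set its own threshold and approval roles.

```python
from dataclasses import dataclass, field

# Hypothetical threshold; each organization defines its own.
DUAL_AUTH_THRESHOLD = 10_000


@dataclass
class PaymentRequest:
    amount: float
    approvers: set = field(default_factory=set)

    def approve(self, approver):
        # A set records each individual once, so the same person
        # approving twice still counts as a single approval.
        self.approvers.add(approver)

    def can_release(self):
        # Above the threshold, two separate individuals must approve.
        required = 2 if self.amount > DUAL_AUTH_THRESHOLD else 1
        return len(self.approvers) >= required
```

Because approvals are counted per person, one manipulated employee approving repeatedly cannot release a large transfer alone.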

Schedule a deepfake threat briefing with finance leadership. Ensure every member of the team understands what these attacks look like, how convincing they can be, and why traditional verification methods have become inadequate. Share the Singapore case, the Hong Kong case, and the UK energy company case. Make the threat real and immediate.

This month, conduct a comprehensive risk assessment of finance operations. Map every process where executives can request urgent payments or wire transfers. Identify all the verification steps currently in place and evaluate whether they would stop a sophisticated deepfake attack. Be honest about gaps where urgency culture or authority gradients might override security procedures.

Implement multi-channel verification protocols that require independent confirmation through at least two separate communication channels. Never allow a single method, even video calls, to serve as sufficient verification for high-value or unusual transactions.
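One way to express the multi-channel rule is as a simple check, sketched here in Python. The function name and the channel labels ("video_call", "callback_phone", "email") are hypothetical; the only logic taken from the protocol above is that confirmations must arrive over at least two distinct channels.

```python
def is_verified(confirmations, minimum_channels=2):
    """Return True when enough distinct channels have confirmed the request.

    confirmations is an iterable of (channel, confirmed) pairs, for
    example ("video_call", True). Repeated confirmations on the same
    channel count only once.
    """
    confirmed_channels = {
        channel for channel, confirmed in confirmations if confirmed
    }
    return len(confirmed_channels) >= minimum_channels
```

Under this check, a convincing video call on its own never satisfies verification, no matter how many times it is repeated.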

Establish challenge questions with executives. Create shared secrets or rotating verification questions that reference recent specific events, ongoing projects, or personal details only genuine participants would know. Change these periodically and ensure everyone understands their critical role in preventing fraud.

This quarter, deploy realistic deepfake attack simulations for all finance staff. Generic cybersecurity training isn't sufficient. Teams need hands-on experience with actual deepfake scenarios. Brightside AI's platform provides these simulations, allowing employees to experience convincing attacks in controlled environments before facing real fraud attempts.

Track results and measure readiness. Identify which employees follow protocols and which need additional training. Use objective metrics to demonstrate to boards and auditors that the organization is actively preparing for this emerging threat.

Build an organizational culture where questioning authority for verification is expected, not discouraged. Finance staff need explicit permission to delay requests from executives until verification is complete. Make it clear that employees who follow security protocols will be supported, even when executives express impatience or pressure.

The next suspicious phone call your finance team receives might be the one that costs $500,000. Will they recognize it? Will they follow verification protocols? Will they resist the urgency and authority pressure that makes these attacks successful?

Take action today. Deepfake fraud isn't a future threat. It's happening right now to organizations just like yours. The question is whether you'll prepare your team before or after the attack that makes your company the next cautionary tale in someone else's security presentation.