
How to Defend Against Deepfake Attacks: 2025 Guide

Written by

Brightside Team

Published on

Oct 14, 2025

The world woke up to a harsh reality in early 2025. In just the first three months of the year, 179 deepfake incidents were reported, a number that surpassed the entire 2024 total by 19%. This is not a warning about future threats. This is happening right now, and the scale is staggering.

Deepfakes are no longer experimental technology reserved for nation-state actors or elite hacking groups. They have become mainstream cybercrime tools, accessible to anyone with basic technical skills and a few dollars. The consequences are severe. Financial losses from deepfake fraud reached $410 million in just the first half of 2025, compared to $359 million for all of 2024. To put this in perspective, the losses in six months exceeded the previous year by 14%.

Before diving deeper, let's clarify what we mean. Deepfakes are synthetic media created using artificial intelligence to generate fake videos, audio recordings, or images that look and sound convincingly real. They can make it appear as though someone said or did something they never actually did. Voice phishing, or vishing, is a social engineering attack where criminals use phone calls to trick victims into revealing sensitive information or transferring money. When vishing is combined with AI-powered voice cloning, the result is a threat that exploits our most basic human instinct: trust.

Why Are Deepfake Attacks Surging So Rapidly?

The numbers tell a disturbing story. Deepfake files have exploded from 500,000 in 2023 to a projected 8 million in 2025, a sixteenfold (1,500%) increase in just two years. This growth is not simply about more attacks. It reflects a fundamental shift in how these attacks are executed.
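
Growth figures like these are easy to misstate because fold change and percentage increase are different quantities: a sixteenfold rise corresponds to a 1,500% increase. The short Python sketch below is our own illustration, not part of any cited study. It checks the file-count figure above and the loss figures quoted earlier in this article.

```python
def percent_increase(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100


def fold_change(old: float, new: float) -> float:
    """How many times larger new is than old."""
    return new / old


# Deepfake files: 2023 count versus 2025 projection.
files_2023, files_2025 = 500_000, 8_000_000
print(fold_change(files_2023, files_2025))       # 16.0
print(percent_increase(files_2023, files_2025))  # 1500.0

# Losses in USD millions: all of 2024 versus the first half of 2025.
losses_2024, losses_h1_2025 = 359, 410
print(round(percent_increase(losses_2024, losses_h1_2025), 1))  # 14.2
```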

Real-time deepfake manipulation has arrived. Attackers can now generate convincing video and audio during live conversations. This represents a complete transformation from static, pre-recorded content to dynamic, interactive deception that responds naturally to questions and adapts to conversation flow. Voice phishing attacks using AI-generated voices increased by 442% in late 2024, and the technology has become so sophisticated that even trained security professionals struggle to identify fake voices.

The tools themselves have become frighteningly accessible. Attackers now use platforms like Xanthorox AI that automate both voice cloning and live call delivery, removing the need for technical expertise or lengthy preparation. These tools integrate seamlessly with enterprise systems like Microsoft Teams, Zoom, and traditional phone networks, allowing criminals to impersonate colleagues and blend perfectly into normal business workflows.

Modern attacks follow a sophisticated multi-channel approach. A typical deepfake fraud campaign begins with an email from what appears to be a trusted executive, followed by a video call where AI-generated avatars deliver urgent requests for financial transfers or system access. The sophistication lies in the orchestration, combining multiple communication channels to build credibility and bypass single-point verification measures.

What Financial Impact Are Organizations Actually Facing?

The financial consequences vary dramatically by geography and sector, but all the numbers point in the same troubling direction. Mexico leads globally with average losses of $627,000 per deepfake incident, followed by Singapore at $577,000 and the United States at $438,000. These regional differences reflect varying levels of organizational preparedness, regulatory frameworks, and cultural factors affecting how employees verify unusual requests.

Financial services organizations face the highest risk, experiencing average losses of $603,000 per incident, 34% higher than the general business average of $450,000. This sector has become the primary target because it offers direct access to funds and has a legitimate need for fast transaction processing. In fact, 53% of financial professionals reported being targeted by deepfake scams in 2024 alone.

The cryptocurrency sector has been devastated. Deepfake-related incidents on crypto platforms surged 654% from 2023 to 2024. Crypto platforms now see the highest rate of fraudulent activity attempts, which rose nearly 50% year-over-year, from 6.4% in 2023 to 9.5% in 2024.

Looking ahead, the projections are even more alarming. Deloitte forecasts that generative AI fraud will reach $40 billion by 2027 in the United States alone. Contact center fraud, heavily leveraging voice deepfakes, is projected to hit $44.5 billion globally in 2025. These are not abstract future scenarios; they are extrapolations based on current growth trajectories that show no signs of slowing.

Perhaps most concerning is the confidence gap. While 56% of businesses claim confidence in their deepfake detection abilities, only 6% have actually avoided financial losses from these attacks. This massive disconnect between perception and reality indicates widespread overconfidence that leaves organizations vulnerable.

Can Humans Actually Detect Deepfakes?

The short answer is no, not reliably. Human detection accuracy hovers at just 55-60%, barely better than random chance. Think about that for a moment. Asking employees to visually or audibly identify deepfakes is essentially asking them to flip a coin.

Research shows that only 0.1% of the global population can reliably detect deepfakes, despite 71% being aware of their existence. This massive awareness-to-capability gap reveals a fundamental problem with awareness-based defense strategies. Knowing that deepfakes exist does not translate into the ability to identify them in real-world, high-pressure situations.

The situation becomes even more complex when we examine training effectiveness. Studies indicate that feedback-based deepfake detection training can improve accuracy by around 20%. That sounds positive until you consider the psychological costs. Research by Dr. Andreas Diel found that while training improved detection accuracy, it also increased emotional arousal, decreased emotional valence, and increased anxiety toward AI misuse. Participants became more accurate but also more stressed, less confident, and more anxious about technology.

One study of journalists found that professionals who used deepfake detection tools sometimes overrelied on them, creating false confidence that actually weakened their overall verification practices. The tools "often contributed to doubt and confusion" when their results contradicted other verification methods. This suggests that technological solutions alone may create as many problems as they solve.

How Well Do Current Detection Technologies Work?

Unfortunately, not well enough. Automated detection systems experience 45-50% accuracy drops when confronted with real-world deepfakes compared to controlled laboratory conditions. This performance degradation occurs because real-world attacks use techniques and tools that differ from the training data used to build detection models.

Research conducted by CSIRO found that leading detection tools collapsed to below 50% accuracy when confronted with deepfakes produced by tools they were not trained on. As a result, detection capabilities consistently lag behind generation technologies: each new generation technique opens a window in which existing detectors perform poorly until they are retrained.
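
One way to measure this generalization gap is a leave-one-generator-out evaluation. You train on fakes from every tool except one, then test on the held-out tool. The Python sketch below builds only the splits. The `generator` field and the tool names are hypothetical, and a real detector would be trained and scored on each split separately.

```python
from typing import Iterator


def leave_one_generator_out(
    samples: list[dict],
) -> Iterator[tuple[str, list[dict], list[dict]]]:
    """Yield (held_out_tool, train, test) splits.

    Each sample carries a hypothetical "generator" key naming the tool that
    produced it ("real" for authentic media). The train split never contains
    the held-out tool, mimicking an attacker who switches to a new generator.
    """
    fake_sources = sorted({s["generator"] for s in samples if s["generator"] != "real"})
    for held_out in fake_sources:
        train = [s for s in samples if s["generator"] != held_out]
        test = [s for s in samples if s["generator"] == held_out]
        yield held_out, train, test


samples = [
    {"id": 1, "generator": "real"},
    {"id": 2, "generator": "tool_a"},
    {"id": 3, "generator": "tool_b"},
    {"id": 4, "generator": "tool_b"},
]
for held_out, train, test in leave_one_generator_out(samples):
    print(held_out, [s["id"] for s in train], [s["id"] for s in test])
# tool_a [1, 3, 4] [2]
# tool_b [1, 2] [3, 4]
```

Accuracy on each held-out tool, rather than on a random split, is the closer proxy for how a detector will fare against a technique it has never seen.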

The fundamental challenge is what researchers call the "generalization problem." Detection systems trained on specific types of deepfakes fail when confronted with novel techniques or demographically different targets. Research by Stroebel et al. identified that deepfake detection techniques trained on traditional datasets showed strong bias toward lighter skin tones and greater success with older age groups. This bias creates vulnerabilities that sophisticated attackers can exploit through demographic targeting.
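
Demographic bias of this kind only becomes visible when accuracy is broken down by group instead of being reported as one number. The following is a minimal Python sketch. It assumes each evaluation record carries the true label, the detector's prediction, and a hypothetical demographic attribute such as `skin_tone`.

```python
from collections import defaultdict


def accuracy_by_group(records: list[dict], group_key: str) -> dict:
    """Return {group: accuracy} for detector predictions.

    Each record has "label" (True if the media is fake), "pred" (the
    detector's verdict) and a demographic attribute named by group_key.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for r in records:
        group = r[group_key]
        total[group] += 1
        correct[group] += int(r["pred"] == r["label"])
    return {g: correct[g] / total[g] for g in total}


def max_accuracy_gap(acc: dict) -> float:
    """Spread between the best- and worst-served groups."""
    return max(acc.values()) - min(acc.values())


records = [
    {"skin_tone": "lighter", "label": True, "pred": True},
    {"skin_tone": "lighter", "label": False, "pred": False},
    {"skin_tone": "darker", "label": True, "pred": False},
    {"skin_tone": "darker", "label": False, "pred": False},
]
acc = accuracy_by_group(records, "skin_tone")
print(acc)                    # {'lighter': 1.0, 'darker': 0.5}
print(max_accuracy_gap(acc))  # 0.5
```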

Reality Defender's analysis emphasizes the arms race dynamic: "Every time a more advanced algorithm emerges, detection models must quickly adapt, and the cycle repeats itself." This forces continuous investment in research and development that may divert resources from other critical security priorities without ever achieving reliable detection.

What Do Cybersecurity Leaders Actually Recommend?

Rob Greig, Chief Information Officer at Arup, whose organization suffered a $25 million deepfake fraud, emphasizes the psychological dimension: "Audio and visual cues are very important to us as humans, and these technologies are playing on that. We really do have to start questioning what we see." This perspective highlights how deepfakes exploit fundamental human trust mechanisms rather than technical vulnerabilities.

Leonard Rosenthol from Adobe emphasizes establishing digital content provenance: "We need more platforms where users access their content to display this information... it's essential to know: 'Can I trust this?'" This suggests moving beyond detection toward verification frameworks that authenticate content origin rather than trying to identify forgeries.
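
Provenance changes the question from "does this look fake?" to "can its origin be verified?". The sketch below illustrates the idea with Python's standard `hmac` module and a hypothetical shared key. This is a deliberate simplification. Real provenance standards such as C2PA use certificate-based public-key signatures in signed manifests, not a shared secret.

```python
import hashlib
import hmac

# Hypothetical publisher key. Real provenance systems use public-key
# signatures so that anyone can verify without holding the secret.
SIGNING_KEY = b"example-publisher-key"


def sign_content(content: bytes, key: bytes = SIGNING_KEY) -> str:
    """Return a tag that binds the key holder to these exact bytes."""
    return hmac.new(key, content, hashlib.sha256).hexdigest()


def verify_content(content: bytes, tag: str, key: bytes = SIGNING_KEY) -> bool:
    """Check origin and integrity instead of judging how realistic media looks."""
    return hmac.compare_digest(sign_content(content, key), tag)


original = b"Q3 all-hands recording"
tag = sign_content(original)
print(verify_content(original, tag))                            # True
print(verify_content(b"Q3 all-hands recording (edited)", tag))  # False
```

Any change to the bytes, however visually convincing, invalidates the tag, so verification does not depend on spotting artifacts.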

Security researchers increasingly advocate for "never trust, always verify" protocols that assume any audio-visual communication could be compromised. This represents a fundamental shift from detection-based security models to verification-based models. Rather than training employees to identify deepfakes, organizations should implement processes that verify identity through independent channels regardless of how authentic the communication appears.

The G7 Cyber Expert Group acknowledges the dual-use challenge: while AI can enhance cyber resilience through anomaly detection and fraud prevention, it simultaneously enables "hyper-personalized phishing messages and deepfakes, complicating detection efforts." This dual-use nature creates complex strategic challenges that require balancing innovation benefits against security risks.

Which Industries Face the Greatest Risk?

Financial services remains the primary target, but the threat landscape has expanded dramatically. Healthcare organizations face unique vulnerabilities through patient impersonation and medical record manipulation. Manufacturing and critical infrastructure have emerged as high-priority targets, with attackers using deepfakes to impersonate executives for supply chain manipulation and operational disruption.

In January 2024, a finance employee at multinational engineering firm Arup was manipulated into wiring $25.6 million to fraudsters after joining a video call with individuals who looked and sounded like the company's CFO and several colleagues. The employee initially suspected phishing when he received the email request, but felt reassured after the video call. He made 15 transfers to five separate bank accounts before realizing that every other participant on the video call was an AI-generated fake.

In one survey, 26% of executives reported that their organizations had experienced deepfake-powered fraud targeting their financial or accounting departments within a single year. This has driven fundamental changes in verification protocols, with many financial institutions implementing mandatory time delays for high-value transactions and multi-channel verification requirements.

The insurance sector has been particularly hard hit by synthetic voice fraud, which increased 475% in 2024. Energy companies and banks face coordinated campaigns where attackers, posing as internal IT support, harvest credentials that are later used to launch ransomware attacks on critical systems.

What Role Does OSINT Play in Deepfake Attacks?

Open-source intelligence gathering forms the foundation of sophisticated deepfake attacks. Attackers leverage publicly available information from social media, corporate websites, and professional platforms to build convincing impersonation profiles. They analyze video and audio files from online conferences, virtual meetings, and public appearances to train AI models on specific voices and appearances.

The Hong Kong police investigation into the Arup fraud revealed that perpetrators developed AI-generated deepfakes of the finance worker's CFO and colleagues by leveraging existing video and audio files from online conferences and virtual company meetings. This demonstrates how routine business communications create the raw material for future attacks.

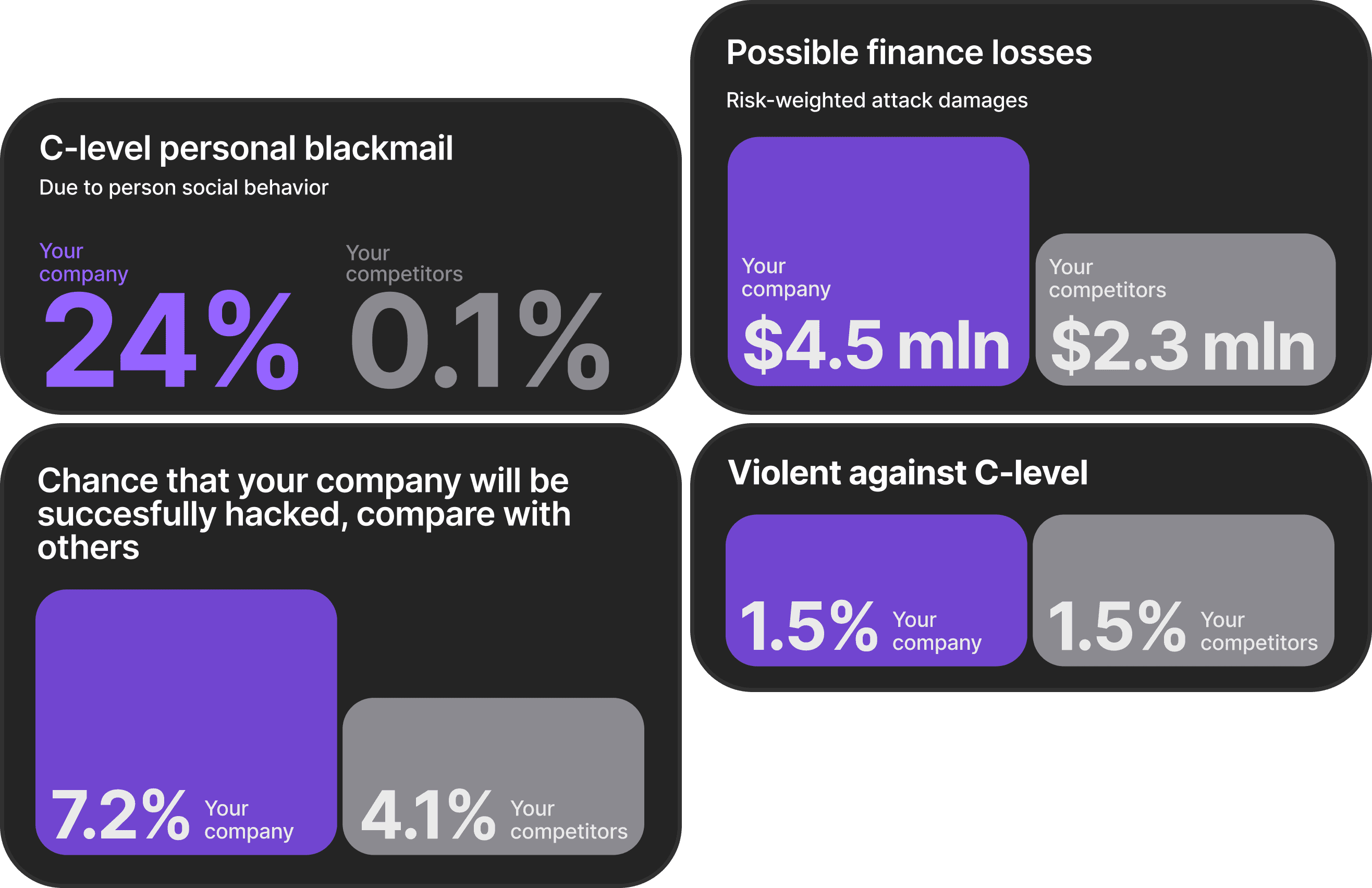

Understanding what information about employees is publicly available has become a critical security priority. Organizations need visibility into their digital footprint across six key categories: personal information, data leaks, online services, personal interests, social connections, and location data. Each exposed data point increases the attack surface and provides attackers with more material to create convincing impersonation attempts.

This is where proactive OSINT scanning becomes essential. Rather than waiting for attackers to exploit publicly available information, organizations can map their employees' digital presence and identify vulnerable data before criminals do. This approach shifts security from reactive detection to proactive risk reduction.

How Can Organizations Build Effective Defenses?

The evidence overwhelmingly supports multi-layered defense approaches that combine technological capabilities with robust verification protocols and comprehensive employee training. No single control is sufficient on its own for a challenge this complex.

First, organizations must implement out-of-band verification protocols for any unusual requests involving money transfers, credential sharing, or sensitive information access. This means confirming the request through a different communication channel from the one where it originated, using contact details the employee already trusts rather than any supplied in the request. If someone calls requesting a wire transfer, verify with a separate message to their known email address. If someone emails requesting credentials, verify with a phone call to a known number. This simple protocol defeats many deepfake attacks because, even when attackers orchestrate several channels of their own, they rarely control the independent contact routes that the employee initiates.
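
The key property of out-of-band verification is that the confirmation route comes from a trusted source and never from the request itself. The following is a minimal Python sketch. It assumes a hypothetical internal `DIRECTORY` of known phone numbers and uses fictional 555 numbers.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical directory maintained independently of incoming requests
# (for example, the HR system or internal phone book). 555 numbers are fictional.
DIRECTORY = {"cfo@example.com": "+1-555-0100"}


@dataclass
class Request:
    claimed_sender: str   # identity the requester claims to be
    channel: str          # channel the request arrived on, e.g. "video"
    callback_number: str  # number offered by the requester (attacker-controlled)


def trusted_callback_number(request: Request) -> Optional[str]:
    """Look up the claimed sender in the directory, ignoring the request's own number."""
    return DIRECTORY.get(request.claimed_sender)


def is_verified(request: Request, confirmed_on: str, number_dialled: str) -> bool:
    """Verified only via a different channel AND the directory number."""
    expected = trusted_callback_number(request)
    return (
        expected is not None
        and confirmed_on != request.channel
        and number_dialled == expected
    )


request = Request("cfo@example.com", "video", "+1-555-0199")
print(is_verified(request, "phone", request.callback_number))  # False
print(is_verified(request, "phone", "+1-555-0100"))            # True
```

If the employee calls back the number offered in the request, the check fails even though the channel changed. A deepfake caller relies on exactly that mistake.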

Second, organizations need visibility into what information about their employees is publicly accessible. Attackers use OSINT to research targets before launching personalized deepfake campaigns. By understanding what data is exposed, organizations can guide employees to reduce their attack surface and make impersonation attempts less convincing.

Third, realistic simulation training is crucial. Analysis of 386,000 malicious emails revealed that while only 0.7-4.7% were AI-generated, these attacks achieved significantly higher success rates than traditional phishing. AI-generated phishing emails have a 54% click-through rate compared to 12% for human-written content. Employees need exposure to the actual techniques they will face, not generic awareness presentations.

This is where platforms like Brightside AI provide critical value. Their approach combines OSINT-powered digital footprint scanning with realistic attack simulations across multiple vectors: email phishing, voice phishing, and deepfake scenarios. Rather than generic training, Brightside AI uses real OSINT data about employees to create personalized simulations that reflect the actual information attackers would use.

The platform's simulation capabilities cover all modern attack vectors. Email phishing simulations use pre-made templates organized by attack type and employee role, plus AI-generated spear phishing that leverages actual OSINT data for maximum realism. Voice phishing simulations deliver realistic AI-powered phone calls that train employees to recognize voice-based social engineering. Deepfake simulations prepare teams for sophisticated video and audio manipulation tactics.

What makes this approach effective is the personalization. Simulations tailored to each individual's actual online risks create more realistic training experiences that better prepare employees for real attacks. The platform also provides administrators with visibility into organizational security posture through vulnerability scoring, exposure metrics, and progress tracking, all while maintaining privacy by showing aggregate data rather than personal details.
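
Aggregate-only reporting can be sketched as per-group rates with small groups suppressed, so that a two-person team cannot be de-anonymized from its click rate. This is a hypothetical Python illustration, not Brightside AI's implementation. The minimum group size of 5 is an assumption.

```python
from collections import defaultdict

MIN_GROUP_SIZE = 5  # hypothetical threshold below which rates are withheld


def aggregate_click_rates(results: list, min_size: int = MIN_GROUP_SIZE) -> dict:
    """Summarize simulation outcomes per department without naming anyone.

    `results` is a list of (department, clicked) tuples. Departments with
    fewer than `min_size` participants are reported as None so that a small
    team's rate cannot be traced back to individuals.
    """
    clicks = defaultdict(int)
    counts = defaultdict(int)
    for dept, clicked in results:
        counts[dept] += 1
        clicks[dept] += int(clicked)
    return {
        dept: clicks[dept] / counts[dept] if counts[dept] >= min_size else None
        for dept in counts
    }


results = [("finance", True)] * 2 + [("finance", False)] * 3 + [("legal", True), ("legal", False)]
print(aggregate_click_rates(results))  # {'finance': 0.4, 'legal': None}
```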

What About Multi-Channel Attack Orchestration?

Attackers have evolved beyond single-vector approaches to create comprehensive multi-channel campaigns. A typical modern attack begins with initial email contact, progresses to a video call using platforms like Teams or Zoom where AI-generated avatars deliver urgent requests, and often includes follow-up communications designed to overcome hesitation.

Defending against multi-channel attacks requires multi-channel verification. Organizations should implement protocols that require confirmation through at least two independent channels for high-risk actions. For financial transactions above certain thresholds, this might mean email request, video call discussion, and callback verification to a known phone number before execution.
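
Threshold-based rules like this are simple to encode. In the Python sketch below, the dollar thresholds and channel names are hypothetical. A callback to a known number is required for large amounts because, as the Arup case showed, an email and a video call can both be controlled by the attacker.

```python
# Hypothetical policy: thresholds in USD, checked from largest to smallest.
POLICY = [
    (100_000, {"email", "video", "callback"}),
    (10_000, {"email", "callback"}),
    (0, {"email"}),
]


def required_channels(amount: float) -> set:
    """Return the confirmations needed for a transfer of this size."""
    for threshold, channels in POLICY:
        if amount >= threshold:
            return set(channels)
    return set()


def may_execute(amount: float, confirmations: list) -> bool:
    """True only if every required channel has confirmed the request."""
    return required_channels(amount) <= set(confirmations)


# An Arup-style request: email plus video call, but no callback.
print(may_execute(250_000, ["email", "video"]))              # False
print(may_execute(250_000, ["email", "video", "callback"]))  # True
```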

Time delays provide another effective defense. Many deepfake attacks exploit urgency to bypass normal verification procedures. Implementing mandatory waiting periods for high-value transactions, even just 15-30 minutes, allows employees to step back, consult with colleagues, and verify through alternative channels without the pressure of immediate action.
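
A mandatory hold can be enforced by computing the earliest release time when a request is logged. The following is a minimal Python sketch. It uses hypothetical amount tiers that fall within the 15-30 minute range mentioned above.

```python
from datetime import datetime, timedelta


def hold_period(amount: float) -> timedelta:
    """Hypothetical tiers within the 15-30 minute range."""
    if amount >= 100_000:
        return timedelta(minutes=30)
    if amount >= 10_000:
        return timedelta(minutes=15)
    return timedelta(0)


def can_release(requested_at: datetime, amount: float, now: datetime) -> bool:
    """Funds move only after the hold period has fully elapsed."""
    return now >= requested_at + hold_period(amount)


logged = datetime(2025, 10, 14, 9, 0)
print(can_release(logged, 250_000, logged + timedelta(minutes=20)))  # False
print(can_release(logged, 250_000, logged + timedelta(minutes=30)))  # True
```

Because the release time is fixed when the request is logged, a caller who pushes for faster action cannot shorten the hold.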

Role-based access controls limit the damage from successful attacks. If employees only have access to systems and information necessary for their specific roles, a successful impersonation attack yields less value to attackers. This follows the principle of least privilege, where each user has only the minimum access required to perform their job.
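
Least privilege is often expressed as an explicit role-to-permission map that denies anything not listed. The roles and permission names in this Python sketch are hypothetical.

```python
# Hypothetical role-to-permission map; anything not listed is denied.
ROLE_PERMISSIONS = {
    "accounts_payable": {"view_invoices", "initiate_payment"},
    "treasury": {"approve_payment"},
    "it_support": {"reset_password"},
}


def is_allowed(role: str, action: str) -> bool:
    """Default-deny check: unknown roles or actions get no access."""
    return action in ROLE_PERMISSIONS.get(role, set())


print(is_allowed("accounts_payable", "initiate_payment"))  # True
print(is_allowed("accounts_payable", "approve_payment"))   # False
print(is_allowed("contractor", "view_invoices"))           # False
```

With this map, an attacker who deceives someone in accounts payable can get a payment initiated but not approved, so the payment still needs a second role before it completes.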

Should Organizations Focus on Detection or Process?

A fundamental debate exists within the cybersecurity community. Detection advocates argue that improving AI capabilities for identifying synthetic media remains the primary defense strategy. Process advocates contend that detection systems consistently fail when confronted with novel generation techniques, making verification protocols that assume all media could be compromised the only viable approach.

The data supports the process advocates. Detection accuracy drops dramatically in real-world conditions, and the arms race between generation and detection consistently favors attackers. Rather than investing heavily in detection technologies that may become obsolete within months, organizations should build processes that function independently of detection capabilities.

This means moving from "detect and block" to "verify and confirm." Rather than training employees to identify deepfakes, train them to verify identity through independent channels regardless of how authentic the communication appears. Rather than relying on AI tools to flag suspicious content, implement workflows that require multi-channel verification for sensitive actions.

This approach acknowledges a hard truth: as generation technology continues improving, detection will become increasingly unreliable. Building security processes that function even when detection fails creates more resilient defenses.

How Can Employees Manage Their Personal Digital Footprint?

Individual digital hygiene plays a crucial role in organizational security. Every piece of publicly available information about employees provides raw material for attackers building impersonation profiles. Organizations that empower employees to manage their personal privacy reduce the entire organization's attack surface.

Employees need visibility into what information about them is publicly accessible across multiple categories: email addresses, phone numbers, home addresses, compromised passwords, online service accounts, social connections, and location data. Without this visibility, employees cannot make informed decisions about their digital presence.

Guided remediation makes privacy management actionable. When employees discover exposed data, they need step-by-step instructions for securing it, not just awareness that it exists. This includes practical guidance on adjusting privacy settings, using email aliases, removing information from data brokers, and understanding why specific actions matter.
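
Category-specific guidance can be modeled as a lookup from exposure category to concrete steps, merged into a single deduplicated plan. The categories and steps in this Python sketch are illustrative assumptions. They do not describe any particular product.

```python
# Hypothetical remediation guides keyed by exposure category.
REMEDIATION_GUIDES = {
    "email_address": ["Use an email alias for public sign-ups", "Enable MFA on the mailbox"],
    "phone_number": ["Remove the number from public profiles", "Set a carrier account PIN"],
    "home_address": ["Send opt-out requests to data brokers"],
    "compromised_password": ["Change the password everywhere it was reused", "Enable MFA on the mailbox"],
}
FALLBACK = ["Review this exposure with the security team"]


def remediation_plan(exposed_categories: list) -> list:
    """Merge the guides for each exposed category into one deduplicated checklist."""
    plan = []
    for category in exposed_categories:
        for step in REMEDIATION_GUIDES.get(category, FALLBACK):
            if step not in plan:  # steps shared by several categories appear once
                plan.append(step)
    return plan


print(remediation_plan(["email_address", "compromised_password"]))
# ['Use an email alias for public sign-ups', 'Enable MFA on the mailbox',
#  'Change the password everywhere it was reused']
```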

Brightside AI's approach addresses this through their employee portal, which provides personal risk level assessment, clear insights into exposed information, and guided data protection through an assistant that delivers category-specific remediation guides. This empowers employees to directly control their privacy while reducing corporate vulnerability.

The platform's OSINT-powered technology maps users' complete digital presence across six key categories, identifying vulnerable data before attackers exploit it. The system also calculates a personal safety score based on the number and types of exposed data points, relevance to selected safety goals, and attack surface combinations. This gives both employees and organizations clear metrics for measuring and improving security posture.
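
A score built from those three ingredients (the number and type of exposed data points, relevance to selected goals, and risky combinations) could look like the Python sketch below. The weights, the doubling rule for goal categories, and the combination bonus are all hypothetical. They show the general form of such a calculation, not Brightside AI's actual formula.

```python
# Hypothetical weights for the six categories: how much each helps an impersonator.
WEIGHTS = {
    "personal_information": 3,
    "data_leaks": 5,
    "online_services": 2,
    "personal_interests": 1,
    "social_connections": 3,
    "location_data": 4,
}
# Hypothetical extra risk when categories combine, e.g. contact details plus
# known colleagues make a convincing impersonation call easier to stage.
COMBINATION_BONUS = {frozenset({"personal_information", "social_connections"}): 5}


def safety_score(exposed_counts: dict, goal_categories: frozenset = frozenset()) -> int:
    """Return a 0-100 score where higher means safer.

    exposed_counts maps category -> number of exposed data points.
    Categories tied to the user's selected safety goals count double.
    """
    risk = 0
    for category, n in exposed_counts.items():
        weight = WEIGHTS.get(category, 1)
        if category in goal_categories:
            weight *= 2
        risk += weight * n
    present = {c for c, n in exposed_counts.items() if n > 0}
    for combo, bonus in COMBINATION_BONUS.items():
        if combo <= present:
            risk += bonus
    return max(0, 100 - risk)


print(safety_score({"personal_information": 2, "social_connections": 1}))  # 86
```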

Top 5 Deepfake Awareness Training Platforms

Modern deepfake phishing and AI-powered social engineering attacks are reshaping the cybersecurity landscape, making specialized awareness training platforms more important than ever. Below are the top five deepfake awareness training platforms for 2025: Brightside AI, Adaptive Security, Jericho Security, Hoxhunt, and Revel8. Each platform brings innovative features, realistic simulations, and industry-specific strengths to help organizations of all sizes keep their people cyber-aware and secure.

Brightside AI

Brightside AI stands out with a Swiss-built, privacy-first security platform designed to safeguard both organizations and individual employees. Leveraging advanced open-source intelligence (OSINT), Brightside maps digital footprints to identify personal and corporate vulnerabilities, reducing the risk of successful deepfake and phishing attacks. The training modules cover email, voice, and video threats with AI-driven simulations that are tailored to each employee's real exposure. Admin dashboards offer extensive vulnerability metrics, completion tracking, and privacy management tools. Gamified learning experiences (including mini-games and achievement badges) make security training memorable, while users benefit from personalized privacy action plans guided by Brighty's AI chatbot. Notably, Brightside's hybrid approach strengthens both personal privacy and corporate security, which is especially impactful for sectors handling sensitive personal data.[^1]

Pros:

Comprehensive OSINT mapping identifies real vulnerabilities across six data categories before attackers can exploit them

Dual-purpose platform protects both corporate infrastructure and individual employee privacy

Gamified training with leaderboards and mini-games drives engagement and retention

Privacy-first Swiss approach with GDPR compliance built in

Personalized AI coaching with Brighty chatbot provides ongoing privacy guidance

Cons:

Smaller market presence compared to established competitors

Limited third-party integrations compared to enterprise-focused platforms

May require cultural adaptation for organizations not prioritizing individual privacy management

Adaptive Security

Adaptive Security leads the field in multichannel deepfake and AI-powered phishing simulations. With extensive coverage across email, voice, video, and SMS, Adaptive Security runs simulations built on open-source data, mimicking exactly how attackers operate in the wild. Its conversational red teaming agents offer hyper-realistic, ever-changing scenarios matched to a company's evolving digital footprint. Adaptive Security distinguishes itself with seamless integration (100+ SaaS connectors), instant onboarding for employees, and board-ready reporting to demonstrate progress and ROI to executives. The platform is particularly strong for large, regulated organizations that need proof of compliance and smart risk assessment alongside agile, multi-vector training coverage.[^2][^3]

Pros:

Multi-channel simulations across email, voice, video, and SMS provide comprehensive coverage

Executive deepfakes and company OSINT create highly personalized, realistic training scenarios

100+ SaaS integrations enable instant provisioning and seamless deployment

Board-ready reporting and compliance dashboards simplify executive communication

AI content creator allows rapid customization of training modules

Cons:

Premium pricing may be prohibitive for smaller organizations

Limited email customization options for HR-branded communications

Complexity of features may require dedicated admin resources for optimization

Screenshot of Adaptive Security's platform highlighting AI-powered deepfake phishing simulations and role-based training features.

Jericho Security

Jericho Security delivers unified, AI-driven simulations and defense, bridging the gap between training, threat detection, and automated remediation. Its platform allows enterprise security teams to run dynamic, hyper-personalized phishing simulations across all channels: email, voice, video, and SMS. By using real attacker techniques, including open-source intelligence and behavioral profiling, Jericho Security provides realistic, adaptive training that responds to changes in both user behavior and the threat environment. The system ties simulation outcomes directly to role-based dashboards and "human firewall" scores, so organizations always know where their risk is highest. Well suited to regulated, compliance-heavy industries, Jericho's fully adaptive approach stands out for linking learning, simulation, and detection in a smart loop, building organizational resilience from the inside out.

Pros:

Generative AI creates hyper-personalized spear phishing simulations instead of templates

Unified platform integrates training, threat detection, and autonomous remediation

24-hour custom content turnaround for urgent or time-sensitive threats

Native multilingual support with one-click deployment for global organizations

Cons:

Newer market entrant with less established track record than legacy vendors

May have steeper learning curve for teams accustomed to traditional template-based tools

Premium feature set may exceed requirements for smaller organizations

Hoxhunt

Hoxhunt is recognized for its highly engaging, gamified approach, transforming phishing and deepfake detection into a continuous learning challenge. Employees encounter realistic deepfake simulations that recreate multi-step attacks, such as CEO voice impersonation during a simulated Microsoft Teams video call. Hoxhunt's platform is unique for its consent-first, responsible simulations: every scenario is pre-scripted, brief, and accompanied by instant micro-training if a risky action is taken, keeping learning stress-free and effective. The system includes detailed leaderboards, achievements, and progress metrics designed to keep large, distributed workforces motivated and alert. Real-time campaign analytics give administrators granular visibility into user behavior, with automated follow-up training for those at risk. Hoxhunt is the platform of choice for organizations wanting adaptive, ethical, and measurable behavioral change across all levels.

Pros:

Real-time, user-level feedback after every action builds lasting security reflexes

Micro-training delivered immediately after simulations creates strong retention

Gamification with leaderboards and achievements drives sustained engagement

Personalized training adapts to role, language, performance, and risk profile

Reduces admin burden through automation and adaptive content delivery

Cons:

Micro-training lacks deep-dive options for users seeking comprehensive education

No visibility into pass/fail rates across user populations within simulations

May require dedicated resources for custom integration workflows in complex environments

Gamified cybersecurity awareness training dashboard from Hoxhunt showing user progress, leaderboard status, streaks, and achievements.

Revel8

Revel8 leverages cutting-edge AI to train employees against the full spectrum of modern cyber threats, with a special focus on deepfakes, voice cloning, and multi-channel attacks. The platform uniquely combines OSINT-driven personalization (adapting simulated attacks based on public company data) with real-time human threat intelligence for up-to-date, relevant scenario design. Gamification is deeply embedded, featuring real-time leaderboards, interactive modules, and points to encourage ongoing participation and learning. Revel8's dashboard includes compliance benchmarking and a proprietary "Human Firewall Index" that quantifies organizational awareness and tracks progress against standards like NIS2 and ISO 27001. This makes it ideal for compliance-driven organizations seeking granular risk insights and measurable improvement.

Pros:

OSINT-driven personalization creates realistic, targeted attack scenarios based on actual company data

Real-time threat intelligence keeps training content current with emerging attack vectors

Human Firewall Index provides quantifiable security culture metrics

GenAI simulation playlists deliver adaptive, multi-channel training experiences

Cons:

Limited market visibility and customer review availability compared to established platforms

German market focus may limit language and regional customization for global enterprises

Smaller integration ecosystem compared to enterprise-focused competitors

| Platform | Deepfake Simulation Channels | Gamification | Key Differentiator | Best For |
|---|---|---|---|---|
| Brightside AI | Email, Voice, Video | Interactive mini-games, leaderboards | OSINT mapping + integrated privacy management | Privacy-focused organizations, SMB to Enterprise |
| Adaptive Security | Email, Voice, Video, SMS | Yes | Multi-channel AI emulation + compliance reporting | Mid-market to Enterprise, regulated sectors |
| Jericho Security | Email, Voice, Video, SMS | Human risk scoring | Unified adaptive platform for simulation, detection, remediation | Enterprise, highly regulated industries |
| Hoxhunt | Email, Voice, Video | Full platform gamification, leaderboards, badges | Gamified adaptive training + consent-first realism | Mid-market to Enterprise, behavioral change focus |
| Revel8 | Email, Voice, Video | Leaderboards, interactive modules, points | Personalized OSINT risk + live threat intel | Compliance-driven SMB to Enterprise |


What Regulatory Changes Are Coming?

The regulatory landscape remains fragmented and inconsistent. In the United States, a proposed ten-year federal moratorium on state and local AI regulation has sparked intense controversy. Proponents argue it would prevent a "patchwork of inconsistent laws" and foster uniform federal oversight, while critics contend it would bar states and local jurisdictions from passing laws that govern AI models precisely when deepfake incidents are surging by 245% year-over-year.

The UN's International Telecommunication Union has called for businesses to employ "sophisticated technologies to identify and eliminate misinformation and deepfake content," but implementation remains inconsistent globally. Cross-border enforcement presents particular challenges when creation, distribution, and impact occur across different jurisdictions.

In the UK, the Financial Conduct Authority has issued alerts linking deepfake scams to fraud and emphasizing the need for layered verification. This regulatory guidance recognizes that detection alone is insufficient and that procedural controls form the foundation of effective defense.

Organizations should not wait for regulatory clarity before implementing defenses. The threat is current, the losses are substantial, and the trajectory points toward escalation. Proactive organizations that implement comprehensive defenses now will be better positioned regardless of how regulations evolve.

What Does the Future Hold?

The trend lines all point in the same direction. Deepfake technology will continue improving, attacks will become more sophisticated, and detection will struggle to keep pace. Organizations that successfully navigate this challenge will be those that treat deepfake defense as an ongoing, adaptive process rather than a one-time implementation project.

Multimodal detection systems that combine voice, visual, and behavioral analysis represent the most promising near-term technological direction. These systems detect deepfakes by identifying inconsistencies across multiple biometric channels that are difficult to synchronize in synthetic media. However, even these advanced approaches face the fundamental challenge of the detection-generation arms race.
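
Combining modalities is often done with late fusion: each channel produces its own "fake" probability, and the scores are then merged. The Python sketch below adds a hypothetical cross-channel mismatch term, such as lip movement drifting out of sync with audio, that pushes the fused score toward "fake". The weights and the mismatch formula are assumptions for illustration, not a published method.

```python
def fuse_scores(
    modality_scores: dict,
    weights: dict,
    mismatch: float,
    mismatch_weight: float = 0.5,
) -> float:
    """Late fusion of per-modality 'fake' probabilities (all in 0-1).

    modality_scores: e.g. {"voice": 0.2, "face": 0.3, "behavior": 0.1}
    weights: relative trust in each modality (hypothetical values).
    mismatch: 0-1 measure of inconsistency between channels.
    """
    total_weight = sum(weights[m] for m in modality_scores)
    base = sum(weights[m] * s for m, s in modality_scores.items()) / total_weight
    # Cross-channel inconsistency moves the score part of the way toward 1.0.
    return min(1.0, base + mismatch_weight * mismatch * (1 - base))


scores = {"voice": 0.2, "face": 0.2}
weights = {"voice": 1.0, "face": 1.0}
print(round(fuse_scores(scores, weights, mismatch=0.0), 2))  # 0.2
print(round(fuse_scores(scores, weights, mismatch=1.0), 2))  # 0.6
```

Individually, each channel here looks fairly authentic. The inconsistency between them is what raises the alarm, and that is the signal the text describes as hard for synthetic media to synchronize.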

Blockchain authentication frameworks for digital content provenance offer potential long-term solutions, though current implementations face scalability and user adoption challenges. Research into lightweight, user-friendly blockchain integration could yield practical authentication systems, but this remains years away from mainstream deployment.
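
Blockchain-based provenance gets its tamper evidence from hash chaining: each record commits to the one before it, so editing any past record breaks every later link. The Python sketch below shows only that property, in a single in-memory list built with the standard `hashlib` module. It has no distribution or consensus, which is where the scalability and adoption challenges arise.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def add_record(chain: list, content: bytes, publisher: str) -> dict:
    """Append a provenance record that commits to the previous record."""
    body = {
        "publisher": publisher,
        "content_hash": hashlib.sha256(content).hexdigest(),
        "prev_hash": chain[-1]["record_hash"] if chain else GENESIS,
    }
    record = {**body, "record_hash": _digest(body)}
    chain.append(record)
    return record


def chain_is_intact(chain: list) -> bool:
    """Recompute every link; any edited record breaks the chain."""
    prev = GENESIS
    for rec in chain:
        body = {k: rec[k] for k in ("publisher", "content_hash", "prev_hash")}
        if rec["prev_hash"] != prev or rec["record_hash"] != _digest(body):
            return False
        prev = rec["record_hash"]
    return True


def is_registered(chain: list, content: bytes) -> bool:
    """True if these exact bytes were recorded by some publisher."""
    digest = hashlib.sha256(content).hexdigest()
    return any(r["content_hash"] == digest for r in chain)


chain = []
add_record(chain, b"CEO statement video", "Example Corp")
add_record(chain, b"Q3 earnings call audio", "Example Corp")
print(chain_is_intact(chain))                        # True
print(is_registered(chain, b"CEO statement video"))  # True
chain[0]["publisher"] = "Someone else"               # tamper with history
print(chain_is_intact(chain))                        # False
```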

The most immediate practical defenses combine OSINT-driven risk reduction with realistic simulation training and robust verification protocols. Organizations need visibility into their attack surface through comprehensive digital footprint scanning. They need employees prepared for actual attack techniques through personalized simulations that reflect real-world tactics. And they need processes that verify identity and authorization through multiple independent channels regardless of how authentic any single communication appears.

What Actions Should Organizations Take Now?

The deepfake threat in 2025 demands immediate, comprehensive response strategies. With 179 incidents in Q1 2025 alone surpassing all of 2024, organizations cannot treat deepfakes as an emerging threat but must recognize them as a current, active danger.

Start with visibility. Conduct comprehensive digital footprint assessments to understand what information about key personnel is publicly accessible. This OSINT-driven approach identifies vulnerabilities before attackers exploit them.

Implement verification protocols. Establish clear procedures requiring out-of-band verification for high-risk actions like wire transfers, credential sharing, and system access changes. Make these protocols mandatory, not optional.

Deploy realistic training. Move beyond generic awareness presentations to simulation-based training that exposes employees to actual attack techniques they will face. Personalized simulations that leverage real OSINT data create more effective learning experiences.

Establish multi-channel verification requirements. For sensitive actions, require confirmation through at least two independent communication channels, at least one of which the employee initiates using known contact details. This simple protocol defeats many deepfake attacks because criminals rarely control every channel an organization can use to reach the real person.

Build time delays into high-risk processes. Mandatory waiting periods allow employees to verify unusual requests without the pressure of immediate action. Even a 15-30 minute delay removes the urgency that many deepfake attacks depend on.

The future of deepfake defense lies not in choosing between human training and technological solutions, but in developing integrated approaches that leverage the strengths of both while acknowledging their limitations. Organizations that successfully navigate this challenge will be those that implement layered defenses combining OSINT-driven risk reduction, realistic simulation training, robust verification protocols, and continuous adaptation to evolving threats.

The question is not whether your organization will face deepfake attacks, but whether you will be prepared when they arrive.