Spear Phishing Training: AI Defense Strategies 2025

Written by

Brightside Team

Published on

Oct 23, 2025

Last month, a finance director at a mid-sized tech company received an email from her CEO asking for an urgent wire transfer. The message included details about a confidential acquisition, referenced a conversation from their last board meeting, and even matched the CEO's writing style. She clicked the link and entered her credentials. Within hours, attackers had accessed sensitive financial systems. The email wasn't written by a careless criminal making obvious mistakes. It was crafted by artificial intelligence.

This isn't science fiction. It's happening right now, and while still relatively rare, it's evolving rapidly.

Let's talk about what spear phishing actually means. Unlike regular phishing (those obvious "Your account has been suspended!" emails we all ignore), spear phishing targets specific people with personalized messages. Think of regular phishing as throwing a net into the ocean hoping to catch something. Spear phishing is more like fishing with a custom lure designed specifically for the fish you want to catch.

Now add AI to the mix. Artificial intelligence can:

Research targets using publicly available information

Write convincing emails in any language

Remove grammatical errors that used to give away phishing attempts

Create thousands of unique versions in minutes

It's like giving attackers a team of expert researchers and writers who never sleep.

Here's a term you'll see throughout this article: OSINT, which stands for Open Source Intelligence. This means gathering information from public sources like social media, company websites, news articles, and data breaches. Attackers use OSINT to learn everything about you before they strike. Your LinkedIn profile, your Twitter posts, that conference you mentioned on Instagram? All of it becomes ammunition.

The numbers paint a concerning picture. IBM's 2024 Cost of a Data Breach Report found that companies experiencing data breaches (from all causes, not just phishing) lose an average of $4.88 million, with phishing-specific breaches costing around $4.8 million. But here's what matters most: we can do something about it through a combination of technical defenses and human awareness.

What Makes AI-Generated Phishing More Dangerous Than Traditional Attacks?

Remember when you could spot phishing emails by their terrible grammar and weird spacing? Those days are fading for sophisticated attacks.

AI removes many traditional warning signs. No more "Dear Valued Customer" messages filled with spelling mistakes. Instead, attackers feed information about you into AI systems that generate messages that sound exactly like your coworkers, your boss, or your vendors. These emails reference real projects you're working on, use the same phrases your team uses, and arrive at times when you'd expect them.

Here's what makes this particularly dangerous. AI can create personalized attacks at massive scale. A human attacker might send 50 carefully crafted emails per day. AI can send 50,000. Each one unique. Each one targeting a specific person with information gathered about them.

The attacks go beyond email now. AI voice cloning technology has advanced significantly, though creating truly convincing voice clones still requires more resources than is often portrayed. Some services claim to clone voices in seconds, and 10-30 seconds of clear audio is typically enough for a basic-quality clone, but professional-grade clones that can fool victims in high-stakes situations need 3+ minutes of audio data. Still, this presents a real threat: imagine receiving a voicemail from your CEO asking you to call back about an urgent matter. You call, someone who sounds like your CEO answers, and they walk you through "fixing" a problem that requires you to disable security settings.

Some attackers are even using deepfake video for high-value targets, though these attacks require substantial technical resources and preparation. The often-cited case of a finance team in Hong Kong losing $25 million after a video call with what appeared to be their CFO demonstrates both the potential impact and the sophistication required—attackers created deepfakes of multiple executives and orchestrated an elaborate, resource-intensive operation.

How Effective Are AI-Powered Spear Phishing Attacks Compared to Human-Crafted Ones?

Research data from controlled experiments reveals interesting patterns, though it's important to note these come from academic studies with relatively small sample sizes rather than large-scale organizational deployments:

Generic phishing emails get clicked by approximately 12% to 18% of recipients in experimental settings

Expert human-crafted spear phishing emails achieve around 54% click rates in controlled studies

AI-generated spear phishing reaches similar 54% success rates in the same experimental conditions

AI combined with human oversight climbs to 56% in these controlled environments

These figures come from academic research involving participants ranging from 101 to several hundred individuals in controlled settings, not from broad organizational field studies. Real-world organizational data shows considerable variation depending on industry, training levels, and existing security posture.

Here's the trend that should interest security leaders: AI capabilities are improving. Research from Hoxhunt, based on their platform's experiments with approximately 70,000 simulations, shows AI-generated phishing has progressed from being 31% less effective than human-crafted attacks in 2023 to becoming 24% more effective by March 2025. However, the methodology evolved significantly over this period—from simple ChatGPT prompting in 2023 to sophisticated AI agents in 2025—making direct comparisons more complex.

Following ChatGPT's release in late 2022, security firm SlashNext reported a 1,265% increase in malicious phishing messages detected between Q4 2022 and Q3 2023, a dramatic surge in the roughly nine-month period following the tool's public availability. This figure measures the increase in total phishing volume rather than specifically AI-written attacks, but it highlights how accessible AI tools have accelerated threat actor capabilities.

What's the Real Scale of the AI Phishing Threat?

Here's the nuance that matters: while AI-powered phishing represents a genuine and growing threat, we need to maintain perspective on its current prevalence. In 2024, definitively AI-written phishing emails represented only 0.7% to 4.7% of phishing messages that successfully bypassed email filters. The vast majority of successful phishing attacks still use traditional techniques.

This doesn't mean we should dismiss the threat. Rather, we should understand its current trajectory:

Why AI phishing remains relatively rare:

Basic phishing already works effectively, so attackers lack incentive to add complexity

AI-powered attacks require more technical sophistication to deploy

Many phishing operations succeed with volume rather than personalization

Why AI phishing is growing:

AI tools are becoming more accessible and user-friendly

Attackers are increasingly targeting high-value individuals where personalization justifies effort

The sophistication gap between AI and human-crafted attacks has closed

Percentage growth rates are significant even if absolute numbers remain small

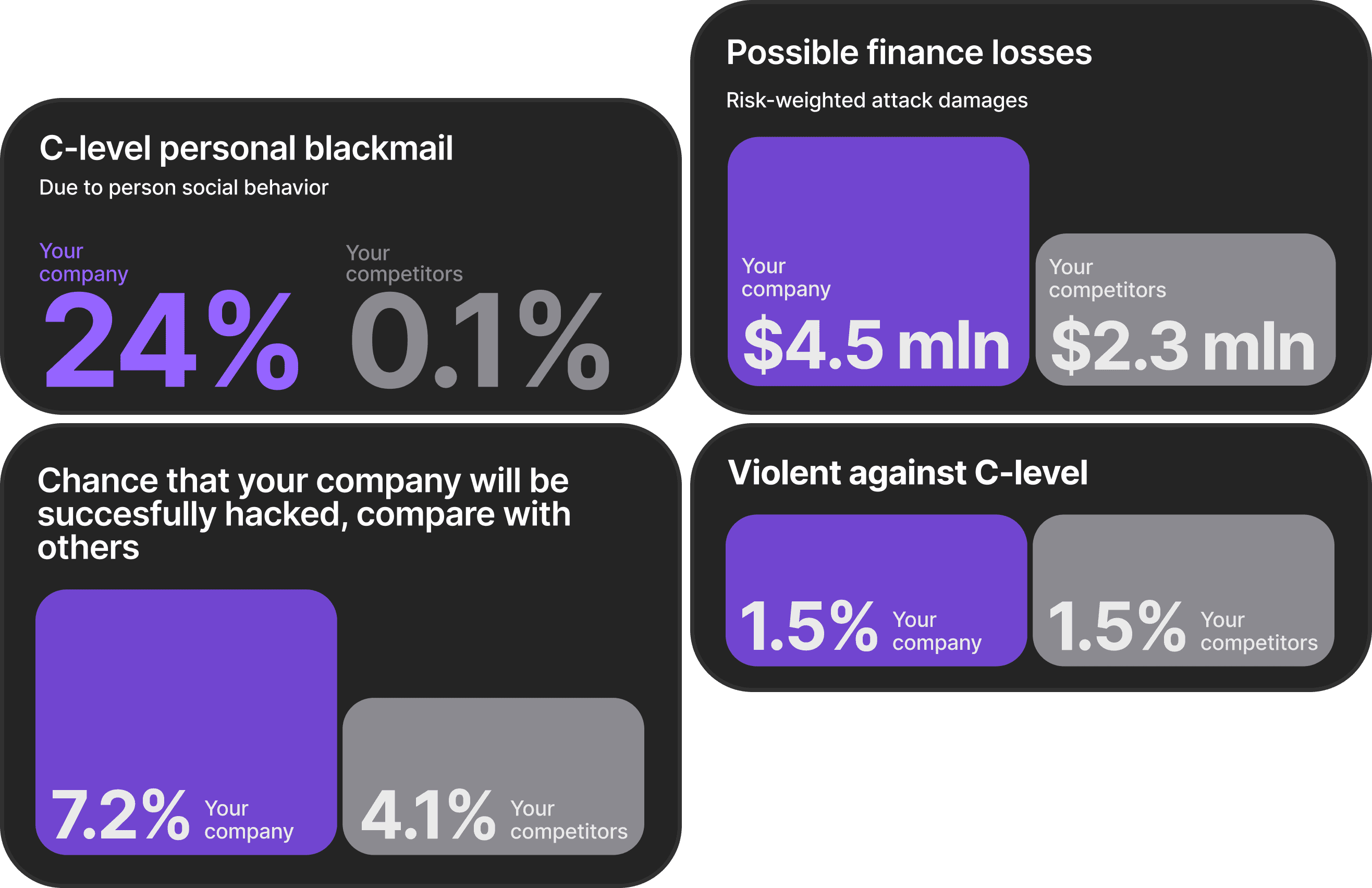

Organizations face a strategic decision: invest now in defenses against an emerging threat that currently represents under 5% of attacks, or wait until it becomes more prevalent and potentially find themselves unprepared. The answer likely depends on your organization's risk profile, with finance, legal, and executive teams facing disproportionate risk from sophisticated, personalized attacks.

What Data Are Attackers Using to Personalize Spear Phishing Campaigns?

Attackers build detailed profiles of their targets using information most of us don't think twice about sharing.

LinkedIn profiles are a goldmine:

Job title and reporting structure

Current projects and skills

Professional connections

Posts about work trips or conferences

Attackers use all of it. They know who your boss is, who you work with, and what you're working on.

Social media posts reveal:

Personal interests and family information

Vacation plans and daily routines

Recent activities that build rapport

Times when you're busy and distracted

An attacker might reference your recent vacation to build rapport, or time their attack for when they know you're busy and distracted.

Company websites and press releases provide context about business deals, new hires, and strategic initiatives. An attacker can reference a real project mentioned in a press release to make their fake email more convincing.

Data breaches expose your email address, phone number, passwords (even old ones), and sometimes much more. Attackers use this to make emails feel more urgent or to actually break into your accounts.

Professional databases and business directories list contact information, organizational charts, and role responsibilities. Attackers don't have to guess who handles invoices or who approves wire transfers. They can look it up.

The concerning part? Gathering this information is completely legal and takes minutes. Attackers use the same OSINT techniques that journalists, investigators, and recruiters use every day.

How Has Spear Phishing Evolved in 2025?

Attackers aren't limiting themselves to email anymore. A modern attack might:

Start with an email

Follow up with a text message

Include a phone call

Use QR codes to bypass email filters

This multi-channel approach increases the chances that you'll respond through at least one method.

Voice cloning technology continues advancing, though with caveats about quality and effort required. Attackers can take audio from conference calls, earnings announcements, or videos posted on company websites to train AI voice models. While basic voice cloning can work with 10-30 seconds of audio, truly convincing clones that can survive scrutiny in high-stakes social engineering typically require more substantial audio samples.

Polymorphic campaigns change constantly to avoid detection. Traditional email filters look for patterns. If they see the same malicious email sent to multiple people, they can block it. AI-generated attacks create thousands of unique versions, so filters can't spot the pattern as easily.
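
To make the pattern-matching problem concrete, here is a minimal Python sketch of an exact-match filter. The messages, the recipient name, and the `fingerprint` and `is_blocked` helpers are hypothetical and only illustrate the idea. Real email filters use far more signals than a single hash.

```python
import hashlib

def fingerprint(message: str) -> str:
    """Exact-match fingerprint: SHA-256 of the lowercased, whitespace-normalized body."""
    normalized = " ".join(message.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

# Hypothetical blocklist built from one previously reported phishing email.
known_bad = "Hi Dana, please review the attached invoice today."
blocklist = {fingerprint(known_bad)}

def is_blocked(message: str) -> bool:
    """Return True only if this exact message (after normalization) was seen before."""
    return fingerprint(message) in blocklist

# An identical resend is caught; a reworded variant with the same intent is not.
resend = "Hi Dana,  please review the attached invoice today."
variant = "Hello Dana, could you take a look at the attached invoice this afternoon?"

print(is_blocked(resend))   # True
print(is_blocked(variant))  # False
```

Every reworded variant produces a different fingerprint, which is why defenses that rely only on previously seen messages struggle against campaigns that generate unique text per recipient.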

Business email compromise (BEC) attacks have gotten more sophisticated. These attacks target financial transactions by impersonating executives or vendors. With AI, attackers can study email threads, learn communication styles, and insert themselves into ongoing conversations about real invoices or wire transfers.

Why Is Traditional Security Awareness Training No Longer Sufficient?

Many companies conduct annual or quarterly security training. Employees sit through a presentation, maybe take a quiz, and that's it until the next cycle. Research suggests this approach faces significant challenges with modern threats.

Industry data shows wide variation in training effectiveness, with baseline failure rates ranging from as low as 3.6% in some sectors to over 30% in organizations without consistent training. What's often cited as a "20%" failure rate actually represents specific pre-training baselines from particular platforms rather than a universal standard.

Reporting rates are equally inconsistent. Some platforms report baseline reporting rates as low as 7% before implementing modern training, while others show average industry reporting rates of 18.65%, with financial services achieving 32.35%. These variations reflect differences in organizational culture, existing security awareness, and training program maturity.

Here's the fundamental challenge: traditional training often teaches people to look for obvious red flags—misspellings, urgent language, generic greetings, suspicious links. While these indicators still appear in many attacks, AI-generated phishing removes these obvious tells, making detection harder.

Static training also struggles to keep pace with constantly evolving threats. By the time annual training rolls around again, attackers have developed new techniques.

The one-size-fits-all approach ignores that different employees face different risks:

Finance teams need to recognize invoice fraud

HR teams need to watch for fake job applicants harvesting data

Executives need to worry about impersonation and BEC

Generic training doesn't address these specific threats effectively.

What Is Adaptive, AI-Powered Security Training?

Modern training platforms take a different approach. Instead of annual presentations, employees receive short, frequent training moments. Think five-minute lessons several times a month instead of a two-hour session once a year.

Advanced simulations are personalized based on actual risk profiles. Modern platforms can scan publicly available information about employees' digital footprints to understand what data about them is accessible to attackers. Then they create phishing simulations using similar information, mirroring how real attackers operate.

For example, if an employee's LinkedIn profile mentions they just started managing a project, the training system might send them a simulated phishing email pretending to be from a project vendor. It's realistic because it's based on real, publicly available information about them.

These platforms track how each person responds and adjust difficulty over time. Someone who consistently spots phishing attempts gets harder simulations. Someone who struggles gets more foundational training and additional support. This adaptive approach meets people where they are.
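
As a rough illustration of how difficulty might adapt to each person, here is a minimal Python sketch. The five-level scale, the three-report streak rule, and the names `LearnerState` and `update` are assumptions made for this example. They do not describe how any particular platform works.

```python
from dataclasses import dataclass

MIN_LEVEL, MAX_LEVEL = 1, 5   # hypothetical difficulty scale
STREAK_TO_ADVANCE = 3         # hypothetical rule: three reports in a row

@dataclass
class LearnerState:
    level: int = MIN_LEVEL  # current simulation difficulty (1 = easiest)
    streak: int = 0         # consecutive simulations correctly reported

def update(state: LearnerState, reported: bool, clicked: bool) -> LearnerState:
    """Return the learner's next state after one simulation."""
    if clicked:
        # A click drops the learner back toward foundational material.
        return LearnerState(level=max(MIN_LEVEL, state.level - 1), streak=0)
    if reported:
        streak = state.streak + 1
        if streak >= STREAK_TO_ADVANCE:
            # Consistent reporting earns a harder simulation.
            return LearnerState(level=min(MAX_LEVEL, state.level + 1), streak=0)
        return LearnerState(level=state.level, streak=streak)
    # Ignored the email: no penalty, but the streak resets.
    return LearnerState(level=state.level, streak=0)

state = LearnerState()
for _ in range(3):
    state = update(state, reported=True, clicked=False)
print(state.level)  # 2
```

Keeping the rule this simple makes the behavior easy to explain to employees. A production system would likely weigh more signals, such as time to report.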

Research from organizations using modern adaptive training approaches shows promising results, though it's important to note these often come from vendor-reported data rather than independent studies:

Failure rates reportedly drop to 3.2% to 4.93% (compared to baseline rates of 17.8% to 33%)

Reporting rates can increase to 60% after a year of consistent training (compared to baseline rates of 7% to 18.65%)

Organizations report that 64% of employees successfully identified and reported real threats after sustained adaptive training

When you consider that data breaches cost an average of $4.88 million (across all breach types) and phishing-specific breaches cost around $4.8 million, even modest reductions in breach likelihood represent substantial savings.
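
The savings argument is simple expected-value arithmetic, sketched below in Python. Only the $4.88 million figure comes from the report cited above. The breach probabilities are hypothetical placeholders, since real likelihoods vary widely by organization.

```python
AVERAGE_BREACH_COST = 4_880_000  # USD, the average breach cost cited above

def expected_annual_loss(breach_probability: float,
                         cost: float = AVERAGE_BREACH_COST) -> float:
    """Expected loss = probability of a breach in a year x cost of a breach."""
    if not 0.0 <= breach_probability <= 1.0:
        raise ValueError("breach_probability must be between 0 and 1")
    return breach_probability * cost

# Hypothetical probabilities for illustration only, not measured values.
before = expected_annual_loss(0.05)  # assumed 5% annual breach likelihood
after = expected_annual_loss(0.04)   # assumed 4% after improved training

print(f"Expected annual loss before: ${before:,.0f}")
print(f"Expected annual loss after:  ${after:,.0f}")
print(f"Difference:                  ${before - after:,.0f}")
```

Under these assumed numbers, a one-percentage-point reduction in breach likelihood corresponds to roughly $48,800 in expected annual loss avoided.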

How Does OSINT Play a Role in Modern Phishing Simulations?

This is where modern training approaches differ significantly. The most effective defense against attackers who use OSINT is training that also uses OSINT.

Traditional simulations might send generic messages: "Your account will be suspended unless you click here." Employees who've done any security training spot these immediately. They pass the test and learn nothing meaningful.

Modern OSINT-powered simulations work differently. They scan employees' public digital footprints first:

Find their LinkedIn profiles

Check their social media presence

Review publicly accessible professional information

Identify any data breaches containing their information

They build a profile of what attackers could discover, then create simulations based on that real information.
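
One way to picture the resulting profile is as a small record with a rough exposure score, sketched here in Python. The field names, the weights, and the cap on breach records are hypothetical choices for illustration. They are not taken from any vendor's scoring method.

```python
from dataclasses import dataclass

# Hypothetical weights, chosen only to illustrate the idea.
WEIGHTS = {
    "linkedin_profile": 2,
    "social_media": 2,
    "professional_listing": 1,
    "breach_record": 4,
}
MAX_COUNTED_BREACHES = 3  # hypothetical cap so one factor cannot dominate

@dataclass
class Exposure:
    linkedin_profile: bool = False
    social_media: bool = False
    professional_listing: bool = False
    breach_records: int = 0  # known breaches containing this person's data

def exposure_score(e: Exposure) -> int:
    """Sum the weights of every exposure category found for one person."""
    score = 0
    if e.linkedin_profile:
        score += WEIGHTS["linkedin_profile"]
    if e.social_media:
        score += WEIGHTS["social_media"]
    if e.professional_listing:
        score += WEIGHTS["professional_listing"]
    score += WEIGHTS["breach_record"] * min(e.breach_records, MAX_COUNTED_BREACHES)
    return score

print(exposure_score(Exposure(linkedin_profile=True, breach_records=2)))  # 10
```

A score like this is only useful for ranking who might need attention first. It says nothing about whether a given person would actually fall for an attack.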

For example, if the scan finds an employee recently posted about attending a cybersecurity conference, the simulation might be a follow-up email from someone they "met" there, asking them to review a document. That's realistic. That's something that could actually deceive them. When they encounter it (whether they fall for it or successfully identify it), they learn something valuable about their actual vulnerability.

OSINT-powered training shows employees their actual attack surface. Many people don't realize how much information about them is publicly available. Seeing a summary of their exposed data—email addresses in breaches, social media accounts, professional information—often serves as a powerful wake-up call.

The Critical Role of Technical Controls Alongside Training

While training is essential, it represents only one layer of a comprehensive phishing defense strategy. Technical controls provide foundational protection that catches many attacks before they reach employees.

Email Authentication Protocols

Organizations should implement the three pillars of email authentication:

SPF (Sender Policy Framework): Specifies which IP addresses and servers are authorized to send email on your domain's behalf

DKIM (DomainKeys Identified Mail): Uses digital signatures to verify email integrity and authenticity

DMARC (Domain-based Message Authentication, Reporting and Conformance): Builds on SPF and DKIM by checking that the authenticated domain aligns with the visible From address, and instructs receiving servers how to handle authentication failures

Implementing all three protocols together, with DMARC set to an enforcing policy, makes it much harder for attackers to spoof your exact domain and significantly reduces the likelihood that phishing emails impersonating your organization will reach targets. The more organizations implement DMARC, the more effective it becomes across the email ecosystem.
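
A DMARC policy is published as a DNS TXT record at `_dmarc.<your domain>`, made up of semicolon-separated tags such as `v`, `p`, and `rua`. The Python sketch below parses such a record and checks whether its policy actually enforces anything. The example record, the `example.com` address, and the helper names are placeholders, and the parser deliberately ignores the finer points of the specification.

```python
def parse_dmarc(record: str) -> dict[str, str]:
    """Split a DMARC TXT record into a tag -> value mapping."""
    tags: dict[str, str] = {}
    for part in record.split(";"):
        part = part.strip()
        if not part:
            continue
        key, sep, value = part.partition("=")
        if sep:  # skip malformed fragments that have no '='
            tags[key.strip().lower()] = value.strip()
    return tags

def is_enforcing(record: str) -> bool:
    """True if the policy tells receivers to quarantine or reject failures."""
    tags = parse_dmarc(record)
    return tags.get("v") == "DMARC1" and tags.get("p", "").lower() in {"quarantine", "reject"}

# Placeholder record for illustration.
example = "v=DMARC1; p=reject; rua=mailto:dmarc-reports@example.com"

print(parse_dmarc(example)["p"])         # reject
print(is_enforcing(example))             # True
print(is_enforcing("v=DMARC1; p=none"))  # False
```

A policy of `p=none` only asks for reports. It is a common starting point while monitoring legitimate mail flows, but it does not instruct receivers to block spoofed messages.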

Advanced Email Filtering

Modern email security solutions go beyond simple spam filters. They employ:

Machine learning-based threat detection that identifies suspicious patterns

Sandboxing that analyzes attachments and links in isolated environments

Real-time threat intelligence that blocks known malicious domains and sender addresses

Content analysis that flags emails with urgent language, suspicious requests, or anomalous sender behavior

These systems catch many phishing attempts before employees ever see them, reducing the burden on human vigilance.
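
The content-analysis idea can be sketched with a few simple rules in Python. The keyword lists, the flag names, and the `flag_email` helper are assumptions made for this example. Commercial filters rely on trained models and many more signals than keyword matching.

```python
import re

# Hypothetical keyword lists, kept short for illustration.
URGENCY = re.compile(r"\b(urgent|immediately|asap|right away)\b", re.IGNORECASE)
SENSITIVE = re.compile(r"\b(wire transfer|gift cards?|password|credentials)\b", re.IGNORECASE)

def flag_email(sender_domain: str, body: str, known_domains: set[str]) -> list[str]:
    """Return the list of heuristic flags raised by one email."""
    flags = []
    if URGENCY.search(body):
        flags.append("urgent_language")
    if SENSITIVE.search(body):
        flags.append("sensitive_request")
    if sender_domain.lower() not in known_domains:
        flags.append("unfamiliar_sender")
    return flags

known = {"example.com"}
print(flag_email("examp1e.com", "Please send the wire transfer immediately.", known))
# ['urgent_language', 'sensitive_request', 'unfamiliar_sender']
print(flag_email("example.com", "Lunch on Friday?", known))
# []
```

Rules like these are easy to evade with careful wording, which is one reason they work best as one layer among several rather than as a standalone defense.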

Multi-Factor Authentication (MFA)

Implementing MFA provides critical protection even when credentials are compromised. Research shows that MFA prevents approximately 96% of bulk phishing attacks and 100% of automated attacks.

However, not all MFA is equally resistant to phishing. Traditional MFA methods like SMS codes or email-based one-time passwords can themselves be phished. Phishing-resistant MFA methods include:

Hardware security keys (like YubiKey)

Biometric authentication (fingerprint or facial recognition)

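Push notification approvals on registered devices, ideally with number matching (more resistant than SMS codes, though not as strong as hardware keys or passkeys)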

Passkeys (cryptographic credentials stored on devices)

These methods are significantly harder for attackers to compromise even with sophisticated phishing techniques.
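
Hardware keys and passkeys resist phishing largely because each credential is bound to the site it was registered with. The toy Python sketch below illustrates that idea only. Real WebAuthn uses public-key signatures and a much richer protocol, whereas this stand-in uses a shared HMAC secret. The class, function, and domain names are all hypothetical.

```python
import hashlib
import hmac
import secrets
from typing import Optional

class ToyAuthenticator:
    """Toy stand-in for a security key: one secret per registered origin."""

    def __init__(self) -> None:
        self._keys: dict[str, bytes] = {}

    def register(self, origin: str) -> bytes:
        key = secrets.token_bytes(32)
        self._keys[origin] = key
        # Simplification: a real authenticator would return a public key here.
        return key

    def sign(self, origin: str, challenge: bytes) -> Optional[bytes]:
        key = self._keys.get(origin)
        if key is None:
            return None  # no credential exists for this origin
        return hmac.new(key, origin.encode() + challenge, hashlib.sha256).digest()

def verify(server_key: bytes, origin: str, challenge: bytes,
           response: Optional[bytes]) -> bool:
    """Server-side check that the response matches its own origin and challenge."""
    if response is None:
        return False
    expected = hmac.new(server_key, origin.encode() + challenge, hashlib.sha256).digest()
    return hmac.compare_digest(expected, response)

real = "https://login.example.com"
fake = "https://login.examp1e.com"  # look-alike domain used by a phishing page

auth = ToyAuthenticator()
server_key = auth.register(real)
challenge = secrets.token_bytes(16)

print(verify(server_key, real, challenge, auth.sign(real, challenge)))  # True
print(verify(server_key, real, challenge, auth.sign(fake, challenge)))  # False
```

Because the browser reports the origin rather than the user, a convincing look-alike page gets nothing it can replay to the real site. A one-time code typed into a fake page offers no such protection.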

Endpoint Security and Privilege Management

Additional technical controls include:

Restricting administrative privileges to limit damage if accounts are compromised

Endpoint detection and response (EDR) solutions that identify suspicious behavior on devices

Regular software updates and patch management to close vulnerabilities

Secure web gateways that block access to malicious sites

The most effective security strategies combine these technical controls with ongoing training. Technical solutions catch many attacks, but determined attackers will eventually get through. When they do, trained employees who recognize something is wrong and report it promptly serve as the critical last line of defense.

How Brightside AI Transforms Spear Phishing Training with OSINT-Powered Simulations

Here's the challenge most organizations face: attackers research their targets thoroughly before attacking. They know what information is exposed about each employee and exactly how to exploit it. Meanwhile, many organizations send generic phishing simulations that look nothing like real attacks.

Brightside AI addresses this gap by scanning each employee's digital footprint across multiple categories: personal information (emails, phone numbers), data leaks (compromised passwords, credentials exposed in breaches), online services (LinkedIn, Netflix, dating sites, registered accounts), personal interests, social connections, and location data.

This scan reveals exactly what attackers can see about each individual.

For each employee, security teams receive vulnerability scores showing risk levels. The platform displays aggregate data and risk metrics while protecting individual privacy in how detailed personal information is accessed and displayed.

With this OSINT data, Brightside AI creates training simulations that mirror real attack patterns. These aren't generic templates—they're AI-generated spear phishing emails personalized to each individual based on their actual digital exposure:

If an employee's LinkedIn shows they work in finance, they might receive simulations about urgent invoices

If their professional profiles mention specific projects, simulations might reference those initiatives

The platform extends beyond email to include voice phishing simulations using AI-generated phone calls that test whether employees can recognize social engineering over the phone. It also prepares teams for video and audio manipulation tactics through deepfake awareness training.

Employees learn through an AI assistant called Brighty. Instead of passive slide presentations, they engage in conversations through a chat interface. The training includes:

Interactive games and challenges

Achievement badges for motivation

Topics from basic phishing recognition to advanced deepfake identification

When employees see exposed data about themselves in their dashboard, they can access guidance from Brighty on exactly how to secure it.

For administrators, the platform tracks progress over time. You can:

Monitor which employees are improving

Identify who needs additional support

Track how overall team vulnerability changes as training progresses

Assign courses individually or to entire teams

Deploy simulation campaigns using pre-made templates or AI-generated scenarios

What makes this approach valuable is the realism. Employees aren't just learning to spot obvious fake emails. They're facing tactics and personalization similar to what real attackers would use. They're building practical resilience, not just checking a compliance box.

What Do Security Leaders Say About AI Phishing?

Opinions among security professionals reflect the complexity of assessing this evolving threat.

Some leaders emphasize AI as a significant emerging threat requiring immediate attention. They point to the rapid improvement in AI capabilities and the increases in attack volume. Pyry Åvist, CTO of Hoxhunt, notes "AI is fueling a new era of social engineering tactics, but it can also be the white hat that helps us fight back". This perspective advocates for proactive investment in AI-powered defenses and modern training approaches.

Others urge careful evaluation of threat scale. They observe that while AI-written phishing is growing, it still represents a small fraction of successful attacks—under 5% of phishing emails that bypass filters in 2024 were definitively AI-generated. These leaders caution against panic-driven spending on solutions that may not address the actual threats most organizations face today.

A third perspective sees AI as both a threat and an opportunity. Yes, attackers have powerful new tools. But defenders do too:

AI can analyze employee behavior patterns

Personalize training content to individual risk profiles

Identify vulnerable individuals who need additional support

Adapt simulations in real-time based on performance

This view holds that organizations shouldn't fear AI technology itself, but should harness it strategically.

Industry analysts warn that "attackers now have near-limitless creative power, and they are using it to outthink traditional security measures". Law enforcement agencies including the FBI urge companies to "adopt multiple technical and training measures" to counter the speed, scale, and automation AI brings to phishing.

Most experts agree on one principle: the gap between attackers and defenders is narrowing, and defenders should act thoughtfully but deliberately.

Putting the AI Phishing Threat in Perspective

This debate affects how organizations allocate limited security budgets, so perspective matters.

The current reality: AI-powered attacks still represent under 5% of successful phishing attacks that bypass email filters. The vast majority of breaches still result from basic phishing, unpatched software, misconfigurations, and other fundamental security issues. Focusing exclusively on AI might distract from core security practices that prevent most incidents.

The forward-looking view: while AI phishing is currently a small percentage of attacks, that percentage is growing significantly. Organizations that wait until AI phishing becomes the dominant threat may find themselves years behind in preparedness when it matters most.

There's also the target segmentation factor. AI isn't replacing all phishing—it's being deployed strategically against high-value targets where return justifies effort. Your average employee might still encounter traditional phishing, but your CFO, legal team, and executives increasingly face AI-powered attacks designed specifically for them.

The balanced approach: organizations should address fundamental security hygiene first—email authentication protocols (SPF, DKIM, DMARC), phishing-resistant MFA, advanced email filtering, and access controls. These technical controls provide baseline protection regardless of whether attacks are AI-generated or traditional. Simultaneously, security awareness training should evolve to address more sophisticated, personalized threats that will increasingly penetrate technical defenses.

Waiting until AI phishing becomes the majority of attacks means playing catch-up when you're already compromised. Acting now doesn't require panic or unlimited spending—it requires thoughtful integration of modern training approaches with strong technical controls.

What Actions Should Your Organization Take Today?

Start by assessing your current security posture holistically. Evaluate both your technical controls and training approaches:

Technical Foundation:

Implement SPF, DKIM, and DMARC email authentication

Deploy advanced email filtering with threat intelligence

Require phishing-resistant MFA for all accounts, especially privileged access

Maintain updated endpoint protection and patch management

Training Assessment:

When was the last time you updated your security awareness program?

Are you relying on annual or quarterly sessions rather than short, frequent training?

Are your simulations realistic enough to teach meaningful skills?

Do you measure actual behavior change or just completion rates?

Understand your attack surface through OSINT. What information about your employees and organization is publicly available? Run scans to see what attackers can find. Many organizations are surprised to discover how much data is exposed.

Move from compliance-based training to behavior-based training. Stop thinking about training as checking a box and start thinking about it as building practical skills. Research suggests short, frequent, realistic simulations produce better outcomes than annual presentations.

Create a culture where reporting suspicious activity is encouraged and rewarded. If employees fear punishment for clicking a phishing link, they won't report when they make mistakes. That means security teams don't know about breaches until it's too late. Make reporting easy, respond quickly, and thank people who report.

Measure what matters. Track these key metrics:

Click rates on simulations

Reporting rates

Time to report

Whether employees report real threats (not just simulations)

Improvement in these metrics indicates your program is building real resilience.
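
These metrics are straightforward to compute once simulation results are recorded. Here is a minimal Python sketch. The `SimulationResult` record and its fields are hypothetical, and the sample data is invented purely to show the calculation.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional

@dataclass
class SimulationResult:
    clicked: bool
    minutes_to_report: Optional[float]  # None if the email was never reported

def summarize(results: list[SimulationResult]) -> dict[str, Optional[float]]:
    """Compute click rate, reporting rate, and median time to report."""
    total = len(results)
    if total == 0:
        raise ValueError("no simulation results to summarize")
    report_times = [r.minutes_to_report for r in results
                    if r.minutes_to_report is not None]
    return {
        "click_rate": sum(r.clicked for r in results) / total,
        "reporting_rate": len(report_times) / total,
        "median_minutes_to_report": median(report_times) if report_times else None,
    }

# Invented sample data for illustration.
sample = [
    SimulationResult(clicked=True, minutes_to_report=None),
    SimulationResult(clicked=False, minutes_to_report=4.0),
    SimulationResult(clicked=False, minutes_to_report=10.0),
    SimulationResult(clicked=True, minutes_to_report=30.0),  # clicked, then reported
]
print(summarize(sample))
```

Note that someone can both click and report, as in the last sample row. Counting those reports matters, because a quick report after a mistake still gives the security team time to respond.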

AI doesn't only improve attackers' capabilities, so consider platforms that use it to improve your defenses. OSINT-powered simulations, adaptive training paths, and personalized learning represent current best practices in security awareness, though organizations should seek independent validation of vendor claims where possible.

Get leadership involved. Security awareness works best when executives participate in training, model good behavior, and make clear that security is everyone's responsibility.

The Bottom Line

AI-powered spear phishing represents a real and growing threat, though it currently accounts for under 5% of the phishing emails that bypass filters. The technology enabling these attacks is improving rapidly and becoming more accessible. Traditional defenses and training methods face new challenges.

But this isn't a reason for panic. It's a catalyst for thoughtful action. The same AI technologies attackers use can strengthen defenses when properly deployed. Modern training platforms create realistic simulations, adapt to individual needs, and produce measurable improvements in employee awareness and behavior.

Your employees remain a critical defense layer when properly trained and supported by strong technical controls. Email authentication protocols, advanced filtering, and phishing-resistant MFA will catch many attacks. But determined attackers will eventually penetrate technical defenses. When they do, you need people who can recognize anomalies and report them before significant damage occurs.

The question isn't whether to invest in better security—it's how to invest strategically. Technical controls provide foundational protection. Modern training builds human resilience against attacks that bypass those controls. Together, they create defense in depth.

Start with understanding what attackers already know about your organization and your people. Implement robust technical controls that prevent most attacks from reaching employees. Then build training that prepares people for the sophisticated, personalized threats that will inevitably get through. Make it continuous, make it realistic, and make it engaging. Track your progress and adjust based on results.

The AI arms race in cybersecurity will only intensify. Organizations that treat security as a strategic priority—combining strong technical defenses with thoughtful human risk management—will be best positioned to meet evolving threats, whether AI-powered or traditional.