
Employee Deepfake Training: Detect Fake Audio & Video

Written by Brightside Team

Published on Oct 15, 2025

A finance worker in Hong Kong joined what seemed like a routine video conference. The CFO was there. Several colleagues joined from different locations. Everyone looked and sounded normal. The CFO requested an urgent wire transfer of $25 million. The finance worker, seeing familiar faces and hearing familiar voices, approved the transaction.

Every other person on that call was fake. Deepfake technology had generated the CFO and every colleague, filling an entire video conference with artificial participants. By the time the fraud came to light, the $25 million was gone.

This case came to light in February 2024, and it represents the new reality of business fraud. We're not talking about poorly edited videos or robotic voices anymore. Modern deepfakes can fool even experienced professionals during live video calls. The technology has reached a point where seeing and hearing someone is no longer proof that they're real.

Your employees probably know about phishing emails. They might even spot suspicious links and fake login pages. But do they know that the video call with your CEO could be completely fabricated? Can they identify when the urgent phone call from your CFO is actually AI-generated audio?

This guide will show you exactly how to prepare your team for deepfake attacks. You'll learn what these threats actually look like, how to build verification systems that work, and why traditional training approaches fail against this sophisticated fraud. By the end, you'll understand how to create an adaptive security culture where employees think critically about every communication, regardless of how convincing it appears.

Why Are Deepfakes the Biggest Threat Your Business Faces in 2025?

What Makes Deepfake Attacks Different from Traditional Fraud?

Think about how trust works in business communication. When someone emails you, there's natural skepticism. Email is text on a screen. It's easy to fake. You've been trained to question emails.

But when you see someone's face on a video call? When you hear their actual voice on the phone? That triggers completely different psychological responses. Your brain says "this is real" because for your entire life, seeing and hearing someone meant they were actually there.

Attackers understand this psychology perfectly. They've moved beyond text-based deception to multimedia manipulation. The shift changes everything about how fraud works.

Creating a deepfake used to require technical skills, expensive software, and significant time investment. Not anymore. Current AI tools can clone a voice using just 30 seconds to 5 minutes of audio samples. Video deepfakes that once took weeks can now be generated in hours or even minutes.

Even more concerning is where attackers source the raw material. Every time your CEO speaks at a conference, that's training data. Every earnings call recording online gives attackers voice samples. LinkedIn videos, company podcasts, YouTube interviews... all of this provides exactly what's needed to create convincing impersonations.

The technology has become accessible too. Deepfake tools are available on both legitimate platforms and dark web marketplaces. Some require minimal technical knowledge. You don't need to be a hacker to create a convincing fake anymore. You just need to know where to look.

How Much Money Are Companies Losing to Deepfake Fraud?

The financial impact is staggering and growing rapidly. Let's look at specific cases that demonstrate what's at stake.

The Hong Kong case we opened with represents the largest known deepfake fraud: $25 million stolen after a single video conference. The finance worker had reportedly been wary of the initial request, but the video call put those doubts to rest. Multiple "colleagues" participated, and the interaction seemed normal. The truth emerged only after the money had been transferred.

In March 2025, Singapore experienced a similar attack. A finance director received a video call that appeared to show senior leadership requesting an urgent transfer. The deepfake was convincing enough that $499,000 moved before anyone questioned its legitimacy. The attackers had studied the company's communication patterns and organizational structure to make the scenario believable.

Voice cloning attacks have proven equally effective. A 2019 case at a UK energy company showed what was possible even with earlier technology: attackers cloned the voice of the chief executive of the firm's German parent company and convinced the UK company's CEO to transfer $243,000. Voice cloning has become dramatically more sophisticated since then.

In early 2025, criminals used a cloned voice of the Italian Defense Minister to solicit payments from prominent business leaders. At least one victim reportedly transferred nearly €1 million before the scheme was exposed.

The FBI's Internet Crime Complaint Center reported roughly $2.8 billion in business email compromise losses for 2024. An increasing share of these attacks now include synthetic voice or video elements, and losses from individual deepfake incidents commonly range from $150,000 to $500,000.

Recovery is rare. Once money moves internationally through cryptocurrency or shell companies, it's usually gone. Insurance might cover some losses, but reputational damage and regulatory consequences extend far beyond the immediate financial hit.

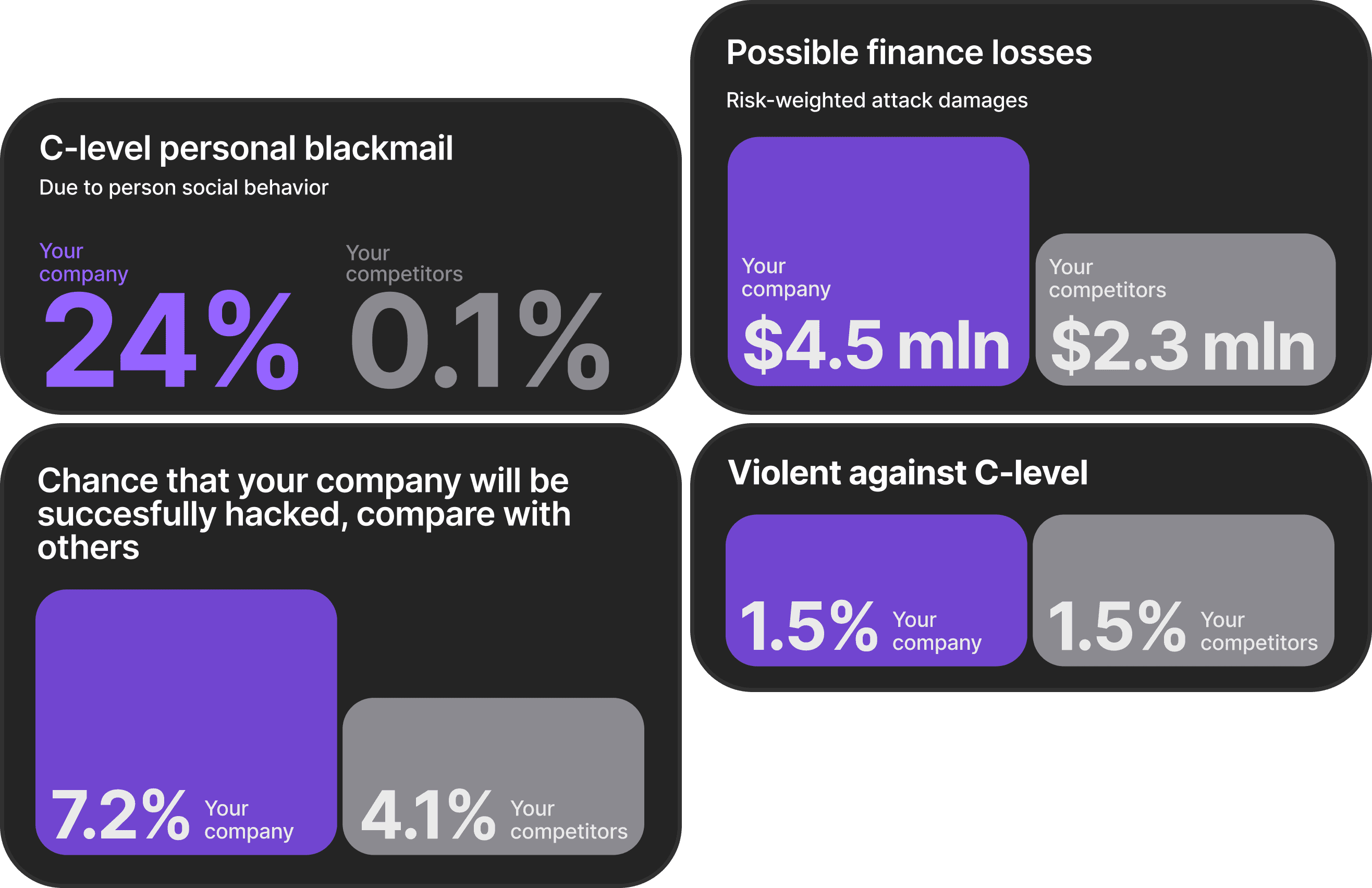

Which Departments and Roles Are Most Vulnerable?

Deepfake attacks target specific people within organizations. Understanding who is most vulnerable helps focus training and protection efforts.

Finance teams represent the primary target. Anyone who can authorize wire transfers or approve payment changes is at risk. Attackers study organizational hierarchies to understand approval workflows. They target people with authority but not enough seniority to question urgent requests from leadership.

HR departments face unique vulnerabilities. Deepfakes can manipulate payroll information, create fake recruitment scenarios, or social engineer access to employee data. A convincing video call from an executive asking to expedite hiring or change payment details can bypass normal verification steps.

Executive assistants have access to sensitive information and direct lines to leadership. They're positioned to approve calendar changes, grant access, or facilitate communications that attackers can exploit. Their role requires responsiveness, which attackers use against them by creating false urgency.

IT and security teams become targets when attackers need access credentials or want to disable security controls. A deepfake call from the CTO requesting emergency access "due to a critical issue" might convince a help desk employee to bypass authentication protocols.

Customer service representatives can be manipulated to change account information, reset passwords, or provide sensitive customer data. Deepfakes that impersonate account holders make social engineering attacks much more convincing.

The common thread? These roles combine decision-making authority with pressure to act quickly. Attackers exploit this combination by creating scenarios where normal verification would seem to cause unacceptable delays.

What Do Deepfake Videos Actually Look Like?

Can You Really Tell the Difference Between Real and Fake?

You probably can't consistently identify high-quality deepfakes just by looking or listening. The technology has improved so dramatically that even trained security professionals struggle with detection.

Early deepfakes had obvious problems. Faces flickered. Voices sounded robotic. Movements looked unnatural. People could spot them with basic attention. Those days are over.

Modern deepfakes blend seamlessly with real video. The faces move naturally. The voices capture emotional nuance. The synchronization between audio and video is essentially perfect. When you're in a stressful work situation dealing with an urgent request, your ability to spot subtle anomalies drops even further.

This doesn't mean detection is impossible. It means relying solely on your eyes and ears is a losing strategy. Effective protection requires verification protocols that don't depend on spotting technical flaws.

Think of it like this: you might notice something feels slightly off. But "slightly off" isn't enough when your CFO is requesting an urgent transfer on a video call. You need concrete verification steps that work regardless of how good the fake looks.

What Are the Visual Red Flags in Deepfake Videos?

While perfect detection is unrealistic, understanding common deepfake artifacts helps employees develop healthy skepticism. Here are the visual indicators that might reveal artificial content.

Watch for unnatural blinking patterns. Real people blink regularly and unconsciously. Early deepfake systems struggled with this, creating videos where people rarely blinked or blinked in strange patterns. Modern deepfakes have improved, but irregular blinking remains a potential indicator.

Examine eye movements and gaze direction. Natural eye movements track objects and shift focus smoothly. Deepfakes sometimes produce eyes that stare unnaturally or don't quite track properly with head movements. If someone seems to be looking through you rather than at you, pay attention.

Check facial expressions against emotional context. Does someone's face match what they're saying? Deepfakes can struggle with complex emotional expressions, especially rapid transitions between emotions. A person delivering bad news with an expression that doesn't quite fit might be artificial.

Look at lighting consistency. Lighting on a person's face should match their environment. Shadows that don't align with apparent light sources, or skin tones that seem inconsistent with background lighting, can indicate manipulation. Deepfakes sometimes show lighting that remains unchanged as people move.

Notice edge artifacts. Where the person meets the background, you might see subtle blurring or color bleeding. This is especially noticeable around hair, which has complex detail that's difficult for AI to recreate perfectly.

Watch for unnatural movements. Body movements should flow naturally with head position and speech. Deepfakes that focus on face manipulation might show disconnection between head and body movements, or transitions that seem slightly jerky.

The challenge is that all of these indicators can have innocent explanations. Poor lighting, bad webcams, and network issues create similar artifacts. That's why visual detection alone can't protect you. These signs should trigger additional verification, not serve as definitive proof.

How Can You Identify Cloned Voice and Audio Deepfakes?

Voice cloning has become frighteningly sophisticated. AI can analyze voice samples and recreate speech patterns, tone, and even emotional inflections. But there are still indicators that might reveal artificial audio.

Listen for emotional flatness. While modern voice clones capture basic tone, they sometimes struggle with genuine emotion. Speech might sound slightly mechanical or lack the natural emotional variation real people show. The words are right, but something about the delivery feels off.

Notice breathing patterns. Real people breathe while speaking. You hear subtle intake of breath between sentences or during pauses. Some voice clones lack these natural breathing sounds or place them awkwardly.

Check for pronunciation oddities. Even sophisticated voice clones occasionally mispronounce words or struggle with complex names. If someone who normally speaks clearly stumbles over words they use regularly, that's suspicious.

Watch for unnatural pauses. Real speech has rhythm. People pause to think, for emphasis, or to breathe. Artificial speech sometimes places pauses in odd locations or maintains too consistent a pace.

Listen to background noise. Real phone calls carry ambient sound: office noise, street sounds, or the acoustic character of the room someone is in. Voice clones sometimes have too-clean audio with no background noise, or background noise that seems disconnected from the supposed environment.

More important than any technical indicator is context. Why is this communication happening? Does the request match normal patterns? Would this person really call you directly for this specific issue?

How Do Verification Protocols Protect Against Sophisticated Deepfakes?

The most effective defense against deepfakes isn't better detection. It's verification systems that work regardless of how convincing the fake appears. This represents a fundamental shift in security thinking toward adaptive security approaches that account for evolving threats.

What Should Employees Do When They Suspect a Deepfake?

Create clear protocols that employees can follow the moment they feel suspicious. These need to be simple enough to remember under pressure but thorough enough to catch sophisticated fraud.

Step one is to pause. Don't proceed with any requested action while you're verifying. Professional courtesy might make you want to comply immediately, but protecting company assets takes priority. Legitimate requests will still be legitimate after verification.

End the communication professionally. Don't tip off potential fraudsters by saying "I think you're a deepfake." Simply indicate you need to check something or will call back shortly. This preserves evidence and prevents attackers from adapting their approach.

Verify through a completely separate channel. This is the critical step. If someone called you, don't call them back at the number they provided. Look up their number independently and call that. If they video conferenced, reach out through your company's messaging system or call their known phone number.

Document everything immediately. Write down what was requested, how the communication occurred, and what felt suspicious. If possible, record or screenshot the interaction. This information is valuable whether the communication was legitimate or fraudulent.

Report to your security team right away. Don't feel embarrassed about reporting a suspicious communication that turns out to be real. Security teams would much rather investigate false alarms than clean up after successful fraud. Organizations need to build cultures where reporting is rewarded, not punished.
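The callback and documentation steps can be sketched in a few lines of code. This example assumes a hypothetical internal directory (`DIRECTORY`) that is the only trusted source of callback numbers; `SuspiciousContact` and `callback_number` are illustrative names, not part of any real product. The number a caller offers is recorded as evidence but never dialed.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Optional

# Hypothetical internal directory: the only trusted source of callback numbers.
DIRECTORY: Dict[str, str] = {
    "cfo@example.com": "+1-555-0100",
    "ceo@example.com": "+1-555-0101",
}


@dataclass
class SuspiciousContact:
    """What an employee documents right after ending a suspicious call."""
    claimed_identity: str                  # who the caller said they were
    channel: str                           # e.g. "phone", "video", "email"
    request: str                           # what was asked for
    caller_supplied_number: Optional[str] = None
    red_flags: List[str] = field(default_factory=list)
    logged_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


def callback_number(contact: SuspiciousContact, directory: Dict[str, str]) -> str:
    """Return the number to call back, taken only from the directory.

    The caller-supplied number is never returned. If it differs from the
    directory entry, that mismatch is noted as a red flag for security.
    """
    if contact.claimed_identity not in directory:
        raise LookupError(
            f"No directory entry for {contact.claimed_identity}; report to security"
        )
    known = directory[contact.claimed_identity]
    supplied = contact.caller_supplied_number
    if supplied is not None and supplied != known:
        contact.red_flags.append("caller-supplied number differs from directory")
    return known


contact = SuspiciousContact(
    claimed_identity="cfo@example.com",
    channel="video",
    request="urgent wire transfer to new vendor account",
    caller_supplied_number="+1-555-9999",
)
print(callback_number(contact, DIRECTORY))  # +1-555-0100
print(contact.red_flags)
```

An identity missing from the directory raises an error instead of falling back to the caller's number, so the only path forward is escalation to the security team.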

Why Do Businesses Need Multi-Channel Verification for Sensitive Requests?

The term "out-of-band verification" sounds technical, but the concept is simple: confirm important requests through a different communication method than the one used to make the request.

If someone calls requesting a wire transfer, confirm via email and in-person approval before executing. If someone emails asking for password resets, call them at their known number before proceeding. If someone video conferences requesting system access, verify through your ticketing system before granting it.

This works because attackers typically compromise one communication channel at a time. Creating a convincing deepfake video call is possible. Simultaneously compromising the person's email, their phone, and your internal messaging system is exponentially harder.
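The out-of-band rule reduces to a simple check: count only confirmations that arrived on a channel other than the one the request came in on. The channel labels in this sketch are hypothetical, and it assumes a callback to a directory number counts as a separate channel from an inbound call, in line with the earlier advice never to dial a number the requester supplies.

```python
from typing import Iterable


def is_out_of_band_verified(
    request_channel: str, confirmation_channels: Iterable[str], required: int = 1
) -> bool:
    """Return True if at least `required` distinct channels, all different
    from the channel the request arrived on, have confirmed it."""
    independent = {ch for ch in confirmation_channels if ch != request_channel}
    return len(independent) >= required


# A convincing video call "confirmed" by more video does not count.
print(is_out_of_band_verified("video_call", ["video_call"]))  # False
# The same request confirmed by calling a known number does.
print(is_out_of_band_verified("video_call", ["video_call", "callback_known_number"]))  # True
```

Collecting the channels into a set means repeated confirmations on one channel count only once, which matches the reasoning above: an attacker who controls a single channel can simply repeat themselves on it.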

For financial transactions, implement multi-tier verification that escalates with dollar amounts. Transfers under $10,000 might require dual approval through your financial system. Transfers over that threshold add a confirmation phone call to a known number. Transfers over $100,000 require in-person or authenticated digital signature approval.
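The tiered rule above can be written as a lookup from transfer amount to required checks. This sketch assumes the checks are cumulative (a large transfer still needs dual approval) and that an amount exactly at a threshold falls into the higher tier; the check names are hypothetical labels, not settings in any particular financial system.

```python
from typing import List

CALLBACK_THRESHOLD_USD = 10_000    # adds a callback to a known number
SIGNATURE_THRESHOLD_USD = 100_000  # adds in-person or digital signature approval


def transfer_checks(amount_usd: float) -> List[str]:
    """Return the verification steps required before a transfer is executed."""
    if amount_usd <= 0:
        raise ValueError("transfer amount must be positive")
    checks = ["dual_approval_in_financial_system"]
    if amount_usd >= CALLBACK_THRESHOLD_USD:
        checks.append("callback_to_known_number")
    if amount_usd >= SIGNATURE_THRESHOLD_USD:
        checks.append("in_person_or_digital_signature")
    return checks


print(transfer_checks(4_500))         # ['dual_approval_in_financial_system']
print(len(transfer_checks(250_000)))  # 3
```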

For access and credentials, require verification through your established ticketing or request systems. Even if the CTO calls requesting emergency access, the request should be logged in your system and confirmed through at least one additional channel.

For HR and payroll changes, confirm through employee self-service portals where individuals must authenticate before making changes. Phone or email requests should trigger secondary verification before any changes are implemented.

The key is making these verification steps standard procedure, not emergency measures. When verification is always required, employees don't have to decide if a situation warrants extra caution. The protocol makes that decision.

Building an Effective Adaptive Security Training Program

Traditional security training shows employees generic examples and hopes they remember the lessons when real threats appear. This approach doesn't work well for rapidly evolving threats like deepfakes. Adaptive security requires training that evolves with the threat landscape and personalizes to each employee's specific vulnerabilities.

Why Do Simulations Work Better Than Lectures?

You can watch a presentation about spotting deepfakes. You can read articles and take quizzes. But until you actually experience a convincing deepfake targeting you specifically, you won't fully grasp how difficult detection is.

Simulation-based training puts employees in realistic scenarios where they must make actual decisions. A phone call comes in from someone who sounds exactly like your CEO. The voice inflection is right. The speaking pattern matches. They're asking you to do something urgent but not completely outside normal bounds. What do you do?

This type of training reveals which employees follow verification protocols and which ones shortcut the process under pressure. It builds muscle memory for the pause-and-verify response that defeats deepfake attacks. Most importantly, it demonstrates that even intelligent, careful people can be fooled.

The feedback is immediate and specific. When an employee clicks a link in a simulated phishing email or complies with a deepfake voice request, they see exactly what they did and why it was problematic. This creates learning moments that stick far better than generic training content.

Organizations implementing realistic deepfake simulations report significant improvement in verification behavior. Employees who have experienced convincing simulations become more cautious with real communications. They understand viscerally that they can't trust audio and video alone.

How Does Digital Footprint Awareness Prevent Deepfake Attacks?

Here's something many organizations miss: the quality of deepfake attacks directly correlates with how much information attackers can gather about their targets. The more an attacker knows about your CEO's voice, appearance, speech patterns, and communication style, the more convincing their deepfake becomes.

This is where OSINT (Open-Source Intelligence) becomes critical. Everything publicly available about your executives and employees is potential ammunition for deepfake creation. LinkedIn profiles show organizational relationships. Conference presentations provide voice and video samples. Company videos demonstrate communication styles. Social media reveals personality traits.

Attackers systematically gather this information before launching attacks. They analyze earnings calls to clone CEO voices. They study video interviews to understand facial mannerisms. They map organizational charts to identify who reports to whom and how approvals flow.

Understanding your digital exposure allows proactive risk reduction. Limiting publicly available audio and video of key executives makes voice and video cloning more difficult. Controlling what information is shared about organizational structure makes targeting decisions harder for attackers.

Platforms like Brightside AI address this by conducting comprehensive OSINT assessments that map what information about executives and employees is publicly accessible. This visibility enables targeted risk mitigation. If your CFO has extensive video content online, you know they're a higher priority for deepfake attacks and can implement additional protections.

The assessment reveals not just what's exposed, but what combination of exposures creates risk. An executive whose voice samples are online, whose organizational position is public, and whose communication patterns are visible through social media creates a perfect target profile. Identifying these high-risk combinations allows strategic protective measures.
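The combination logic might look like the sketch below. The four exposure flags and the rule for a "perfect target profile" come from the paragraph above. The field names, and the simplification that a video deepfake needs both public video and public voice samples, are assumptions for illustration, not how any particular OSINT platform scores risk.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Exposure:
    name: str
    voice_samples_public: bool   # earnings calls, podcasts, conference talks
    video_public: bool           # interviews, company videos
    role_public: bool            # title and reporting line visible, e.g. on LinkedIn
    style_visible: bool          # communication patterns visible on social media


def likely_vectors(e: Exposure) -> List[str]:
    """Deepfake formats an attacker could plausibly build from public material."""
    vectors = []
    if e.voice_samples_public:
        vectors.append("voice_clone")
        if e.video_public:
            vectors.append("video_deepfake")
    return vectors


def is_high_risk(e: Exposure) -> bool:
    """Voice samples + public position + visible style: the target profile above."""
    return e.voice_samples_public and e.role_public and e.style_visible


def prioritize(people: List[Exposure]) -> List[Exposure]:
    """High-risk profiles first, then by how many exposures each person has."""
    def score(e: Exposure) -> Tuple[bool, int]:
        count = sum([e.voice_samples_public, e.video_public, e.role_public, e.style_visible])
        return (is_high_risk(e), count)
    return sorted(people, key=score, reverse=True)


cfo = Exposure("CFO", voice_samples_public=True, video_public=False,
               role_public=True, style_visible=True)
print(likely_vectors(cfo))  # ['voice_clone']
print(is_high_risk(cfo))    # True
```

For a CFO with earnings-call audio online but no public video, the sketch points to voice cloning as the vector to prepare for, rather than video deepfakes.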

How Technology and Human Awareness Work Together in Adaptive Security

Fighting deepfakes requires both technical tools and trained humans. Neither approach works well alone. The goal is creating adaptive security systems where technology and people complement each other's strengths.

What Role Do AI-Powered Training Platforms Play?

Modern security training platforms do more than deliver generic content. They create personalized learning experiences based on individual vulnerabilities and provide real-time support when employees need it.

Consider how adaptive security training works in practice. Instead of showing everyone the same simulated phishing email, the system creates personalized scenarios. A finance employee receives simulations targeting their specific role and authority level. An HR manager sees scenarios relevant to payroll and recruitment. Each simulation reflects the actual threats that person would face.

For deepfake training specifically, this personalization becomes even more critical. Organizations need training simulations that reflect how attackers would actually target their specific environment. This requires creating custom deepfakes using publicly available information about the organization and its leadership. Brightside AI's approach demonstrates this flexibility. The platform can create sophisticated deepfake simulations using OSINT data gathered from your actual digital footprint, or work with specific scenarios and materials you provide.

This matters because generic deepfake training doesn't prepare employees for attacks that exploit your specific vulnerabilities. If your CFO never appears in public videos, training your team to spot video deepfakes of the CFO is less valuable than training them on voice clones, which would be the more likely attack vector given what information is actually available.

How Do You Balance Security with Operational Efficiency?

The biggest pushback against verification protocols is that they slow things down. In competitive business environments, speed matters. How do you implement robust deepfake defenses without creating bureaucratic nightmares?

The answer is risk-based adaptive security approaches. Not every communication needs the same level of verification. A colleague asking when the next meeting is doesn't require multi-channel confirmation. A CFO requesting a $250,000 wire transfer does.

Create verification tiers based on risk:

Low-risk communications (routine questions, scheduling, information sharing) proceed normally without additional verification. These represent the vast majority of business communications.

Medium-risk actions (system access requests, data access, non-financial authorization) require one additional verification step. Typically this means confirming through a secondary channel before proceeding.

High-risk transactions (financial transfers, sensitive data changes, major system modifications) demand multi-channel verification regardless of how legitimate the request appears. This might involve email confirmation plus phone callback plus manager approval.

The key is making the risk assessment automatic. Employees shouldn't have to decide if something is risky enough to verify. The protocol makes that determination based on the action being requested.
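One way to make the tier assignment automatic is a fixed mapping from action type to tier, so no one has to judge risk in the moment. The three tiers and their requirements follow the list above; the action names and step labels are hypothetical. Defaulting unlisted actions to the high-risk tier is a design choice of this sketch, not something the protocol above specifies.

```python
from enum import Enum
from typing import Dict, List


class Tier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Hypothetical action catalogue, grouped by the three tiers described above.
ACTION_TIERS: Dict[str, Tier] = {
    "scheduling": Tier.LOW,
    "routine_question": Tier.LOW,
    "information_sharing": Tier.LOW,
    "system_access_request": Tier.MEDIUM,
    "data_access_request": Tier.MEDIUM,
    "non_financial_authorization": Tier.MEDIUM,
    "financial_transfer": Tier.HIGH,
    "sensitive_data_change": Tier.HIGH,
    "major_system_change": Tier.HIGH,
}

REQUIRED_STEPS: Dict[Tier, List[str]] = {
    Tier.LOW: [],
    Tier.MEDIUM: ["secondary_channel_confirmation"],
    Tier.HIGH: ["email_confirmation", "phone_callback", "manager_approval"],
}


def required_steps(action: str) -> List[str]:
    # Unknown actions are treated as HIGH so an incomplete catalogue fails safe.
    tier = ACTION_TIERS.get(action, Tier.HIGH)
    return list(REQUIRED_STEPS[tier])  # copy, so callers can't alter the policy


print(required_steps("scheduling"))             # []
print(required_steps("system_access_request"))  # ['secondary_channel_confirmation']
```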

Technology helps here too. Systems that automatically require dual authorization for transactions above certain thresholds remove the decision from individuals. Financial platforms that send confirmation requests to multiple parties create verification without adding manual steps. Authentication systems that detect unusual access patterns trigger additional verification automatically.

What Are Your Organization's Next Steps?

Understanding deepfake threats is the first step. Taking action to protect your organization is what actually matters. Here's how to move from awareness to implementation.

How Do You Assess Your Current Vulnerability?

Start by understanding what attackers would see when they research your organization. Conduct an executive exposure assessment. What audio and video content featuring your leadership is publicly available? Where could someone source voice samples of your CEO? What videos show your CFO speaking? These materials are exactly what attackers need to create convincing deepfakes.

Examine your communication and approval processes. Which workflows rely solely on voice or video verification? Where do urgent requests bypass normal controls? What dollar thresholds exist before additional approval is required? Map out your vulnerability points.
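The questions above can double as an audit checklist. This sketch runs them against a hand-written inventory of workflows; the `Workflow` fields and the example entries are hypothetical and would in practice come from your own process documentation.

```python
from dataclasses import dataclass
from typing import List, Optional, Set

VOICE_OR_VIDEO = {"phone", "video"}


@dataclass
class Workflow:
    name: str
    verification_channels: Set[str]     # how requests are confirmed today
    urgent_bypass_allowed: bool         # can "urgent" requests skip normal controls?
    handles_payments: bool = False
    extra_approval_above_usd: Optional[float] = None  # None means no threshold defined


def audit(wf: Workflow) -> List[str]:
    """Return the vulnerability points found in one workflow."""
    findings = []
    if not wf.verification_channels:
        findings.append("no verification step")
    elif wf.verification_channels <= VOICE_OR_VIDEO:  # subset: only voice/video
        findings.append("relies solely on voice or video")
    if wf.urgent_bypass_allowed:
        findings.append("urgent requests bypass normal controls")
    if wf.handles_payments and wf.extra_approval_above_usd is None:
        findings.append("no dollar threshold for additional approval")
    return findings


inventory = [
    Workflow("vendor bank-detail change", {"phone"},
             urgent_bypass_allowed=True, handles_payments=True),
    Workflow("help desk password reset", {"phone", "ticketing_system"},
             urgent_bypass_allowed=False),
]
for wf in inventory:
    print(wf.name, "->", audit(wf))
```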

Survey your employees about their current awareness. Do they know what deepfakes are? Can they identify indicators? Do they know your verification protocols? Would they feel comfortable questioning an urgent request from leadership? The answers reveal where training is needed most.

Review your technology stack. What detection capabilities exist in your current security tools? Do your communication platforms offer authentication features you're not using? Where are the gaps in your technical defenses?

Platforms like Brightside AI streamline this assessment process by automating the digital footprint analysis. Instead of manually searching for exposed information about your executives and employees, the OSINT-powered system maps the landscape systematically. You get a clear picture of who is most exposed and what specific information attackers could weaponize.

Top 5 Cybersecurity Platforms for Deepfakes

Modern deepfake phishing and AI-powered social engineering attacks are reshaping the cybersecurity landscape, making specialized awareness training platforms more important than ever. Below are the top five deepfake awareness training platforms for 2025: Brightside AI, Adaptive Security, Jericho Security, Hoxhunt, and Revel8. Each platform brings innovative features, realistic simulations, and industry-specific strengths to help organizations of all sizes keep their people cyber-aware and secure.

Brightside AI

Brightside AI stands out with a Swiss-built, privacy-first security platform designed to safeguard both organizations and individual employees. Leveraging advanced open-source intelligence (OSINT), Brightside maps digital footprints to identify personal and corporate vulnerabilities, reducing the risk of successful deepfake and phishing attacks. The training modules cover email, voice, and video threats with AI-driven simulations that are tailored to each employee's real exposure. Admin dashboards offer extensive vulnerability metrics, completion tracking, and privacy management tools. Gamified learning experiences (including mini-games and achievement badges) make security training memorable, while users benefit from personalized privacy action plans guided by Brighty's AI chatbot. Notably, Brightside's hybrid approach strengthens both personal privacy and corporate security, which is especially impactful for sectors handling sensitive personal data.[^1]

Pros:

Comprehensive OSINT mapping identifies real vulnerabilities across six data categories before attackers can exploit them

Dual-purpose platform protects both corporate infrastructure and individual employee privacy

Gamified training with leaderboards and mini-games drives engagement and retention

Privacy-first Swiss approach with GDPR compliance built in

Personalized AI coaching with Brighty chatbot provides ongoing privacy guidance

Cons:

Smaller market presence compared to established competitors

Limited third-party integrations compared to enterprise-focused platforms

May require cultural adaptation for organizations not prioritizing individual privacy management

Adaptive Security

Adaptive Security leads the field in multichannel deepfake and AI-powered phishing simulations. With extensive coverage across email, voice, video, and SMS, Adaptive Security runs simulations built on open-source data, mimicking exactly how attackers operate in the wild. Its conversational red teaming agents offer hyper-realistic, ever-changing scenarios matched to a company's evolving digital footprint. Adaptive Security distinguishes itself with seamless integration (100+ SaaS connectors), instant onboarding for employees, and board-ready reporting to demonstrate progress and ROI to executives. The platform is particularly strong for large, regulated organizations that need proof of compliance and smart risk assessment alongside agile, multi-vector training coverage.[^2][^3]

Pros:

Multi-channel simulations across email, voice, video, and SMS provide comprehensive coverage

Executive deepfakes and company OSINT create highly personalized, realistic training scenarios

100+ SaaS integrations enable instant provisioning and seamless deployment

Board-ready reporting and compliance dashboards simplify executive communication

AI content creator allows rapid customization of training modules

Cons:

Premium pricing may be prohibitive for smaller organizations

Limited email customization options for HR-branded communications

Complexity of features may require dedicated admin resources for optimization

Screenshot of Adaptive Security's platform highlighting AI-powered deepfake phishing simulations and role-based training features.

Jericho Security

Jericho Security delivers unified, AI-driven simulations and defense, bridging the gap between training, threat detection, and automated remediation. Its platform allows enterprise security teams to run dynamic, hyper-personalized phishing simulations across all channels: email, voice, video, and SMS. By using real attacker techniques, including open-source intelligence and behavioral profiling, Jericho Security provides realistic, adaptive training that responds to changes in both user behavior and the threat environment. The system ties simulation outcomes directly to role-based dashboards and "human firewall" scores, so organizations always know where their risk is highest. Well suited to regulated and compliance-heavy industries, Jericho's fully adaptive approach stands out for linking learning, simulation, and detection in a continuous loop, building organizational resilience from the inside out.

Pros:

Generative AI creates hyper-personalized spear phishing simulations instead of templates

Unified platform integrates training, threat detection, and autonomous remediation

24-hour custom content turnaround for urgent or time-sensitive threats

Native multilingual support with one-click deployment for global organizations

Cons:

Newer market entrant with less established track record than legacy vendors

May have steeper learning curve for teams accustomed to traditional template-based tools

Premium feature set may exceed requirements for smaller organizations

Hoxhunt

Hoxhunt is recognized for its highly engaging, gamified approach, transforming phishing and deepfake detection into a continuous learning challenge. Employees encounter realistic deepfake simulations that recreate multi-step attacks, such as CEO voice impersonation during a simulated Microsoft Teams video call. Hoxhunt's platform is unique for its consent-first, responsible simulations: every scenario is pre-scripted, brief, and accompanied by instant micro-training if a risky action is taken, keeping learning stress-free and effective. The system includes detailed leaderboards, achievements, and progress metrics designed to keep large, distributed workforces motivated and alert. Real-time campaign analytics give administrators granular visibility into user behavior, with automated follow-up training for those at risk. Hoxhunt is the platform of choice for organizations wanting adaptive, ethical, and measurable behavioral change across all levels.

Pros:

Real-time, user-level feedback after every action builds lasting security reflexes

Micro-training delivered immediately after simulations creates strong retention

Gamification with leaderboards and achievements drives sustained engagement

Personalized training adapts to role, language, performance, and risk profile

Reduces admin burden through automation and adaptive content delivery

Cons:

Micro-training offers few in-depth options for users seeking comprehensive education

No visibility into pass/fail rates across user populations within simulations

May require dedicated resources for custom integration workflows in complex environments

Image: Hoxhunt's gamified cybersecurity awareness training dashboard, showing user progress, leaderboard status, streaks, and achievements.

Revel8

Revel8 leverages cutting-edge AI to train employees against the full spectrum of modern cyber threats, with a special focus on deepfakes, voice cloning, and multi-channel attacks. The platform uniquely combines OSINT-driven personalization (adapting simulated attacks based on public company data) with real-time human threat intelligence for up-to-date, relevant scenario design. Gamification is deeply embedded, featuring real-time leaderboards, interactive modules, and points to encourage ongoing participation and learning. Revel8's dashboard includes compliance benchmarking and a proprietary "Human Firewall Index" that quantifies organizational awareness and tracks progress against standards like NIS2 and ISO 27001. This makes it ideal for compliance-driven organizations seeking granular risk insights and measurable improvement.

Pros:

OSINT-driven personalization creates realistic, targeted attack scenarios based on actual company data

Real-time threat intelligence keeps training content current with emerging attack vectors

Human Firewall Index provides quantifiable security culture metrics

GenAI simulation playlists deliver adaptive, multi-channel training experiences

Cons:

Limited market visibility and customer review availability compared to established platforms

German market focus may limit language and regional customization for global enterprises

Smaller integration ecosystem compared to enterprise-focused competitors

| Platform | Deepfake Simulation Channels | Gamification | Key Differentiator | Best For |
|---|---|---|---|---|
| Brightside AI | Email, Voice, Video | Interactive mini-games, leaderboards | OSINT mapping + integrated privacy management | Privacy-focused organizations, SMB to Enterprise |
| Adaptive Security | Email, Voice, Video, SMS | Yes | Multi-channel AI emulation + compliance reporting | Mid-market to Enterprise, regulated sectors |
| Jericho Security | Email, Voice, Video, SMS | Human risk scoring | Unified adaptive platform for simulation, detection, remediation | Enterprise, highly regulated industries |
| Hoxhunt | Email, Voice, Video | Full platform gamification, leaderboards, badges | Gamified adaptive training + consent-first realism | Mid-market to Enterprise, behavioral change focus |
| Revel8 | Email, Voice, Video | Leaderboards, interactive modules, points | Personalized OSINT risk + live threat intel | Compliance-driven SMB to Enterprise |


What Immediate Actions Protect Against Deepfake Fraud?

You can implement several protections today that significantly reduce your risk:

Establish dual authorization for wire transfers above $10,000. Require two different people to approve any transfer over this threshold, and require both approvals to be recorded in your financial system rather than given verbally or by email.
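The dual-authorization rule is simple enough to encode as a check in a payment workflow. The sketch below is a hypothetical illustration, not a feature of any particular financial system. Several details are assumptions made up for this example: the `Approval` and `TransferRequest` types, the `"financial_system"` channel label, and the rule that transfers at or below the threshold need a single system approval. Its key property is that approvals given by voice, video, or email do not count, however convincing the person giving them seemed.

```python
from dataclasses import dataclass, field

DUAL_APPROVAL_THRESHOLD = 10_000  # transfers above this need two approvers


@dataclass(frozen=True)
class Approval:
    approver_id: str
    channel: str  # e.g. "financial_system", "email", "phone", "video_call"


@dataclass
class TransferRequest:
    amount: float
    approvals: list[Approval] = field(default_factory=list)


def is_authorized(request: TransferRequest) -> bool:
    """Check a transfer against the dual-authorization rule.

    Only approvals recorded in the financial system count. Verbal, email, and
    video-call "approvals" are ignored, however convincing they were.
    Repeated approvals from the same person count once.
    """
    system_approvers = {
        a.approver_id for a in request.approvals if a.channel == "financial_system"
    }
    # Assumption (hypothetical policy): transfers at or below the threshold
    # still need one approval recorded in the system.
    required = 2 if request.amount > DUAL_APPROVAL_THRESHOLD else 1
    return len(system_approvers) >= required
```

In this sketch, a $25 million request with one system approval and one "approval" on a video call is rejected until a second person approves it inside the financial system.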

Create callback verification protocols for all financial requests. If anyone requests a money transfer by phone or video, your policy should require calling them back at a known number before proceeding. The callback number must come from your internal directory, never from the communication requesting the transfer.
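The crucial detail in callback verification is where the number comes from. In this minimal sketch, `callback_number`, `UnknownRequesterError`, and the `directory` dictionary are hypothetical stand-ins for your own internal directory lookup. The function accepts the number offered in the request only to make the point that this number is never used.

```python
from typing import Optional


class UnknownRequesterError(Exception):
    """The requester is not in the internal directory; escalate instead of calling."""


def callback_number(
    requester_id: str,
    directory: dict[str, str],
    number_in_request: Optional[str] = None,
) -> str:
    """Return the number to call back before acting on a financial request.

    The number always comes from the internal directory. `number_in_request`
    (a caller ID, an email signature, "call me on my new mobile") is
    deliberately ignored, because whoever sent the request controls it.
    """
    if requester_id not in directory:
        raise UnknownRequesterError(
            f"{requester_id!r} is not in the internal directory; escalate to security"
        )
    return directory[requester_id]
```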

Implement code word systems for executive authentication. When your CEO calls requesting urgent action, they must provide a pre-established code word that verifies their identity. Change these codes regularly and communicate them only through secure channels.
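Code words are shared secrets, so they deserve the same handling as passwords. The sketch below is one possible approach under stated assumptions. It uses a 30-day rotation period, which is an arbitrary example value. It stores only a salted PBKDF2 hash of each code word and compares guesses with `hmac.compare_digest`. `CodeWordRecord`, `hash_code_word`, and `ROTATION_PERIOD` are illustrative names, not part of any product.

```python
import hashlib
import hmac
import os
from datetime import date, timedelta

ROTATION_PERIOD = timedelta(days=30)  # assumption: code words rotate monthly


def hash_code_word(code_word: str, salt: bytes) -> bytes:
    # Normalize case and whitespace so "Blue  Heron" and "blue heron" match.
    normalized = " ".join(code_word.lower().split())
    return hashlib.pbkdf2_hmac("sha256", normalized.encode("utf-8"), salt, 100_000)


class CodeWordRecord:
    """Stores a salted hash of the current code word, never the word itself."""

    def __init__(self, code_word: str, issued_on: date):
        self.salt = os.urandom(16)
        self.digest = hash_code_word(code_word, self.salt)
        self.issued_on = issued_on

    def is_expired(self, today: date) -> bool:
        return today - self.issued_on > ROTATION_PERIOD

    def verify(self, spoken: str, today: date) -> bool:
        # An expired code word fails even if it is spoken correctly.
        if self.is_expired(today):
            return False
        candidate = hash_code_word(spoken, self.salt)
        # Constant-time comparison avoids leaking how many bytes matched.
        return hmac.compare_digest(candidate, self.digest)
```

The code word itself should still be distributed only through secure channels. Hashing protects the stored copy, not the moment it is shared.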

Train your finance and HR teams on deepfake awareness as an immediate priority. These departments are the most likely targets. They need to understand the threat and know the verification protocols before they face a real attack.

Conduct an emergency review of your highest-risk processes. Identify the three or four scenarios where a deepfake could cause the most damage (likely wire transfers, payroll changes, system access grants). Implement additional verification for these specific scenarios immediately.
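Once you have picked your highest-risk scenarios, the additional verification can be expressed as a table of required checks. In this hypothetical sketch, the scenario names and check names in `REQUIRED_CHECKS` are placeholders to replace with your own. `may_proceed` has no input for how convincing the caller looked or sounded. That omission is deliberate, because a good enough deepfake would pass any such test.

```python
# Hypothetical mapping from high-risk scenario to the checks it requires.
REQUIRED_CHECKS: dict[str, frozenset[str]] = {
    "wire_transfer": frozenset({"callback", "dual_approval"}),
    "payroll_change": frozenset({"callback", "hr_system_ticket"}),
    "access_grant": frozenset({"callback", "manager_approval_in_iam"}),
}


def may_proceed(scenario: str, completed_checks: set[str]) -> tuple[bool, set[str]]:
    """Return (allowed, missing_checks) for a request in a given scenario.

    Whether the requester "looked and sounded like the CEO" is intentionally
    not an input: only completed, out-of-band checks decide the outcome.
    """
    required = REQUIRED_CHECKS.get(scenario)
    if required is None:
        # Not a designated high-risk scenario; normal processes apply.
        return True, set()
    missing = set(required - completed_checks)
    return not missing, missing
```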

Deploy incident reporting channels that make it easy and non-threatening for employees to report suspicious communications, such as anonymous reporting forms or a dedicated security team channel. Make clear that a false alarm is always better than a missed threat.

How Do You Build Long-Term Adaptive Security Against Deepfakes?

Immediate protections are important, but comprehensive defense requires strategic implementation over time. Build your deepfake defense program in phases.

Phase one focuses on awareness and basic protocols (months 1-2). Conduct organization-wide training on what deepfakes are and why they matter. Implement your first verification protocols for high-risk transactions. Establish reporting procedures and ensure everyone knows how to use them.

Phase two introduces realistic testing (months 3-4). Begin running deepfake simulations tailored to your organization. These might include voice-cloned phone calls to finance staff or video messages from supposed executives. Track who follows verification protocols and who doesn't. Provide immediate feedback and additional training for those who struggle.
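Tracking who follows protocol in phase two needs little more than a per-employee tally. This sketch assumes each simulation produces a record with a `followed_protocol` flag; the `SimulationResult` type is hypothetical. By default, the sketch flags anyone who skipped verification even once for follow-up training. Loosen `min_rate` if that is too strict for your program.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class SimulationResult:
    """One employee's outcome in one simulated deepfake attack (hypothetical format)."""
    employee: str
    scenario: str             # e.g. "voice-cloned CFO requests wire transfer"
    followed_protocol: bool   # verified (callback, code word) before acting


def adherence_by_employee(results: list[SimulationResult]) -> dict[str, float]:
    """Fraction of each employee's simulations in which they followed protocol."""
    followed: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for r in results:
        total[r.employee] += 1
        if r.followed_protocol:
            followed[r.employee] += 1
    return {emp: followed[emp] / total[emp] for emp in total}


def needs_follow_up(results: list[SimulationResult], min_rate: float = 1.0) -> list[str]:
    """Employees whose adherence falls below `min_rate`, sorted by name."""
    rates = adherence_by_employee(results)
    return sorted(emp for emp, rate in rates.items() if rate < min_rate)
```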

Phase three expands technical capabilities (months 5-6). Implement AI-powered detection tools and real-time assistance platforms. Deploy systems that can analyze suspicious communications and provide guidance to employees at the moment of decision. Reduce your digital footprint exposure by removing unnecessary public information about executives.

Phase four establishes continuous improvement (ongoing). Regular simulation exercises keep skills sharp. Threat intelligence updates ensure training reflects current attack methods. Performance metrics show whether your program is actually reducing risk. The program adapts as deepfake technology evolves.
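One way to check whether the program is improving is to compare early and recent simulation rounds. The sketch below assumes you record three counts per round: employees targeted, employees who ran the verification protocol, and employees who reported the attempt. `RoundMetrics` and `shows_improvement` are hypothetical names. Rising verification and report rates are proxies for reduced risk, not proof of it, and the three-round window is an arbitrary choice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RoundMetrics:
    """Aggregate results of one simulation round (hypothetical record format)."""
    label: str      # e.g. "2025-Q1 voice-clone campaign"
    targeted: int   # employees who received the simulation
    verified: int   # employees who ran the verification protocol before acting
    reported: int   # employees who reported the attempt

    @property
    def verification_rate(self) -> float:
        return self.verified / self.targeted

    @property
    def report_rate(self) -> float:
        return self.reported / self.targeted


def _mean(values: list[float]) -> float:
    return sum(values) / len(values)


def shows_improvement(history: list[RoundMetrics], window: int = 3) -> bool:
    """Return True if the last `window` rounds beat the first `window` rounds.

    "Beat" means a higher average verification rate and an average report
    rate that has not fallen. Rounds must be in chronological order.
    """
    if len(history) < 2 * window:
        raise ValueError("need at least 2 * window rounds to compare")
    early, recent = history[:window], history[-window:]
    early_verify = _mean([r.verification_rate for r in early])
    recent_verify = _mean([r.verification_rate for r in recent])
    early_report = _mean([r.report_rate for r in early])
    recent_report = _mean([r.report_rate for r in recent])
    return recent_verify > early_verify and recent_report >= early_report
```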

The most effective programs integrate multiple protective layers. OSINT-powered vulnerability assessment shows what information attackers could exploit. Customized simulation training prepares employees for realistic threats. Real-time AI assistance supports decision-making when suspicious communications arrive. Together, these create adaptive security that evolves with the threat landscape.

Organizations using Brightside AI benefit from this integrated approach. The platform combines comprehensive digital footprint analysis with flexible simulation capabilities, so these capabilities work as one cohesive defense program rather than as separate tools. Whether your organization needs voice phishing simulations based on publicly available executive audio or custom deepfake scenarios tailored to specific departments, the platform adapts to your requirements without requiring your team to become deepfake experts.

Your Defense Starts With Understanding the Real Threat

The finance worker in Hong Kong who transferred $25 million had every reason to trust what they saw and heard. The technology was sophisticated. The scenario was believable. The pressure was real. They made a decision that seemed entirely reasonable at the time.

That's the terrifying reality of deepfakes. They defeat your natural ability to trust what you see and hear. They exploit the psychological shortcuts that make normal business communication efficient. They turn your organization's speed and responsiveness into vulnerabilities.

But deepfakes aren't magic. They're technology. And technology-enabled threats can be defeated with the right combination of awareness, processes, and tools. Employees who know what to look for become more skeptical. Verification protocols that don't rely on visual or audio identification remain effective regardless of how good the fake is. Systems that provide real-time assistance help people make better decisions under pressure.

The question isn't whether your organization will be targeted. As deepfake technology becomes more accessible, attacks will increase. The question is whether your team will be prepared when it happens. Will your employees pause and verify? Will your processes catch fraud before money moves? Will your organization recover quickly from an attempt, or slowly from a successful attack?

Adaptive security approaches that evolve with emerging threats give you the best chance of staying protected. Start with understanding your exposure. Implement basic verification protocols immediately. Build toward comprehensive training that uses realistic simulations. Deploy technology that supports human decision-making rather than replacing it.

The deepfake threat is real, it's growing, and it's incredibly sophisticated. But organizations that take it seriously and implement layered defenses can protect themselves effectively. Don't wait until you're cleaning up after an incident to build these protections. Start today.